mirror of

https://github.com/Farama-Foundation/Gymnasium.git

synced 2025-07-31 22:04:31 +00:00

Update tutorial ci (#1344)

This commit is contained in:

@@ -1,7 +1,9 @@

|

||||

Tutorials

|

||||

=========

|

||||

In this section, we cover some of the most well-known benchmarks of RL including the Frozen Lake, Black Jack, and Training using REINFORCE for Mujoco.

|

||||

|

||||

Additionally, we provide a guide on how to load custom quadruped robot environments, implementing custom wrappers, creating custom environments, handling time limits, and training A2C with Vector Envs and Domain Randomization.

|

||||

We provide two sets of tutorials: basics and training.

|

||||

|

||||

Lastly, there is a guide on third-party integrations with Gymnasium.

|

||||

* The aim of the basics tutorials is to showcase the fundamental API of Gymnasium to help users implement it

|

||||

* The most common application of Gymnasium is for training RL agents, the training tutorials aim to show a range of example implementations for different environments

|

||||

|

||||

Additionally, we provide the third party tutorials as a link for external projects that utilise Gymnasium that could help users.

|

||||

|

||||

@@ -1,16 +1,10 @@

|

||||

Gymnasium Basics Documentation Links

|

||||

Gymnasium Basics

|

||||

----------------

|

||||

Load custom quadruped robot environments link: https://gymnasium.farama.org/tutorials/gymnasium_basics/load_quadruped_model/

|

||||

|

||||

Implementing Custom Wrappers link: https://gymnasium.farama.org/tutorials/gymnasium_basics/implementing_custom_wrappers/

|

||||

|

||||

Make your own custom environment(environment_creation.py): https://gymnasium.farama.org/tutorials/gymnasium_basics/environment_creation/

|

||||

|

||||

Handling Time Limits: https://gymnasium.farama.org/tutorials/gymnasium_basics/handling_time_limits/

|

||||

|

||||

Training A2C with Vector Envs and Domain Randomization: https://gymnasium.farama.org/tutorials/gymnasium_basics/vector_envs_tutorial/

|

||||

|

||||

.. toctree::

|

||||

:hidden:

|

||||

:hidden:

|

||||

|

||||

/tutorials/gymnasium_basics/load_quadruped_model.md

|

||||

environment_creation

|

||||

implementing_custom_wrappers

|

||||

handling_time_limits

|

||||

load_quadruped_model

|

||||

|

||||

@@ -58,16 +58,16 @@ Answer the questions, and when it's finished you should get a project structure

|

||||

|

||||

.

|

||||

├── gymnasium_env

|

||||

│ ├── envs

|

||||

│ │ ├── grid_world.py

|

||||

│ │ └── __init__.py

|

||||

│ ├── __init__.py

|

||||

│ └── wrappers

|

||||

│ ├── clip_reward.py

|

||||

│ ├── discrete_actions.py

|

||||

│ ├── __init__.py

|

||||

│ ├── reacher_weighted_reward.py

|

||||

│ └── relative_position.py

|

||||

│ ├── envs

|

||||

│ │ ├── grid_world.py

|

||||

│ │ └── __init__.py

|

||||

│ ├── __init__.py

|

||||

│ └── wrappers

|

||||

│ ├── clip_reward.py

|

||||

│ ├── discrete_actions.py

|

||||

│ ├── __init__.py

|

||||

│ ├── reacher_weighted_reward.py

|

||||

│ └── relative_position.py

|

||||

├── LICENSE

|

||||

├── pyproject.toml

|

||||

└── README.md

|

||||

|

||||

@@ -32,9 +32,9 @@ Before following this tutorial, make sure to check out the docs of the :mod:`gym

|

||||

# observation wrapper like this:

|

||||

|

||||

import numpy as np

|

||||

from gym import ActionWrapper, ObservationWrapper, RewardWrapper, Wrapper

|

||||

|

||||

import gymnasium as gym

|

||||

from gymnasium import ActionWrapper, ObservationWrapper, RewardWrapper, Wrapper

|

||||

from gymnasium.spaces import Box, Discrete

|

||||

|

||||

|

||||

@@ -69,12 +69,12 @@ class DiscreteActions(ActionWrapper):

|

||||

return self.disc_to_cont[act]

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

env = gym.make("LunarLanderContinuous-v2")

|

||||

wrapped_env = DiscreteActions(

|

||||

env, [np.array([1, 0]), np.array([-1, 0]), np.array([0, 1]), np.array([0, -1])]

|

||||

)

|

||||

print(wrapped_env.action_space) # Discrete(4)

|

||||

env = gym.make("LunarLanderContinuous-v3")

|

||||

# print(env.action_space) # Box(-1.0, 1.0, (2,), float32)

|

||||

wrapped_env = DiscreteActions(

|

||||

env, [np.array([1, 0]), np.array([-1, 0]), np.array([0, 1]), np.array([0, -1])]

|

||||

)

|

||||

# print(wrapped_env.action_space) # Discrete(4)

|

||||

|

||||

|

||||

# %%

|

||||

|

||||

@@ -1,247 +0,0 @@

|

||||

Load custom quadruped robot environments

|

||||

================================

|

||||

|

||||

In this tutorial we will see how to use the `MuJoCo/Ant-v5` framework to create a quadruped walking environment, using a model file (ending in `.xml`) without having to create a new class.

|

||||

|

||||

Steps:

|

||||

|

||||

0. Get your **MJCF** (or **URDF**) model file of your robot.

|

||||

- Create your own model (see the [Guide](https://mujoco.readthedocs.io/en/stable/m22odeling.html)) or,

|

||||

- Find a ready-made model (in this tutorial, we will use a model from the [**MuJoCo Menagerie**](https://github.com/google-deepmind/mujoco_menagerie) collection).

|

||||

1. Load the model with the `xml_file` argument.

|

||||

2. Tweak the environment parameters to get the desired behavior.

|

||||

1. Tweak the environment simulation parameters.

|

||||

2. Tweak the environment termination parameters.

|

||||

3. Tweak the environment reward parameters.

|

||||

4. Tweak the environment observation parameters.

|

||||

3. Train an agent to move your robot.

|

||||

|

||||

|

||||

The reader is expected to be familiar with the `Gymnasium` API & library, the basics of robotics, and the included `Gymnasium/MuJoCo` environments with the robot model they use. Familiarity with the **MJCF** file model format and the `MuJoCo` simulator is not required but is recommended.

|

||||

|

||||

Setup

|

||||

------

|

||||

We will need `gymnasium>=1.0.0`.

|

||||

|

||||

```sh

|

||||

pip install "gymnasium>=1.0.0"

|

||||

```

|

||||

|

||||

Step 0.1 - Download a Robot Model

|

||||

-------------------------

|

||||

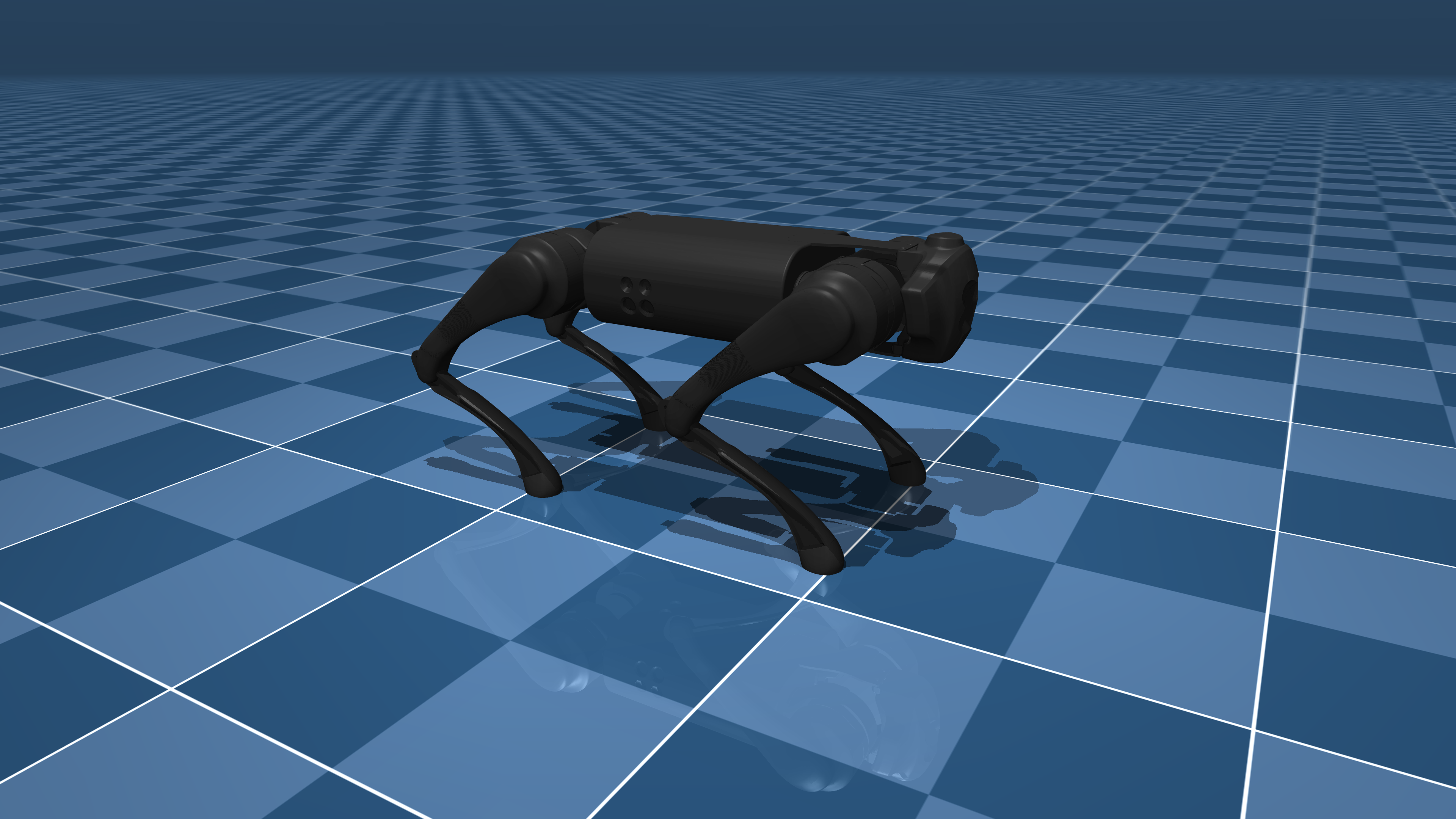

In this tutorial we will load the [Unitree Go1](

|

||||

https://github.com/google-deepmind/mujoco_menagerie/blob/main/unitree_go1/README.md) robot from the excellent [MuJoCo Menagerie](https://github.com/google-deepmind/mujoco_menagerie) robot model collection.

|

||||

|

||||

|

||||

`Go1` is a quadruped robot, controlling it to move is a significant learning problem, much harder than the `Gymnasium/MuJoCo/Ant` environment.

|

||||

|

||||

We can download the whole MuJoCo Menagerie collection (which includes `Go1`),

|

||||

```sh

|

||||

git clone https://github.com/google-deepmind/mujoco_menagerie.git

|

||||

```

|

||||

You can use any other quadruped robot with this tutorial, just adjust the environment parameter values for your robot.

|

||||

|

||||

|

||||

Step 1 - Load the model

|

||||

-------------------------

|

||||

To load the model, all we have to do is use the `xml_file` argument with the `Ant-v5` framework.

|

||||

|

||||

```py

|

||||

import gymnasium

|

||||

import numpy as np

|

||||

env = gymnasium.make('Ant-v5', xml_file='./mujoco_menagerie/unitree_go1/scene.xml')

|

||||

```

|

||||

|

||||

Although this is enough to load the model, we will need to tweak some environment parameters to get the desired behavior for our environment, for now we will also explicitly set the simulation, termination, reward and observation arguments, which we will tweak in the next step.

|

||||

|

||||

```py

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(0, np.inf),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0,

|

||||

frame_skip=1,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

Step 2 - Tweaking the Environment Parameters

|

||||

-------------------------

|

||||

Tweaking the environment parameters is essential to get the desired behavior for learning.

|

||||

In the following subsections, the reader is encouraged to consult the [documentation of the arguments](https://gymnasium.farama.org/main/environments/mujoco/ant/#arguments) for more detailed information.

|

||||

|

||||

|

||||

|

||||

Step 2.1 - Tweaking the Environment Simulation Parameters

|

||||

-------------------------

|

||||

The arguments of interest are `frame_skip`, `reset_noise_scale` and `max_episode_steps`.

|

||||

|

||||

We want to tweak the `frame_skip` parameter to get `dt` to an acceptable value (typical values are `dt` $\in [0.01, 0.1]$ seconds),

|

||||

|

||||

Reminder: $dt = frame\_skip \times model.opt.timestep$, where `model.opt.timestep` is the integrator time step selected in the MJCF model file.

|

||||

|

||||

The `Go1` model we are using has an integrator timestep of `0.002`, so by selecting `frame_skip=25` we can set the value of `dt` to `0.05s`.

|

||||

|

||||

To avoid overfitting the policy, `reset_noise_scale` should be set to a value appropriate to the size of the robot, we want the value to be as large as possible without the initial distribution of states being invalid (`Terminal` regardless of control actions), for `Go1` we choose a value of `0.1`.

|

||||

|

||||

And `max_episode_steps` determines the number of steps per episode before `truncation`, here we set it to 1000 to be consistent with the based `Gymnasium/MuJoCo` environments, but if you need something higher you can set it so.

|

||||

|

||||

|

||||

```py

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(0, np.inf),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1, # set to avoid policy overfitting

|

||||

frame_skip=25, # set dt=0.05

|

||||

max_episode_steps=1000, # kept at 1000

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

Step 2.2 - Tweaking the Environment Termination Parameters

|

||||

-------------------------

|

||||

Termination is important for robot environments to avoid sampling "useless" time steps.

|

||||

|

||||

The arguments of interest are `terminate_when_unhealthy` and `healthy_z_range`.

|

||||

|

||||

We want to set `healthy_z_range` to terminate the environment when the robot falls over, or jumps really high, here we have to choose a value that is logical for the height of the robot, for `Go1` we choose `(0.195, 0.75)`.

|

||||

Note: `healthy_z_range` checks the absolute value of the height of the robot, so if your scene contains different levels of elevation it should be set to `(-np.inf, np.inf)`

|

||||

|

||||

We could also set `terminate_when_unhealthy=False` to disable termination altogether, which is not desirable in the case of `Go1`.

|

||||

|

||||

```py

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(0.195, 0.75), # set to avoid sampling steps where the robot has fallen or jumped too high

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

```

|

||||

|

||||

Note: If you need a different termination condition, you can write your own `TerminationWrapper` (see the [documentation](https://gymnasium.farama.org/main/api/wrappers/)).

|

||||

|

||||

|

||||

|

||||

Step 2.3 - Tweaking the Environment Reward Parameters

|

||||

-------------------------

|

||||

The arguments of interest are `forward_reward_weight`, `ctrl_cost_weight`, `contact_cost_weight`, `healthy_reward`, and `main_body`.

|

||||

|

||||

For the arguments `forward_reward_weight`, `ctrl_cost_weight`, `contact_cost_weight` and `healthy_reward` we have to pick values that make sense for our robot, you can use the default `MuJoCo/Ant` parameters for references and tweak them if a change is needed for your environment. In the case of `Go1` we only change the `ctrl_cost_weight` since it has a higher actuator force range.

|

||||

|

||||

For the argument `main_body` we have to choose which body part is the main body (usually called something like "torso" or "trunk" in the model file) for the calculation of the `forward_reward`, in the case of `Go1` it is the `"trunk"` (Note: in most cases including this one, it can be left at the default value).

|

||||

|

||||

```py

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=1, # kept the same as the 'Ant' environment

|

||||

ctrl_cost_weight=0.05, # changed because of the stronger motors of `Go1`

|

||||

contact_cost_weight=5e-4, # kept the same as the 'Ant' environment

|

||||

healthy_reward=1, # kept the same as the 'Ant' environment

|

||||

main_body=1, # represents the "trunk" of the `Go1` robot

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

```

|

||||

|

||||

Note: If you need a different reward function, you can write your own `RewardWrapper` (see the [documentation](https://gymnasium.farama.org/main/api/wrappers/reward_wrappers/)).

|

||||

|

||||

|

||||

|

||||

Step 2.4 - Tweaking the Environment Observation Parameters

|

||||

-------------------------

|

||||

The arguments of interest are `include_cfrc_ext_in_observation` and `exclude_current_positions_from_observation`.

|

||||

|

||||

Here for `Go1` we have no particular reason to change them.

|

||||

|

||||

```py

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=1,

|

||||

ctrl_cost_weight=0.05,

|

||||

contact_cost_weight=5e-4,

|

||||

healthy_reward=1,

|

||||

main_body=1,

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True, # kept the game as the 'Ant' environment

|

||||

exclude_current_positions_from_observation=False, # kept the game as the 'Ant' environment

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

Note: If you need additional observation elements (such as additional sensors), you can write your own `ObservationWrapper` (see the [documentation](https://gymnasium.farama.org/main/api/wrappers/observation_wrappers/)).

|

||||

|

||||

|

||||

|

||||

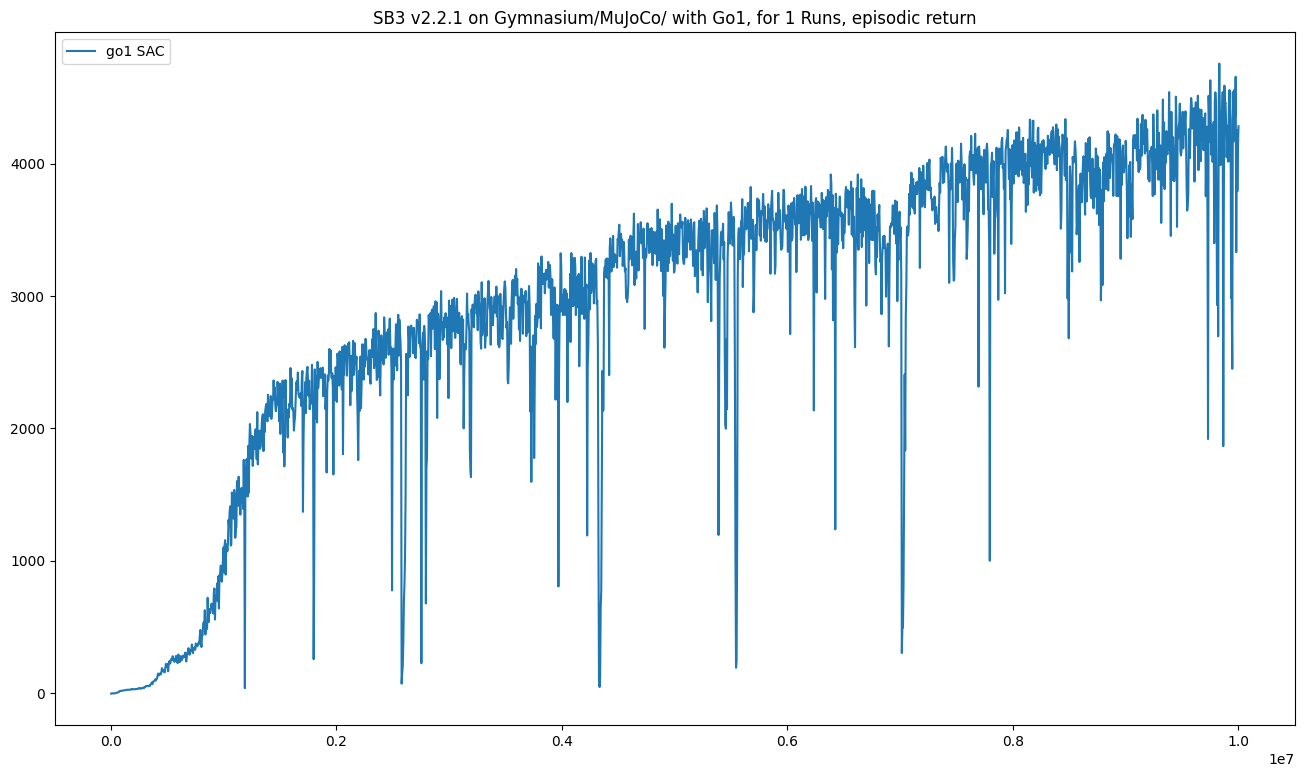

Step 3 - Train your Agent

|

||||

-------------------------

|

||||

Finally, we are done, we can use a RL algorithm to train an agent to walk/run the `Go1` robot.

|

||||

Note: If you have followed this guide with your own robot model, you may discover during training that some environment parameters were not as desired, feel free to go back to step 2 and change anything as needed.

|

||||

|

||||

```py

|

||||

import gymnasium

|

||||

|

||||

env = gymnasium.make(

|

||||

'Ant-v5',

|

||||

xml_file='./mujoco_menagerie/unitree_go1/scene.xml',

|

||||

forward_reward_weight=1,

|

||||

ctrl_cost_weight=0.05,

|

||||

contact_cost_weight=5e-4,

|

||||

healthy_reward=1,

|

||||

main_body=1,

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

... # run your RL algorithm

|

||||

```

|

||||

|

||||

|

||||

<iframe id="odysee-iframe" style="width:100%; aspect-ratio:16 / 9;" src="https://odysee.com/$/embed/@Kallinteris-Andreas:7/video0-step-0-to-step-1000:1?r=6fn5jA9uZQUZXGKVpwtqjz1eyJcS3hj3" allowfullscreen></iframe>

|

||||

<!--

|

||||

Which can run up to `4.7 m/s` according to the manufacturer

|

||||

-->

|

||||

|

||||

|

||||

Epilogue

|

||||

-------------------------

|

||||

You can follow this guide to create most quadruped environments.

|

||||

To create humanoid/bipedal robots, you can also follow this guide using the `Gymnasium/MuJoCo/Humnaoid-v5` framework.

|

||||

|

||||

Author: [@kallinteris-andreas](https://github.com/Kallinteris-Andreas)

|

||||

291

docs/tutorials/gymnasium_basics/load_quadruped_model.py

Normal file

291

docs/tutorials/gymnasium_basics/load_quadruped_model.py

Normal file

@@ -0,0 +1,291 @@

|

||||

"""

|

||||

Load custom quadruped robot environments

|

||||

========================================

|

||||

|

||||

In this tutorial we will see how to use the `MuJoCo/Ant-v5` framework to create a quadruped walking environment,

|

||||

using a model file (ending in `.xml`) without having to create a new class.

|

||||

|

||||

Steps:

|

||||

|

||||

0. Get your **MJCF** (or **URDF**) model file of your robot.

|

||||

- Create your own model (see the MuJoCo Guide) or,

|

||||

- Find a ready-made model (in this tutorial, we will use a model from the MuJoCo Menagerie collection).

|

||||

1. Load the model with the `xml_file` argument.

|

||||

2. Tweak the environment parameters to get the desired behavior.

|

||||

1. Tweak the environment simulation parameters.

|

||||

2. Tweak the environment termination parameters.

|

||||

3. Tweak the environment reward parameters.

|

||||

4. Tweak the environment observation parameters.

|

||||

3. Train an agent to move your robot.

|

||||

"""

|

||||

|

||||

# The reader is expected to be familiar with the `Gymnasium` API & library, the basics of robotics,

|

||||

# and the included `Gymnasium/MuJoCo` environments with the robot model they use.

|

||||

# Familiarity with the **MJCF** file model format and the `MuJoCo` simulator is not required but is recommended.

|

||||

|

||||

# %%

|

||||

# Setup

|

||||

# -----

|

||||

# We will need `gymnasium>=1.0.0`.

|

||||

|

||||

import numpy as np

|

||||

|

||||

import gymnasium as gym

|

||||

|

||||

|

||||

# Make sure Gymnasium is properly installed

|

||||

# You can run this in your terminal:

|

||||

# pip install "gymnasium>=1.0.0"

|

||||

|

||||

# %%

|

||||

# Step 0.1 - Download a Robot Model

|

||||

# ---------------------------------

|

||||

# In this tutorial we will load the Unitree Go1 robot from the excellent MuJoCo Menagerie robot model collection.

|

||||

# Go1 is a quadruped robot, controlling it to move is a significant learning problem,

|

||||

# much harder than the `Gymnasium/MuJoCo/Ant` environment.

|

||||

#

|

||||

# Note: The original tutorial includes an image of the Unitree Go1 robot in a flat terrain scene.

|

||||

# You can view this image at: https://github.com/google-deepmind/mujoco_menagerie/blob/main/unitree_go1/go1.png?raw=true

|

||||

|

||||

# You can download the whole MuJoCo Menagerie collection (which includes `Go1`):

|

||||

# git clone https://github.com/google-deepmind/mujoco_menagerie.git

|

||||

|

||||

# You can use any other quadruped robot with this tutorial, just adjust the environment parameter values for your robot.

|

||||

|

||||

# %%

|

||||

# Step 1 - Load the model

|

||||

# -----------------------

|

||||

# To load the model, all we have to do is use the `xml_file` argument with the `Ant-v5` framework.

|

||||

|

||||

# Basic loading (uncomment to use)

|

||||

# env = gym.make('Ant-v5', xml_file='./mujoco_menagerie/unitree_go1/scene.xml')

|

||||

|

||||

# Although this is enough to load the model, we will need to tweak some environment parameters

|

||||

# to get the desired behavior for our environment, so we will also explicitly set the simulation,

|

||||

# termination, reward and observation arguments, which we will tweak in the next step.

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(0, np.inf),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0,

|

||||

frame_skip=1,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

|

||||

# %%

|

||||

# Step 2 - Tweaking the Environment Parameters

|

||||

# --------------------------------------------

|

||||

# Tweaking the environment parameters is essential to get the desired behavior for learning.

|

||||

# In the following subsections, the reader is encouraged to consult the documentation of

|

||||

# the arguments for more detailed information.

|

||||

|

||||

# %%

|

||||

# Step 2.1 - Tweaking the Environment Simulation Parameters

|

||||

# ---------------------------------------------------------

|

||||

# The arguments of interest are `frame_skip`, `reset_noise_scale` and `max_episode_steps`.

|

||||

|

||||

# We want to tweak the `frame_skip` parameter to get `dt` to an acceptable value

|

||||

# (typical values are `dt` ∈ [0.01, 0.1] seconds),

|

||||

|

||||

# Reminder: dt = frame_skip × model.opt.timestep, where `model.opt.timestep` is the integrator

|

||||

# time step selected in the MJCF model file.

|

||||

|

||||

# The `Go1` model we are using has an integrator timestep of `0.002`, so by selecting

|

||||

# `frame_skip=25` we can set the value of `dt` to `0.05s`.

|

||||

|

||||

# To avoid overfitting the policy, `reset_noise_scale` should be set to a value appropriate

|

||||

# to the size of the robot, we want the value to be as large as possible without the initial

|

||||

# distribution of states being invalid (`Terminal` regardless of control actions),

|

||||

# for `Go1` we choose a value of `0.1`.

|

||||

|

||||

# And `max_episode_steps` determines the number of steps per episode before `truncation`,

|

||||

# here we set it to 1000 to be consistent with the based `Gymnasium/MuJoCo` environments,

|

||||

# but if you need something higher you can set it so.

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(0, np.inf),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1, # set to avoid policy overfitting

|

||||

frame_skip=25, # set dt=0.05

|

||||

max_episode_steps=1000, # kept at 1000

|

||||

)

|

||||

|

||||

# %%

|

||||

# Step 2.2 - Tweaking the Environment Termination Parameters

|

||||

# ----------------------------------------------------------

|

||||

# Termination is important for robot environments to avoid sampling "useless" time steps.

|

||||

|

||||

# The arguments of interest are `terminate_when_unhealthy` and `healthy_z_range`.

|

||||

|

||||

# We want to set `healthy_z_range` to terminate the environment when the robot falls over,

|

||||

# or jumps really high, here we have to choose a value that is logical for the height of the robot,

|

||||

# for `Go1` we choose `(0.195, 0.75)`.

|

||||

# Note: `healthy_z_range` checks the absolute value of the height of the robot,

|

||||

# so if your scene contains different levels of elevation it should be set to `(-np.inf, np.inf)`

|

||||

|

||||

# We could also set `terminate_when_unhealthy=False` to disable termination altogether,

|

||||

# which is not desirable in the case of `Go1`.

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=0,

|

||||

ctrl_cost_weight=0,

|

||||

contact_cost_weight=0,

|

||||

healthy_reward=0,

|

||||

main_body=1,

|

||||

healthy_z_range=(

|

||||

0.195,

|

||||

0.75,

|

||||

), # set to avoid sampling steps where the robot has fallen or jumped too high

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

|

||||

# Note: If you need a different termination condition, you can write your own `TerminationWrapper`

|

||||

# (see the documentation).

|

||||

|

||||

# %%

|

||||

# Step 2.3 - Tweaking the Environment Reward Parameters

|

||||

# -----------------------------------------------------

|

||||

# The arguments of interest are `forward_reward_weight`, `ctrl_cost_weight`, `contact_cost_weight`,

|

||||

# `healthy_reward`, and `main_body`.

|

||||

|

||||

# For the arguments `forward_reward_weight`, `ctrl_cost_weight`, `contact_cost_weight` and `healthy_reward`

|

||||

# we have to pick values that make sense for our robot, you can use the default `MuJoCo/Ant`

|

||||

# parameters for references and tweak them if a change is needed for your environment.

|

||||

# In the case of `Go1` we only change the `ctrl_cost_weight` since it has a higher actuator force range.

|

||||

|

||||

# For the argument `main_body` we have to choose which body part is the main body

|

||||

# (usually called something like "torso" or "trunk" in the model file) for the calculation

|

||||

# of the `forward_reward`, in the case of `Go1` it is the `"trunk"`

|

||||

# (Note: in most cases including this one, it can be left at the default value).

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=1, # kept the same as the 'Ant' environment

|

||||

ctrl_cost_weight=0.05, # changed because of the stronger motors of `Go1`

|

||||

contact_cost_weight=5e-4, # kept the same as the 'Ant' environment

|

||||

healthy_reward=1, # kept the same as the 'Ant' environment

|

||||

main_body=1, # represents the "trunk" of the `Go1` robot

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

|

||||

# Note: If you need a different reward function, you can write your own `RewardWrapper`

|

||||

# (see the documentation).

|

||||

|

||||

# %%

|

||||

# Step 2.4 - Tweaking the Environment Observation Parameters

|

||||

# ----------------------------------------------------------

|

||||

# The arguments of interest are `include_cfrc_ext_in_observation` and

|

||||

# `exclude_current_positions_from_observation`.

|

||||

|

||||

# Here for `Go1` we have no particular reason to change them.

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=1,

|

||||

ctrl_cost_weight=0.05,

|

||||

contact_cost_weight=5e-4,

|

||||

healthy_reward=1,

|

||||

main_body=1,

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True, # kept the same as the 'Ant' environment

|

||||

exclude_current_positions_from_observation=False, # kept the same as the 'Ant' environment

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

)

|

||||

|

||||

|

||||

# Note: If you need additional observation elements (such as additional sensors),

|

||||

# you can write your own `ObservationWrapper` (see the documentation).

|

||||

|

||||

# %%

|

||||

# Step 3 - Train your Agent

|

||||

# -------------------------

|

||||

# Finally, we are done, we can use a RL algorithm to train an agent to walk/run the `Go1` robot.

|

||||

# Note: If you have followed this guide with your own robot model, you may discover

|

||||

# during training that some environment parameters were not as desired,

|

||||

# feel free to go back to step 2 and change anything as needed.

|

||||

|

||||

|

||||

def main():

|

||||

"""Run the final Go1 environment setup."""

|

||||

# Note: The original tutorial includes an image showing the Go1 robot in the environment.

|

||||

# The image is available at: https://github.com/Kallinteris-Andreas/Gymnasium-kalli/assets/30759571/bf1797a3-264d-47de-b14c-e3c16072f695

|

||||

|

||||

env = gym.make(

|

||||

"Ant-v5",

|

||||

xml_file="./mujoco_menagerie/unitree_go1/scene.xml",

|

||||

forward_reward_weight=1,

|

||||

ctrl_cost_weight=0.05,

|

||||

contact_cost_weight=5e-4,

|

||||

healthy_reward=1,

|

||||

main_body=1,

|

||||

healthy_z_range=(0.195, 0.75),

|

||||

include_cfrc_ext_in_observation=True,

|

||||

exclude_current_positions_from_observation=False,

|

||||

reset_noise_scale=0.1,

|

||||

frame_skip=25,

|

||||

max_episode_steps=1000,

|

||||

render_mode="rgb_array", # Change to "human" to visualize

|

||||

)

|

||||

|

||||

# Example of running the environment for a few steps

|

||||

obs, info = env.reset()

|

||||

|

||||

for _ in range(100):

|

||||

action = env.action_space.sample() # Replace with your agent's action

|

||||

obs, reward, terminated, truncated, info = env.step(action)

|

||||

|

||||

if terminated or truncated:

|

||||

obs, info = env.reset()

|

||||

|

||||

env.close()

|

||||

print("Environment tested successfully!")

|

||||

|

||||

# Now you would typically:

|

||||

# 1. Set up your RL algorithm

|

||||

# 2. Train the agent

|

||||

# 3. Evaluate the agent's performance

|

||||

|

||||

|

||||

# %%

|

||||

# Epilogue

|

||||

# -------------------------

|

||||

# You can follow this guide to create most quadruped environments.

|

||||

# To create humanoid/bipedal robots, you can also follow this guide using the `Gymnasium/MuJoCo/Humnaoid-v5` framework.

|

||||

#

|

||||

# Note: The original tutorial includes a video demonstration of the trained Go1 robot walking.

|

||||

# The video shows the robot achieving a speed of up to 4.7 m/s according to the manufacturer.

|

||||

# In the original tutorial, this video is embedded from:

|

||||

# https://odysee.com/$/embed/@Kallinteris-Andreas:7/video0-step-0-to-step-1000:1?r=6fn5jA9uZQUZXGKVpwtqjz1eyJcS3hj3

|

||||

|

||||

# Author: @kallinteris-andreas (https://github.com/Kallinteris-Andreas)

|

||||

@@ -1,7 +1,10 @@

|

||||

Training Agents links in the Gymnasium Documentation

|

||||

-----------------------------------------------------

|

||||

Solving Blackjack with Q-Learning link: https://gymnasium.farama.org/tutorials/training_agents/blackjack_tutorial/

|

||||

Training Agents

|

||||

---------------

|

||||

|

||||

Frozen Lake Benchmark link: https://gymnasium.farama.org/tutorials/training_agents/FrozenLake_tuto/

|

||||

.. toctree::

|

||||

:hidden:

|

||||

|

||||

Training using REINFORCE for Mujoco link: https://gymnasium.farama.org/tutorials/training_agents/reinforce_invpend_gym_v26/

|

||||

blackjack_q_learning

|

||||

frozenlake_q_learning

|

||||

mujoco_reinforce

|

||||

vector_a2c

|

||||

|

||||

@@ -275,7 +275,7 @@ agent = BlackjackAgent(

|

||||

#

|

||||

|

||||

|

||||

env = gym.wrappers.RecordEpisodeStatistics(env, deque_size=n_episodes)

|

||||

env = gym.wrappers.RecordEpisodeStatistics(env, buffer_length=n_episodes)

|

||||

for episode in tqdm(range(n_episodes)):

|

||||

obs, info = env.reset()

|

||||

done = False

|

||||

@@ -10,14 +10,6 @@ Frozenlake benchmark

|

||||

# environment from the reinforcement learning

|

||||

# `Gymnasium <https://gymnasium.farama.org/>`__ package using the

|

||||

# Q-learning algorithm.

|

||||

#

|

||||

|

||||

|

||||

# %%

|

||||

# Dependencies

|

||||

# ------------

|

||||

#

|

||||

|

||||

|

||||

# %%

|

||||

# Let's first import a few dependencies we'll need.

|

||||

@@ -26,8 +18,6 @@ Frozenlake benchmark

|

||||

# Author: Andrea Pierré

|

||||

# License: MIT License

|

||||

|

||||

|

||||

from pathlib import Path

|

||||

from typing import NamedTuple

|

||||

|

||||

import matplotlib.pyplot as plt

|

||||

@@ -63,7 +53,6 @@ class Params(NamedTuple):

|

||||

action_size: int # Number of possible actions

|

||||

state_size: int # Number of possible states

|

||||

proba_frozen: float # Probability that a tile is frozen

|

||||

savefig_folder: Path # Root folder where plots are saved

|

||||

|

||||

|

||||

params = Params(

|

||||

@@ -78,17 +67,12 @@ params = Params(

|

||||

action_size=None,

|

||||

state_size=None,

|

||||

proba_frozen=0.9,

|

||||

savefig_folder=Path("../../_static/img/tutorials/"),

|

||||

)

|

||||

params

|

||||

|

||||

# Set the seed

|

||||

rng = np.random.default_rng(params.seed)

|

||||

|

||||

# Create the figure folder if it doesn't exist

|

||||

params.savefig_folder.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

|

||||

# %%

|

||||

# The FrozenLake environment

|

||||

# --------------------------

|

||||

@@ -333,8 +317,6 @@ def plot_q_values_map(qtable, env, map_size):

|

||||

spine.set_visible(True)

|

||||

spine.set_linewidth(0.7)

|

||||

spine.set_color("black")

|

||||

img_title = f"frozenlake_q_values_{map_size}x{map_size}.png"

|

||||

fig.savefig(params.savefig_folder / img_title, bbox_inches="tight")

|

||||

plt.show()

|

||||

|

||||

|

||||

@@ -355,8 +337,6 @@ def plot_states_actions_distribution(states, actions, map_size):

|

||||

ax[1].set_xticks(list(labels.values()), labels=labels.keys())

|

||||

ax[1].set_title("Actions")

|

||||

fig.tight_layout()

|

||||

img_title = f"frozenlake_states_actions_distrib_{map_size}x{map_size}.png"

|

||||

fig.savefig(params.savefig_folder / img_title, bbox_inches="tight")

|

||||

plt.show()

|

||||

|

||||

|

||||

@@ -505,8 +485,6 @@ def plot_steps_and_rewards(rewards_df, steps_df):

|

||||

for axi in ax:

|

||||

axi.legend(title="map size")

|

||||

fig.tight_layout()

|

||||

img_title = "frozenlake_steps_and_rewards.png"

|

||||

fig.savefig(params.savefig_folder / img_title, bbox_inches="tight")

|

||||

plt.show()

|

||||

|

||||

|

||||

@@ -3,7 +3,7 @@

|

||||

Training using REINFORCE for Mujoco

|

||||

===================================

|

||||

|

||||

.. image:: /_static/img/tutorials/reinforce_invpend_gym_v26_fig1.gif

|

||||

.. image:: /_static/img/tutorials/mujoco_reinforce_fig1.gif

|

||||

:width: 400

|

||||

:alt: agent-environment-diagram

|

||||

|

||||

@@ -62,7 +62,7 @@ plt.rcParams["figure.figsize"] = (10, 5)

|

||||

# Policy Network

|

||||

# ~~~~~~~~~~~~~~

|

||||

#

|

||||

# .. image:: /_static/img/tutorials/reinforce_invpend_gym_v26_fig2.png

|

||||

# .. image:: /_static/img/tutorials/mujoco_reinforce_fig2.png

|

||||

#

|

||||

# We start by building a policy that the agent will learn using REINFORCE.

|

||||

# A policy is a mapping from the current environment observation to a probability distribution of the actions to be taken.

|

||||

@@ -131,7 +131,7 @@ class Policy_Network(nn.Module):

|

||||

# Building an agent

|

||||

# ~~~~~~~~~~~~~~~~~

|

||||

#

|

||||

# .. image:: /_static/img/tutorials/reinforce_invpend_gym_v26_fig3.jpeg

|

||||

# .. image:: /_static/img/tutorials/mujoco_reinforce_fig3.jpeg

|

||||

#

|

||||

# Now that we are done building the policy, let us develop **REINFORCE** which gives life to the policy network.

|

||||

# The algorithm of REINFORCE could be found above. As mentioned before, REINFORCE aims to maximize the Monte-Carlo returns.

|

||||

@@ -278,6 +278,7 @@ for seed in [1, 2, 3, 5, 8]: # Fibonacci seeds

|

||||

# End the episode when either truncated or terminated is true

|

||||

# - truncated: The episode duration reaches max number of timesteps

|

||||

# - terminated: Any of the state space values is no longer finite.

|

||||

#

|

||||

done = terminated or truncated

|

||||

|

||||

reward_over_episodes.append(wrapped_env.return_queue[-1])

|

||||

@@ -304,7 +305,7 @@ sns.lineplot(x="episodes", y="reward", data=df1).set(

|

||||

plt.show()

|

||||

|

||||

# %%

|

||||

# .. image:: /_static/img/tutorials/reinforce_invpend_gym_v26_fig4.png

|

||||

# .. image:: /_static/img/tutorials/mujoco_reinforce_fig4.png

|

||||

#

|

||||

# Author: Siddarth Chandrasekar

|

||||

#

|

||||

@@ -180,7 +180,7 @@ class A2C(nn.Module):

|

||||

actions = action_pd.sample()

|

||||

action_log_probs = action_pd.log_prob(actions)

|

||||

entropy = action_pd.entropy()

|

||||

return (actions, action_log_probs, state_values, entropy)

|

||||

return actions, action_log_probs, state_values, entropy

|

||||

|

||||

def get_losses(

|

||||

self,

|

||||

@@ -268,7 +268,7 @@ class A2C(nn.Module):

|

||||

# The simplest way to create vector environments is by calling `gym.vector.make`, which creates multiple instances of the same environment:

|

||||

#

|

||||

|

||||

envs = gym.vector.make("LunarLander-v3", num_envs=3, max_episode_steps=600)

|

||||

envs = gym.make_vec("LunarLander-v3", num_envs=3, max_episode_steps=600)

|

||||

|

||||

|

||||

# %%

|

||||

@@ -281,7 +281,7 @@ envs = gym.vector.make("LunarLander-v3", num_envs=3, max_episode_steps=600)

|

||||

# Manually setting up 3 parallel 'LunarLander-v3' envs with different parameters:

|

||||

|

||||

|

||||

envs = gym.vector.AsyncVectorEnv(

|

||||

envs = gym.vector.SyncVectorEnv(

|

||||

[

|

||||

lambda: gym.make(

|

||||

"LunarLander-v3",

|

||||

@@ -314,7 +314,7 @@ envs = gym.vector.AsyncVectorEnv(

|

||||

#

|

||||

|

||||

|

||||

envs = gym.vector.AsyncVectorEnv(

|

||||

envs = gym.vector.SyncVectorEnv(

|

||||

[

|

||||

lambda: gym.make(

|

||||

"LunarLander-v3",

|

||||

@@ -393,7 +393,7 @@ if randomize_domain:

|

||||

)

|

||||

|

||||

else:

|

||||

envs = gym.vector.make("LunarLander-v3", num_envs=n_envs, max_episode_steps=600)

|

||||

envs = gym.make_vec("LunarLander-v3", num_envs=n_envs, max_episode_steps=600)

|

||||

|

||||

|

||||

obs_shape = envs.single_observation_space.shape[0]

|

||||

@@ -425,7 +425,9 @@ agent = A2C(obs_shape, action_shape, device, critic_lr, actor_lr, n_envs)

|

||||

#

|

||||

|

||||

# create a wrapper environment to save episode returns and episode lengths

|

||||

envs_wrapper = gym.wrappers.RecordEpisodeStatistics(envs, deque_size=n_envs * n_updates)

|

||||

envs_wrapper = gym.wrappers.vector.RecordEpisodeStatistics(

|

||||

envs, buffer_length=n_envs * n_updates

|

||||

)

|

||||

|

||||

critic_losses = []

|

||||

actor_losses = []

|

||||

Reference in New Issue

Block a user