Compare commits

216 Commits

v0.6.0 ... v0.7.0-alp

| SHA1 |
|---|
| be5f2ef9b9 |
| adfcb79387 |
| 73c4c0ac5f |
| 6fc601f696 |
| 07111fb7bb |
| a06d2170b0 |
| 7f53ea3bf3 |
| b2accd1c2a |
| 8ef8a8dea7 |
| e929404676 |
| c2258bedae |
| 215fdbb7ed |
| ee998f6882 |
| 826e95afca |
| 47583d48e7 |
| e759cdf061 |
| 88503c2a09 |
| d5be23dffe |
| 80c01dc085 |
| 45b2549fa9 |
| c7ce454188 |
| 7059ea42d6 |
| 8ea1c29c9b |
| 33bbfdbc9b |
| 5de54f8853 |
| a1ac41218a |
| 55fc647568 |
| e83e898eed |
| eb07e4588b |
| 563f834c96 |
| 183178681d |
| 8dba53e494 |
| e4782b19a3 |
| ec86b1dffa |
| 6cb8266c7b |
| 9c50302a39 |
| 3313c69898 |
| 530c6ca7ec |
| 07ed2fb523 |
| d9ec380a15 |
| b60eb3a899 |
| b4df69791b |
| c21b8a22b9 |
| 475a76e656 |
| 7ba5d5ef86 |
| 737dc1ddde |
| 164bf19b36 |
| 25976771d9 |
| f2198c2e9a |
| eec19c6d2c |
| 30e03feb5f |
| 58cd3bde9f |
| 662bfb7b88 |
| 5f3e3a17d3 |
| feba2d9975 |
| e3e3a1c457 |
| 90628f3c8d |
| f6bcadb79d |
| d4ac16773c |
| 96f044d2bf |
| f31868b913 |
| 73b0ff5b55 |
| 64cf69045a |
| e57dae0f31 |
| 6386e7d5cf |
| 4bad103da9 |
| 30a26adb7c |
| 8be4adfc0a |
| fed4cc3965 |
| 7d1e074683 |
| 00516e50a1 |
| e83d76fbd9 |
| 304f152315 |
| 3a82ebf7fd |
| 0253d34467 |
| 9209f9acde |
| 3dbbb398df |
| 17e8ad110f |
| 5e91d31ed3 |
| fad9d20820 |
| fe9a1c8580 |
| cd6d7d5198 |
| 771478bc68 |
| c4a59896f8 |
| 3eb1608403 |
| 8fde70d4dc |
| 5a047833ed |
| f6c28e6be1 |
| 0ebf10d19d |
| d3005d3ef3 |
| effcef2184 |
| 89fc0ad7a9 |
| 410272ee1d |
| 1c97bf50b6 |
| 4ecd2c9d0b |
| e592243a09 |
| 2f4a92e352 |
| ceafc29040 |
| b20efabfd2 |
| 85b6e7293c |
| 6aced927ad |
| 75997e6c08 |
| 9040d00110 |
| 8ebc5c6b07 |
| d4807790ff |
| 0de5e7a285 |
| c40000aeda |
| 31198bc105 |
| 92599acfca |
| f6e70779fe |
| 3017bde686 |
| 9d84ec4bb3 |
| 586141adb2 |
| 3f763f99e2 |
| 15c7f36ea3 |
| 04d1a083fa |
| 327ee1dae8 |
| 22885c3e64 |
| 94ededb54c |
| af6a07697a |
| 5f1d8c95eb |
| 7d9e032407 |
| bc918a5ad5 |
| ee54ce4727 |
| e85bf2f2d5 |
| a7460ffbd1 |
| 7fe1fd2f95 |
| d30670e92e |
| 9b202c6e1e |
| 87946eafd5 |
| 7575d3c726 |
| 8b9713a934 |
| ec713c18c4 |
| c24b0a1a3f |
| 34e0cb0092 |
| 7b7c7cba21 |
| c45343dd30 |
| b7f6603c1f |
| 2d3b052dea |
| dcb6234771 |
| e44d423e83 |
| 5435bb734c |
| 13f59adf61 |
| 0fce3368d3 |
| 1ee5c81267 |
| 3bb9d5eb50 |
| efb23f7cf9 |
| 013f4674de |
| 6966b25d9c |
| d513f56c8c |
| 7aa05618a3 |
| cdfbbe5e60 |
| fe7d1cb81c |
| c2a9395a4b |
| 586279bcfc |
| 8bd10e7c4c |
| 928e6165bc |
| 77c9e801aa |
| c78132417f |
| 849928887e |
| ba1163d49f |
| 6f9c89af39 |
| 246b8b1242 |
| f0db68cb75 |
| f0d1fdfb46 |
| 3b8b2e030a |
| b4fee677a5 |
| fe706583f9 |
| d0e0c17ece |
| 5aaa38bcaf |
| 6ff9b27f8e |
| 3f4e035506 |
| 57d9fbb927 |
| ee44e51b30 |
| 5011f24123 |
| d1eda334f3 |
| 2ae5ce9f2c |
| 4f5ac78b7e |
| 074c9af020 |
| 2da2d4e365 |
| 8eb76ab2a5 |
| a710d95243 |
| a06535d7ed |
| f511ac9be7 |
| e28ad2177e |
| cb16fe84cd |
| ec3569aa39 |
| 246edecf53 |
| 34834c5af9 |
| b845245614 |
| 5711fb9969 |
| d1eaecde9a |
| 00c8505d1e |
| 33f01efe69 |
| 377d312c81 |
| badf5d5412 |
| 0339f90b40 |
| 5455e8e6a9 |
| 6843b71a0d |
| 634408b5e8 |
| d053f78b74 |
| 93b6fceb2f |
| ac7860c35d |
| b0eab8729f |
| cb81f80b31 |
| ea97529185 |
| f1075191fe |
| 74c479fbc9 |
| 7e788d3a17 |
| 69b3c75f0d |
| b2c2fa40a2 |
| 50458d9524 |
| 9679e3e356 |
| 6db9f92b8a |
| 4a44498d45 |
| 216510c573 |

@@ -1,2 +1,5 @@

ignore:

- "src/bin"

coverage:

status:

patch: off

32 Cargo.toml

@@ -1,9 +1,10 @@

[package]

name = "solana"

description = "Blockchain Rebuilt for Scale"

version = "0.6.0"

description = "Blockchain, Rebuilt for Scale"

version = "0.7.0-alpha"

documentation = "https://docs.rs/solana"

homepage = "http://solana.com/"

readme = "README.md"

repository = "https://github.com/solana-labs/solana"

authors = [

"Anatoly Yakovenko <anatoly@solana.com>",

@@ -40,6 +41,10 @@ path = "src/bin/mint.rs"

name = "solana-mint-demo"

path = "src/bin/mint-demo.rs"

[[bin]]

name = "solana-drone"

path = "src/bin/drone.rs"

[badges]

codecov = { repository = "solana-labs/solana", branch = "master", service = "github" }

@@ -52,7 +57,7 @@ erasure = []

[dependencies]

rayon = "1.0.0"

sha2 = "0.7.0"

generic-array = { version = "0.9.0", default-features = false, features = ["serde"] }

generic-array = { version = "0.11.1", default-features = false, features = ["serde"] }

serde = "1.0.27"

serde_derive = "1.0.27"

serde_json = "1.0.10"

@@ -60,12 +65,15 @@ ring = "0.12.1"

untrusted = "0.5.1"

bincode = "1.0.0"

chrono = { version = "0.4.0", features = ["serde"] }

log = "^0.4.1"

env_logger = "^0.4.1"

matches = "^0.1.6"

byteorder = "^1.2.1"

libc = "^0.2.1"

getopts = "^0.2"

isatty = "0.1"

rand = "0.4.2"

pnet = "^0.21.0"

log = "0.4.2"

env_logger = "0.5.10"

matches = "0.1.6"

byteorder = "1.2.1"

libc = "0.2.1"

getopts = "0.2"

atty = "0.2"

rand = "0.5.1"

pnet_datalink = "0.21.0"

tokio = "0.1"

tokio-codec = "0.1"

tokio-io = "0.1"

2 LICENSE

@@ -1,4 +1,4 @@

Copyright 2018 Anatoly Yakovenko, Greg Fitzgerald and Stephen Akridge

Copyright 2018 Solana Labs, Inc.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

123 README.md

@@ -1,19 +1,19 @@

[](https://crates.io/crates/solana)

[](https://docs.rs/solana)

[](https://buildkite.com/solana-labs/solana)

[](https://solana-ci-gate.herokuapp.com/buildkite_public_log?https://buildkite.com/solana-labs/solana/builds/latest/master)

[](https://codecov.io/gh/solana-labs/solana)

Blockchain, Rebuilt for Scale

===

Solana™ is a new blockchain architecture built from the ground up for scale. The architecture supports

up to 710 thousand transactions per second on a gigabit network.

Disclaimer

===

All claims, content, designs, algorithms, estimates, roadmaps, specifications, and performance measurements described in this project are done with the author's best effort. It is up to the reader to check and validate their accuracy and truthfulness. Furthermore nothing in this project constitutes a solicitation for investment.

Solana: Blockchain Rebuilt for Scale

===

Solana™ is a new blockchain architecture built from the ground up for scale. The architecture supports

up to 710 thousand transactions per second on a gigabit network.

Introduction

===
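The "710 thousand transactions per second on a gigabit network" figure in this README can be sanity-checked as a pure bandwidth bound. This check rests on an assumption that the diff does not state: each transaction occupies about 176 bytes on the wire, and a gigabit link carries 10^9 bits per second.

$$
\frac{10^{9}\ \text{bit/s}}{176\ \text{B/tx} \times 8\ \text{bit/B}} = \frac{10^{9}}{1408}\ \text{tx/s} \approx 710{,}227\ \text{tx/s}
$$

Under that assumption, the figure is an upper limit set by link bandwidth alone. It ignores protocol overhead and signature-verification cost.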

@@ -58,50 +58,39 @@ your odds of success if you check out the

before proceeding:

```bash
$ git checkout v0.6.0
$ git checkout v0.6.1
```

Configuration Setup

---

The network is initialized with a genesis ledger and leader/validator configuration files.

These files can be generated by running the following script.

```bash
$ ./multinode-demo/setup.sh
```

Singlenode Testnet

---

The fullnode server is initialized with a ledger from stdin and

generates new ledger entries on stdout. To create the input ledger, we'll need

to create *the mint* and use it to generate a *genesis ledger*. It's done in

two steps because the mint-demo.json file contains private keys that will be

used later in this demo.

```bash
$ echo 1000000000 | cargo run --release --bin solana-mint-demo > mint-demo.json
$ cat mint-demo.json | cargo run --release --bin solana-genesis-demo > genesis.log
```

Before you start a fullnode, make sure you know the IP address of the machine you

want to be the leader for the demo, and make sure that udp ports 8000-10000 are

open on all the machines you want to test with.

Generate a leader configuration file with:

```bash
cargo run --release --bin solana-fullnode-config > leader.json
```

Now start the server:

```bash
$ cat ./multinode-demo/leader.sh
#!/bin/bash
export RUST_LOG=solana=info
sudo sysctl -w net.core.rmem_max=26214400
cargo run --release --bin solana-fullnode -- -l leader.json < genesis.log
$ ./multinode-demo/leader.sh > leader-txs.log
$ ./multinode-demo/leader.sh
```

To run a performance-enhanced fullnode on Linux, download `libcuda_verify_ed25519.a`. Enable

it by adding `--features=cuda` to the line that runs `solana-fullnode` in `leader.sh`.

it by adding `--features=cuda` to the line that runs `solana-fullnode` in

`leader.sh`. [CUDA 9.2](https://developer.nvidia.com/cuda-downloads) must be installed on your system.

```bash
$ wget https://solana-build-artifacts.s3.amazonaws.com/v0.6.0/libcuda_verify_ed25519.a
cargo run --release --features=cuda --bin solana-fullnode -- -l leader.json < genesis.log
$ ./fetch-perf-libs.sh
$ cargo run --release --features=cuda --bin solana-fullnode -- -l leader.json < genesis.log
```

Wait a few seconds for the server to initialize. It will print "Ready." when it's ready to

@@ -113,22 +102,14 @@ Multinode Testnet

To run a multinode testnet, after starting a leader node, spin up some validator nodes:

```bash
$ cat ./multinode-demo/validator.sh
#!/bin/bash
rsync -v -e ssh $1/mint-demo.json .
rsync -v -e ssh $1/leader.json .
rsync -v -e ssh $1/genesis.log .
export RUST_LOG=solana=info
sudo sysctl -w net.core.rmem_max=26214400
cargo run --release --bin solana-fullnode -- -l validator.json -v leader.json -b 9000 -d < genesis.log
$ ./multinode-demo/validator.sh ubuntu@10.0.1.51:~/solana > validator-txs.log #The leader machine
$ ./multinode-demo/validator.sh ubuntu@10.0.1.51:~/solana #The leader machine
```

As with the leader node, you can run a performance-enhanced validator fullnode by adding

`--features=cuda` to the line that runs `solana-fullnode` in `validator.sh`.

```bash
cargo run --release --features=cuda --bin solana-fullnode -- -l validator.json -v leader.json -b 9000 -d < genesis.log
$ cargo run --release --features=cuda --bin solana-fullnode -- -l validator.json -v leader.json < genesis.log
```

@@ -139,13 +120,7 @@ Now that your singlenode or multinode testnet is up and running, in a separate s

the JSON configuration file here, not the genesis ledger.

```bash
$ cat ./multinode-demo/client.sh
#!/bin/bash
export RUST_LOG=solana=info
rsync -v -e ssh $1/leader.json .
rsync -v -e ssh $1/mint-demo.json .
cat mint-demo.json | cargo run --release --bin solana-client-demo -- -l leader.json
$ ./multinode-demo/client.sh ubuntu@10.0.1.51:~/solana #The leader machine
$ ./multinode-demo/client.sh ubuntu@10.0.1.51:~/solana 2 #The leader machine and the total number of nodes in the network
```

What just happened? The client demo spins up several threads to send 500,000 transactions

@@ -157,6 +132,26 @@ demo completes after it has convinced itself the testnet won't process any addit

transactions. You should see several TPS measurements printed to the screen. In the

multinode variation, you'll see TPS measurements for each validator node as well.

Linux Snap

---

A Linux [Snap](https://snapcraft.io/) is available, which can be used to

easily get Solana running on supported Linux systems without building anything

from source. The `edge` Snap channel is updated daily with the latest

development from the `master` branch. To install:

```bash
$ sudo snap install solana --edge --devmode
```

(`--devmode` flag is required only for `solana.fullnode-cuda`)

Once installed the usual Solana programs will be available as `solona.*` instead

of `solana-*`. For example, `solana.fullnode` instead of `solana-fullnode`.

Update to the latest version at any time with

```bash
$ snap info solana
$ sudo snap refresh solana --devmode
```

Developing

===

@@ -210,6 +205,17 @@ to see the debug and info sections for streamer and server respectively. General

we are using debug for infrequent debug messages, trace for potentially frequent messages and

info for performance-related logging.

Attaching to a running process with gdb

```
$ sudo gdb
attach <PID>
set logging on
thread apply all bt
```

This will dump all the threads stack traces into gdb.txt

Benchmarking

---

@@ -228,12 +234,21 @@ $ cargo +nightly bench --features="unstable"

Code coverage

---

To generate code coverage statistics, run kcov via Docker:

To generate code coverage statistics, install cargo-cov. Note: the tool currently only works

in Rust nightly.

```bash
$ ./ci/coverage.sh
$ cargo +nightly install cargo-cov
```

The coverage report will be written to `./target/cov/index.html`

Run cargo-cov and generate a report:

```bash
$ cargo +nightly cov test
$ cargo +nightly cov report --open
```

The coverage report will be written to `./target/cov/report/index.html`

Why coverage? While most see coverage as a code quality metric, we see it primarily as a developer

1 build.rs

@@ -11,5 +11,6 @@ fn main() {

}

if !env::var("CARGO_FEATURE_ERASURE").is_err() {

println!("cargo:rustc-link-lib=dylib=Jerasure");

println!("cargo:rustc-link-lib=dylib=gf_complete");

}

}
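The `build.rs` hunk links Jerasure and gf_complete only when the `erasure` feature is enabled. Cargo exposes each enabled feature to build scripts as a `CARGO_FEATURE_<NAME>` environment variable. The hunk's double negative, `!env::var(...).is_err()`, means the same as `env::var(...).is_ok()`.

The sketch below restates that check in positive form. The helper names `erasure_feature_enabled` and `erasure_link_directives` are hypothetical. Splitting the logic this way lets the directive list be checked without running a Cargo build.

```rust
use std::env;

/// True when Cargo enabled the `erasure` feature for this build script.
/// Same result as the hunk's `!env::var("CARGO_FEATURE_ERASURE").is_err()`.
fn erasure_feature_enabled() -> bool {
    env::var("CARGO_FEATURE_ERASURE").is_ok()
}

/// Link directives the erasure branch prints (hypothetical helper).
fn erasure_link_directives(enabled: bool) -> Vec<&'static str> {
    if enabled {
        vec![
            "cargo:rustc-link-lib=dylib=Jerasure",
            "cargo:rustc-link-lib=dylib=gf_complete",
        ]
    } else {
        Vec::new()
    }
}

/// What a build script's `main` could call to emit the directives.
fn emit_erasure_links() {
    for directive in erasure_link_directives(erasure_feature_enabled()) {
        println!("{directive}");
    }
}
```

Keeping the environment lookup separate from the directive list means only the first helper depends on Cargo's build-script environment.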

1 ci/.gitignore (vendored)

@@ -1,2 +1,3 @@

/node_modules/

/package-lock.json

/snapcraft.credentials

88 ci/README.md (Normal file)

@@ -0,0 +1,88 @@

Our CI infrastructure is built around [BuildKite](https://buildkite.com) with some

additional GitHub integration provided by https://github.com/mvines/ci-gate

## Agent Queues

We define two [Agent Queues](https://buildkite.com/docs/agent/v3/queues):

`queue=default` and `queue=cuda`. The `default` queue should be favored and

runs on lower-cost CPU instances. The `cuda` queue is only necessary for

running **tests** that depend on GPU (via CUDA) access -- CUDA builds may still

be run on the `default` queue, and the [buildkite artifact

system](https://buildkite.com/docs/builds/artifacts) used to transfer build

products over to a GPU instance for testing.

## Buildkite Agent Management

### Buildkite GCP Setup

CI runs on Google Cloud Platform via two Compute Engine Instance groups:

`ci-default` and `ci-cuda`. Autoscaling is currently disabled and the number of

VM Instances in each group is manually adjusted.

#### Updating a CI Disk Image

Each Instance group has its own disk image, `ci-default-vX` and

`ci-cuda-vY`, where *X* and *Y* are incremented each time the image is changed.

The process to update a disk image is as follows (TODO: make this less manual):

1. Create a new VM Instance using the disk image to modify.

2. Once the VM boots, ssh to it and modify the disk as desired.

3. Stop the VM Instance running the modified disk. Remember the name of the VM disk

4. From another machine, `gcloud auth login`, then create a new Disk Image based

off the modified VM Instance:

```
$ gcloud compute images create ci-default-v5 --source-disk xxx --source-disk-zone us-east1-b
```

or

```
$ gcloud compute images create ci-cuda-v5 --source-disk xxx --source-disk-zone us-east1-b
```

5. Delete the new VM instance.

6. Go to the Instance templates tab, find the existing template named

`ci-default-vX` or `ci-cuda-vY` and select it. Use the "Copy" button to create

a new Instance template called `ci-default-vX+1` or `ci-cuda-vY+1` with the

newly created Disk image.

7. Go to the Instance Groups tag and find the applicable group, `ci-default` or

`ci-cuda`. Edit the Instance Group in two steps: (a) Set the number of

instances to 0 and wait for them all to terminate, (b) Update the Instance

template and restore the number of instances to the original value.

8. Clean up the previous version by deleting it from Instance Templates and

Images.
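Steps 4 and 6 depend on the `-vX` suffix going up by exactly one for each new image and template. The sketch below shows that renaming rule. `next_image_name` is a hypothetical helper and is not part of the repository.

```rust
/// Hypothetical helper for the `<group>-v<N>` naming convention
/// (e.g. `ci-default-v5` -> `ci-default-v6`).
/// Returns `None` if the name has no numeric `-v` suffix.
fn next_image_name(current: &str) -> Option<String> {
    // Split at the last "-v" so the group prefix may itself contain dashes.
    let (prefix, version) = current.rsplit_once("-v")?;
    let n: u32 = version.parse().ok()?;
    Some(format!("{}-v{}", prefix, n + 1))
}
```

The returned name is what would be passed to `gcloud compute images create` in step 4 and used for the copied Instance template in step 6.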

## Reference

### Buildkite AWS CloudFormation Setup

**AWS CloudFormation is currently inactive, although it may be restored in the

future**

AWS CloudFormation can be used to scale machines up and down based on the

current CI load. If no machine is currently running it can take up to 60

seconds to spin up a new instance, please remain calm during this time.

#### AMI

We use a custom AWS AMI built via https://github.com/solana-labs/elastic-ci-stack-for-aws/tree/solana/cuda.

Use the following process to update this AMI as dependencies change:

```bash
$ export AWS_ACCESS_KEY_ID=my_access_key
$ export AWS_SECRET_ACCESS_KEY=my_secret_access_key
$ git clone https://github.com/solana-labs/elastic-ci-stack-for-aws.git -b solana/cuda
$ cd elastic-ci-stack-for-aws/
$ make build
$ make build-ami
```

Watch for the *"amazon-ebs: AMI:"* log message to extract the name of the new

AMI. For example:

```
amazon-ebs: AMI: ami-07118545e8b4ce6dc
```

The new AMI should also now be visible in your EC2 Dashboard. Go to the desired

AWS CloudFormation stack, update the **ImageId** field to the new AMI id, and

*apply* the stack changes.

@@ -1,16 +1,31 @@

steps:

- command: "ci/coverage.sh"

name: "coverage [public]"

- command: "ci/docker-run.sh rust ci/test-stable.sh"

name: "stable [public]"

- command: "ci/docker-run.sh rustlang/rust:nightly ci/test-nightly.sh || true"

name: "nightly - FAILURES IGNORED [public]"

- command: "ci/docker-run.sh rust ci/test-ignored.sh"

name: "ignored [public]"

- command: "ci/test-cuda.sh"

name: "cuda"

timeout_in_minutes: 20

- command: "ci/shellcheck.sh"

name: "shellcheck [public]"

timeout_in_minutes: 20

- wait

- command: "ci/publish.sh"

name: "publish release artifacts"

- command: "ci/docker-run.sh rustlang/rust:nightly ci/test-nightly.sh"

name: "nightly [public]"

timeout_in_minutes: 20

- command: "ci/test-stable-perf.sh"

name: "stable-perf [public]"

timeout_in_minutes: 20

retry:

automatic:

- exit_status: "*"

limit: 2

agents:

- "queue=cuda"

- command: "ci/snap.sh [public]"

timeout_in_minutes: 20

name: "snap [public]"

- wait

- command: "ci/publish-crate.sh [public]"

timeout_in_minutes: 20

name: "publish crate"

- command: "ci/hoover.sh [public]"

timeout_in_minutes: 20

name: "clean agent"
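The `retry: automatic` block appears to belong to the `stable-perf` step; indentation was lost in this view, so this is a reading, not a certainty. As we understand Buildkite's documented semantics:

- `limit` counts retries, not attempts.
- `exit_status: "*"` matches any exit status of a failed job.

So a step that keeps failing would run at most three times. The sketch below models that policy with a hypothetical `run_with_automatic_retry` helper; it is not Buildkite agent code.

```rust
/// Hypothetical model of `retry: automatic: - exit_status: "*"  limit: N`.
/// Any non-zero exit status triggers a retry, up to `limit` retries
/// (at most `limit + 1` attempts). `job` receives the 1-based attempt
/// number and returns that attempt's exit status.
/// Returns the final exit status and the number of attempts made.
fn run_with_automatic_retry<F>(limit: u32, mut job: F) -> (i32, u32)
where
    F: FnMut(u32) -> i32,
{
    let mut attempt = 1;
    loop {
        let status = job(attempt);
        // Stop on success, or once the retry budget is exhausted.
        if status == 0 || attempt > limit {
            return (status, attempt);
        }
        attempt += 1;
    }
}
```

With `limit: 2`, a flaky GPU test that fails twice and then passes still reports success, on its third attempt.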

@@ -1,21 +0,0 @@

#!/bin/bash -e

cd "$(dirname "$0")/.."

ci/docker-run.sh evilmachines/rust-cargo-kcov \

bash -exc "\

export RUST_BACKTRACE=1; \

cargo build --verbose; \

cargo kcov --lib --verbose; \

"

echo Coverage report:

ls -l target/cov/index.html

if [[ -z "$CODECOV_TOKEN" ]]; then

echo CODECOV_TOKEN undefined

else

bash <(curl -s https://codecov.io/bash)

fi

exit 0

@@ -19,7 +19,12 @@ fi

docker pull "$IMAGE"

shift

ARGS=(--workdir /solana --volume "$PWD:/solana" --rm)

ARGS=(

--workdir /solana

--volume "$PWD:/solana"

--env "HOME=/solana"

--rm

)

ARGS+=(--env "CARGO_HOME=/solana/.cargo")

@@ -28,14 +33,18 @@ ARGS+=(--env "CARGO_HOME=/solana/.cargo")

ARGS+=(--security-opt "seccomp=unconfined")

# Ensure files are created with the current host uid/gid

ARGS+=(--user "$(id -u):$(id -g)")

if [[ -z "$SOLANA_DOCKER_RUN_NOSETUID" ]]; then

ARGS+=(--user "$(id -u):$(id -g)")

fi

# Environment variables to propagate into the container

ARGS+=(

--env BUILDKITE_BRANCH

--env BUILDKITE_TAG

--env CODECOV_TOKEN

--env CRATES_IO_TOKEN

--env SNAPCRAFT_CREDENTIALS_KEY

)

set -x

docker run "${ARGS[@]}" "$IMAGE" "$@"

exec docker run "${ARGS[@]}" "$IMAGE" "$@"
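The updated `docker-run.sh` accumulates `docker run` options in the `ARGS` array. The sketch below rebuilds the same argument vector so the `SOLANA_DOCKER_RUN_NOSETUID` switch is explicit. It is only a sketch, under these assumptions:

- It models only the lines visible in the two hunks. The two lines hidden between the hunks are not modeled.
- `docker_run_args` is a hypothetical name.
- `uid`/`gid` stand in for `$(id -u)`/`$(id -g)`, and `env` stands in for the caller's environment.

```rust
use std::collections::HashMap;

/// Hypothetical reconstruction of the visible `ARGS` lines in ci/docker-run.sh.
/// Returns everything after the `docker` program name.
fn docker_run_args(
    pwd: &str,
    uid: u32,
    gid: u32,
    env: &HashMap<String, String>,
    image: &str,
    cmd: &[&str],
) -> Vec<String> {
    let mut args: Vec<String> = vec!["run".into(), "--workdir".into(), "/solana".into()];
    args.push("--volume".into());
    args.push(format!("{pwd}:/solana"));
    for s in [
        "--env", "HOME=/solana", "--rm",
        "--env", "CARGO_HOME=/solana/.cargo",
        "--security-opt", "seccomp=unconfined",
    ] {
        args.push(s.to_string());
    }
    // `[[ -z "$SOLANA_DOCKER_RUN_NOSETUID" ]]` is true when the variable is unset or empty.
    let set_user = env
        .get("SOLANA_DOCKER_RUN_NOSETUID")
        .map_or(true, |v| v.is_empty());
    if set_user {
        args.push("--user".into());
        args.push(format!("{uid}:{gid}"));
    }
    // `--env NAME` without a value makes docker copy NAME from the host environment.
    for name in [
        "BUILDKITE_BRANCH", "BUILDKITE_TAG", "CODECOV_TOKEN",
        "CRATES_IO_TOKEN", "SNAPCRAFT_CREDENTIALS_KEY",
    ] {
        args.push("--env".into());
        args.push(name.into());
    }
    args.push(image.to_string());
    args.extend(cmd.iter().map(|s| s.to_string()));
    args
}
```

Passing the result to `std::process::Command::new("docker").args(...)` would mirror the script's final `exec docker run` line.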

7 ci/docker-snapcraft/Dockerfile (Normal file)

@@ -0,0 +1,7 @@

FROM snapcraft/xenial-amd64

# Update snapcraft to latest version

RUN apt-get update -qq \

&& apt-get install -y snapcraft \

&& rm -rf /var/lib/apt/lists/* \

&& snapcraft --version

6 ci/docker-snapcraft/build.sh (Executable file)

@@ -0,0 +1,6 @@

#!/bin/bash -ex

cd "$(dirname "$0")"

docker build -t solanalabs/snapcraft .

docker push solanalabs/snapcraft

57 ci/hoover.sh (Executable file)

@@ -0,0 +1,57 @@

#!/bin/bash

#

# Regular maintenance performed on a buildkite agent to control disk usage

#

echo --- Delete all exited containers first

(

set -x

exited=$(docker ps -aq --no-trunc --filter "status=exited")

if [[ -n "$exited" ]]; then

# shellcheck disable=SC2086 # Don't want to double quote "$exited"

docker rm $exited

fi

)

echo --- Delete untagged images

(

set -x

untagged=$(docker images | grep '<none>'| awk '{ print $3 }')

if [[ -n "$untagged" ]]; then

# shellcheck disable=SC2086 # Don't want to double quote "$untagged"

docker rmi $untagged

fi

)

echo --- Delete all dangling images

(

set -x

dangling=$(docker images --filter 'dangling=true' -q --no-trunc | sort | uniq)

if [[ -n "$dangling" ]]; then

# shellcheck disable=SC2086 # Don't want to double quote "$dangling"

docker rmi $dangling

fi

)

echo --- Remove unused docker networks

(

set -x

docker network prune -f

)

echo "--- Delete /tmp files older than 1 day owned by $(whoami)"

(

set -x

find /tmp -maxdepth 1 -user "$(whoami)" -mtime +1 -print0 | xargs -0 rm -rf

)

echo --- System Status

(

set -x

docker images

docker ps

docker network ls

df -h

)

exit 0
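The `/tmp` cleanup step announces "older than 1 day". The GNU findutils manual, however, says `find` drops the fractional part of a file's age in 24-hour periods before comparing. So `-mtime +1` only matches files last modified at least 48 hours before `find` started.

The sketch below models that predicate. `matches_mtime_plus` is a hypothetical helper, not part of the script.

```rust
use std::time::{Duration, SystemTime};

/// One 24-hour period, the unit `find -mtime` counts in.
const DAY: Duration = Duration::from_secs(24 * 60 * 60);

/// Models GNU find's `-mtime +n` test (hypothetical helper): the file's age
/// is truncated to whole 24-hour periods, which must then be strictly greater than `n`.
fn matches_mtime_plus(modified: SystemTime, now: SystemTime, n: u64) -> bool {
    match now.duration_since(modified) {
        Ok(age) => age.as_secs() / DAY.as_secs() > n,
        // A modification time in the future never counts as "older".
        Err(_) => false,
    }
}
```

If a true "older than 24 hours" cutoff were wanted, `-mtime +0` (or GNU find's `-mmin +1440`) would be the closer match.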

@@ -16,4 +16,4 @@ if [[ ! -x $BKRUN ]]; then

fi

set -x

./ci/node_modules/.bin/bkrun ci/buildkite.yml

exec ./ci/node_modules/.bin/bkrun ci/buildkite.yml

40 ci/snap.sh (Executable file)

@@ -0,0 +1,40 @@

#!/bin/bash -e

cd "$(dirname "$0")/.."

DRYRUN=

if [[ -z $BUILDKITE_BRANCH || $BUILDKITE_BRANCH =~ pull/* ]]; then

DRYRUN="echo"

fi

if [[ -z "$BUILDKITE_TAG" ]]; then

SNAP_CHANNEL=edge

else

SNAP_CHANNEL=beta

fi

if [[ -z $DRYRUN ]]; then

[[ -n $SNAPCRAFT_CREDENTIALS_KEY ]] || {

echo SNAPCRAFT_CREDENTIALS_KEY not defined

exit 1;

}

(

openssl aes-256-cbc -d \

-in ci/snapcraft.credentials.enc \

-out ci/snapcraft.credentials \

-k "$SNAPCRAFT_CREDENTIALS_KEY"

snapcraft login --with ci/snapcraft.credentials

) || {

rm -f ci/snapcraft.credentials;

exit 1

}

fi

set -x

echo --- build

snapcraft

echo --- publish

$DRYRUN snapcraft push solana_*.snap --release $SNAP_CHANNEL
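`ci/snap.sh` makes two independent decisions: whether to publish for real, and which Snap channel to target.

One detail is easy to miss. Inside `[[ ]]`, the unquoted right-hand side of `=~` is an extended regular expression. So `pull/*` means "pull" followed by zero or more slashes, unanchored. It therefore matches any branch name that merely contains `pull`, not only `pull/...` refs.

The sketch below reproduces both decisions. `snap_plan` and `SnapPlan` are hypothetical names. Unset and empty variables are both modeled as empty, as `-z` treats them.

```rust
/// Hypothetical model of the publish decisions in ci/snap.sh.
#[derive(Debug, PartialEq)]
struct SnapPlan {
    /// True when `DRYRUN="echo"`, i.e. `snapcraft push` is only echoed.
    dry_run: bool,
    /// Value of `SNAP_CHANNEL`.
    channel: &'static str,
}

fn snap_plan(branch: Option<&str>, tag: Option<&str>) -> SnapPlan {
    let branch = branch.unwrap_or("");
    // ERE `pull/*` is unanchored: "pull" plus zero or more '/' characters,
    // so it matches exactly when the branch name contains "pull".
    let dry_run = branch.is_empty() || branch.contains("pull");
    // No tag -> daily `edge` build; tagged build -> `beta`.
    let channel = if tag.unwrap_or("").is_empty() { "edge" } else { "beta" };
    SnapPlan { dry_run, channel }
}
```

For example, a branch named `pullreq-fix` would also skip the real publish. Anchoring the pattern (e.g. `^pull/`) would narrow the check if that is not intended.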

BIN ci/snapcraft.credentials.enc (Normal file)

Binary file not shown.

@@ -1,22 +0,0 @@

#!/bin/bash -e

cd "$(dirname "$0")/.."

LIB=libcuda_verify_ed25519.a

if [[ ! -r $LIB ]]; then

if [[ -z "${libcuda_verify_ed25519_URL:-}" ]]; then

echo "$0 skipped. Unable to locate $LIB"

exit 0

fi

export LD_LIBRARY_PATH=/usr/local/cuda/lib64

export PATH=$PATH:/usr/local/cuda/bin

curl -X GET -o $LIB "$libcuda_verify_ed25519_URL"

fi

# shellcheck disable=SC1090 # <-- shellcheck can't follow ~

source ~/.cargo/env

export RUST_BACKTRACE=1

cargo test --features=cuda

exit 0

@@ -1,9 +0,0 @@

#!/bin/bash -e

cd "$(dirname "$0")/.."

rustc --version

cargo --version

export RUST_BACKTRACE=1

cargo test -- --ignored

@@ -2,13 +2,31 @@

cd "$(dirname "$0")/.."

export RUST_BACKTRACE=1

rustc --version

cargo --version

export RUST_BACKTRACE=1

rustup component add rustfmt-preview

cargo build --verbose --features unstable

cargo test --verbose --features unstable

cargo bench --verbose --features unstable

_() {

echo "--- $*"

"$@"

}

_ cargo build --verbose --features unstable

_ cargo test --verbose --features unstable

_ cargo bench --verbose --features unstable

# Coverage ...

_ cargo install --force cargo-cov

_ cargo cov test

_ cargo cov report

echo --- Coverage report:

ls -l target/cov/report/index.html

if [[ -z "$CODECOV_TOKEN" ]]; then

echo CODECOV_TOKEN undefined

else

bash <(curl -s https://codecov.io/bash) -x 'llvm-cov gcov'

fi

exit 0

12 ci/test-stable-perf.sh (Executable file)

@@ -0,0 +1,12 @@

#!/bin/bash -e

cd "$(dirname "$0")/.."

./fetch-perf-libs.sh

export LD_LIBRARY_PATH=$PWD:/usr/local/cuda/lib64

export PATH=$PATH:/usr/local/cuda/bin

export RUST_BACKTRACE=1

set -x

exec cargo test --features=cuda,erasure

@@ -2,13 +2,17 @@

cd "$(dirname "$0")/.."

export RUST_BACKTRACE=1

rustc --version

cargo --version

export RUST_BACKTRACE=1

rustup component add rustfmt-preview

cargo fmt -- --write-mode=diff

cargo build --verbose

cargo test --verbose

_() {

echo "--- $*"

"$@"

}

exit 0

_ rustup component add rustfmt-preview

_ cargo fmt -- --write-mode=diff

_ cargo build --verbose

_ cargo test --verbose

_ cargo test -- --ignored

@@ -1,65 +0,0 @@

|

||||

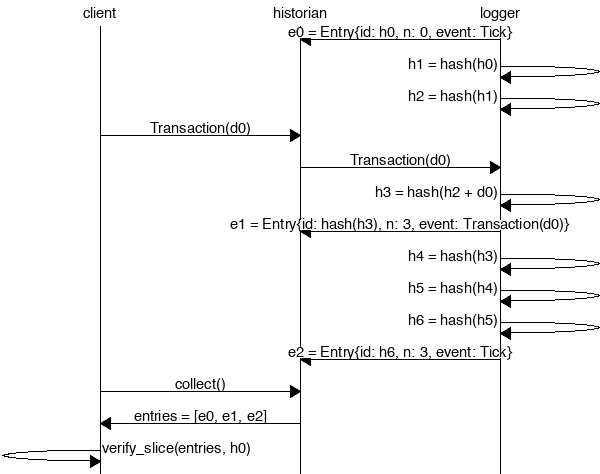

The Historian

|

||||

===

|

||||

|

||||

Create a *Historian* and send it *events* to generate an *event log*, where each *entry*

|

||||

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

||||

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

||||

|

||||

|

||||

|

||||

```rust

|

||||

extern crate solana;

|

||||

|

||||

use solana::historian::Historian;

|

||||

use solana::ledger::{Block, Entry, Hash};

|

||||

use solana::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||

use std::thread::sleep;

|

||||

use std::time::Duration;

|

||||

use std::sync::mpsc::SendError;

|

||||

|

||||

fn create_ledger(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

sleep(Duration::from_millis(15));

|

||||

let tokens = 42;

|

||||

let keypair = generate_keypair();

|

||||

let event0 = Event::new_claim(get_pubkey(&keypair), tokens, sign_claim_data(&tokens, &keypair));

|

||||

hist.sender.send(event0)?;

|

||||

sleep(Duration::from_millis(10));

|

||||

Ok(())

|

||||

}

|

||||

|

||||

fn main() {

|

||||

let seed = Hash::default();

|

||||

let hist = Historian::new(&seed, Some(10));

|

||||

create_ledger(&hist).expect("send error");

|

||||

drop(hist.sender);

|

||||

let entries: Vec<Entry<Hash>> = hist.receiver.iter().collect();

|

||||

for entry in &entries {

|

||||

println!("{:?}", entry);

|

||||

}

|

||||

// Proof-of-History: Verify the historian learned about the events

|

||||

// in the same order they appear in the vector.

|

||||

assert!(entries[..].verify(&seed));

|

||||

}

|

||||

```

|

||||

|

||||

Running the program should produce a ledger similar to:

|

||||

|

||||

```rust

|

||||

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { tokens: 42 } }

|

||||

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

||||

```

|

||||

|

||||

Proof-of-History

|

||||

---

|

||||

|

||||

Take note of the last line:

|

||||

|

||||

```rust

|

||||

assert!(entries[..].verify(&seed));

|

||||

```

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the
historian, we can verify that `id` is the result of applying a sha256 hash to the previous `id`
exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is
included in the hash, the events cannot be reordered without regenerating all the hashes.
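The verification rule above can be sketched in a few lines. This is a minimal, dependency-free illustration, not the solana crate's `verify`. It makes two assumptions. First, `Entry` is a hypothetical struct whose `event` is `None` for a Tick. Second, std's `DefaultHasher` (SipHash) stands in for sha256, so the sketch builds without external crates.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// ASSUMPTION: a u64 from DefaultHasher stands in for a sha256 digest.
type Id = u64;

fn hash_step(prev: Id, data: &[u8]) -> Id {
    let mut h = DefaultHasher::new();
    prev.hash(&mut h);
    data.hash(&mut h);
    h.finish()
}

/// Hypothetical ledger entry; `event == None` models a Tick.
pub struct Entry {
    pub num_hashes: u64,
    pub id: Id,
    pub event: Option<Vec<u8>>,
}

/// Hash `prev` exactly `num_hashes` times, then mix in the event data, if any.
pub fn next_id(prev: Id, num_hashes: u64, event: Option<&[u8]>) -> Id {
    let mut id = prev;
    for _ in 0..num_hashes {
        id = hash_step(id, &[]);
    }
    match event {
        Some(data) => hash_step(id, data),
        None => id,
    }
}

/// True if every entry's `id` can be regenerated from the previous entry's `id`.
pub fn verify(entries: &[Entry], seed: Id) -> bool {
    let mut prev = seed;
    for e in entries {
        if next_id(prev, e.num_hashes, e.event.as_deref()) != e.id {
            return false;
        }
        prev = e.id;
    }
    true
}
```

Swapping two entries makes `verify` fail, because each `id` commits to the `id` before it.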

@@ -1,18 +0,0 @@

msc {
  client,historian,recorder;

  recorder=>historian [ label = "e0 = Entry{id: h0, n: 0, event: Tick}" ] ;
  recorder=>recorder [ label = "h1 = hash(h0)" ] ;
  recorder=>recorder [ label = "h2 = hash(h1)" ] ;
  client=>historian [ label = "Transaction(d0)" ] ;
  historian=>recorder [ label = "Transaction(d0)" ] ;
  recorder=>recorder [ label = "h3 = hash(h2 + d0)" ] ;
  recorder=>historian [ label = "e1 = Entry{id: hash(h3), n: 3, event: Transaction(d0)}" ] ;
  recorder=>recorder [ label = "h4 = hash(h3)" ] ;
  recorder=>recorder [ label = "h5 = hash(h4)" ] ;
  recorder=>recorder [ label = "h6 = hash(h5)" ] ;
  recorder=>historian [ label = "e2 = Entry{id: h6, n: 3, event: Tick}" ] ;
  client=>historian [ label = "collect()" ] ;
  historian=>client [ label = "entries = [e0, e1, e2]" ] ;
  client=>client [ label = "entries.verify(h0)" ] ;
}

37

fetch-perf-libs.sh

Executable file

@@ -0,0 +1,37 @@

#!/bin/bash -e

if [[ $(uname) != Linux ]]; then
  echo Performance libraries are only available for Linux
  exit 1
fi

if [[ $(uname -m) != x86_64 ]]; then
  echo Performance libraries are only available for x86_64 architecture
  exit 1
fi

(
  set -x
  curl -o solana-perf.tgz \
    https://solana-perf.s3.amazonaws.com/master/x86_64-unknown-linux-gnu/solana-perf.tgz
  tar zxvf solana-perf.tgz
)

if [[ -r /usr/local/cuda/version.txt && -r cuda-version.txt ]]; then
  if ! diff /usr/local/cuda/version.txt cuda-version.txt > /dev/null; then
    echo ==============================================
    echo Warning: possible CUDA version mismatch
    echo
    echo "Expected version: $(cat cuda-version.txt)"
    echo "Detected version: $(cat /usr/local/cuda/version.txt)"
    echo ==============================================
  fi
else
  echo ==============================================
  echo Warning: unable to validate CUDA version
  echo ==============================================
fi

echo "Downloaded solana-perf version: $(cat solana-perf-HEAD.txt)"

exit 0

@@ -1,16 +1,17 @@

#!/bin/bash -e
#!/bin/bash

if [[ -z "$1" ]]; then
  echo "usage: $0 [network path to solana repo on leader machine]"
  exit 1
if [[ -z $1 ]]; then
  echo "usage: $0 [network path to solana repo on leader machine] <number of nodes in the network>"
  exit 1
fi

LEADER="$1"
LEADER=$1
COUNT=${2:-1}

set -x
export RUST_LOG=solana=info
rsync -v -e ssh "$LEADER/leader.json" .
rsync -v -e ssh "$LEADER/mint-demo.json" .
rsync -vz "$LEADER"/{leader.json,mint-demo.json} . || exit $?

# if RUST_LOG is unset, default to info
export RUST_LOG=${RUST_LOG:-solana=info}

cargo run --release --bin solana-client-demo -- \
  -l leader.json < mint-demo.json 2>&1 | tee client.log
  -n "$COUNT" -l leader.json -d < mint-demo.json 2>&1 | tee client.log

@@ -1,4 +1,28 @@

#!/bin/bash
export RUST_LOG=solana=info
sudo sysctl -w net.core.rmem_max=26214400
cargo run --release --bin solana-fullnode -- -l leader.json < genesis.log
here=$(dirname "$0")

# shellcheck source=/dev/null
. "${here}"/myip.sh

myip=$(myip) || exit $?

[[ -f leader-"${myip}".json ]] || {
  echo "I can't find a matching leader config file for \"${myip}\"...
Please run ${here}/setup.sh first.
"
  exit 1
}

# if RUST_LOG is unset, default to info
export RUST_LOG=${RUST_LOG:-solana=info}

[[ $(uname) = Linux ]] && sudo sysctl -w net.core.rmem_max=26214400 1>/dev/null 2>/dev/null

# this makes a leader.json file available alongside genesis, etc. for
# validators and clients
cp leader-"${myip}".json leader.json

cargo run --release --bin solana-fullnode -- \
  -l leader-"${myip}".json \
  < genesis.log tx-*.log \
  > tx-"$(date -u +%Y%m%d%H%M%S%N)".log

58

multinode-demo/myip.sh

Executable file

@@ -0,0 +1,58 @@

#!/bin/bash

function myip()
{
  declare ipaddrs=( )

  # query interwebs
  mapfile -t ipaddrs < <(curl -s ifconfig.co)

  # machine's interfaces
  mapfile -t -O "${#ipaddrs[*]}" ipaddrs < \
    <(ifconfig | awk '/inet(6)? (addr:)?/ {print $2}')

  ipaddrs=( "${extips[@]}" "${ipaddrs[@]}" )

  if (( ! ${#ipaddrs[*]} ))
  then
    echo "
myip: error: I'm having trouble determining what our IP address is...
Are we connected to a network?

"
    return 1
  fi


  declare prompt="
Please choose the IP address you want to advertise to the network:

0) ${ipaddrs[0]} <====== this one was returned by the interwebs...
"

  for ((i=1; i < ${#ipaddrs[*]}; i++))
  do
    prompt+=" $i) ${ipaddrs[i]}
"
  done

  while read -r -p "${prompt}
please enter a number [0 for default]: " which
  do
    [[ -z ${which} ]] && break;
    [[ ${which} =~ [0-9]+ ]] && (( which < ${#ipaddrs[*]} )) && break;
    echo "Ug. invalid entry \"${which}\"...
"
    sleep 1
  done

  which=${which:-0}

  echo "${ipaddrs[which]}"

}

if [[ ${0} == "${BASH_SOURCE[0]}" ]]
then
  myip "$@"
fi

15

multinode-demo/setup.sh

Executable file

@@ -0,0 +1,15 @@

#!/bin/bash
here=$(dirname "$0")

# shellcheck source=/dev/null
. "${here}"/myip.sh

myip=$(myip) || exit $?

num_tokens=${1:-1000000000}

cargo run --release --bin solana-mint-demo <<<"${num_tokens}" > mint-demo.json
cargo run --release --bin solana-genesis-demo < mint-demo.json > genesis.log

cargo run --release --bin solana-fullnode-config -- -d > leader-"${myip}".json
cargo run --release --bin solana-fullnode-config -- -b 9000 -d > validator-"${myip}".json

@@ -1,21 +1,32 @@

#!/bin/bash -e
#!/bin/bash
here=$(dirname "$0")

if [[ -z "$1" ]]; then
# shellcheck source=/dev/null
. "${here}"/myip.sh

leader=$1

[[ -z ${leader} ]] && {
  echo "usage: $0 [network path to solana repo on leader machine]"
  exit 1
fi
}

LEADER="$1"
myip=$(myip) || exit $?

set -x
[[ -f validator-"$myip".json ]] || {
  echo "I can't find a matching validator config file for \"${myip}\"...
Please run ${here}/setup.sh first.
"
  exit 1
}

rsync -v -e ssh "$LEADER/mint-demo.json" .
rsync -v -e ssh "$LEADER/leader.json" .
rsync -v -e ssh "$LEADER/genesis.log" .
rsync -vz "${leader}"/{mint-demo.json,leader.json,genesis.log,tx-*.log} . || exit $?

export RUST_LOG=solana=info
[[ $(uname) = Linux ]] && sudo sysctl -w net.core.rmem_max=26214400 1>/dev/null 2>/dev/null

sudo sysctl -w net.core.rmem_max=26214400
# if RUST_LOG is unset, default to info
export RUST_LOG=${RUST_LOG:-solana=info}

cargo run --release --features=cuda --bin solana-fullnode -- \
  -l validator.json -v leader.json -b 9000 -d < genesis.log
cargo run --release --bin solana-fullnode -- \
  -l validator-"${myip}".json -v leader.json \
  < genesis.log tx-*.log

182

rfcs/rfc-001-smart-contracts-engine.md

Normal file

@@ -0,0 +1,182 @@

# Smart Contracts Engine

The goal of this RFC is to define a set of constraints for APIs and runtime such that we can execute our smart contracts safely on massively parallel hardware such as a GPU. Our runtime is built around an OS *syscall* primitive. The difference in a blockchain is that the OS now does a cryptographic check of memory region ownership before accessing the memory in the Solana kernel.

## Toolchain Stack

+---------------------+       +---------------------+
|                     |       |                     |
|   +------------+    |       |   +------------+    |
|   |            |    |       |   |            |    |
|   |  frontend  |    |       |   |  verifier  |    |
|   |            |    |       |   |            |    |
|   +-----+------+    |       |   +-----+------+    |
|         |           |       |         |           |
|         |           |       |         |           |
|   +-----+------+    |       |   +-----+------+    |
|   |            |    |       |   |            |    |
|   |    llvm    |    |       |   |   loader   |    |
|   |            |    +------>+   |            |    |
|   +-----+------+    |       |   +-----+------+    |
|         |           |       |         |           |
|         |           |       |         |           |
|   +-----+------+    |       |   +-----+------+    |
|   |            |    |       |   |            |    |
|   |    ELF     |    |       |   |  runtime   |    |
|   |            |    |       |   |            |    |
|   +------------+    |       |   +------------+    |
|                     |       |                     |
|       client        |       |       solana        |
+---------------------+       +---------------------+

[Figure 1. Smart Contracts Stack]

In Figure 1, an untrusted client creates a program in the front-end language of her choice (like C/C++/Rust/Lua) and compiles it with LLVM to a position-independent shared object ELF targeting BPF bytecode. Solana will safely load and execute the ELF.

## Bytecode

Our bytecode is based on the Berkeley Packet Filter (BPF). The requirements for BPF overlap almost exactly with the requirements we have:

1. Deterministic amount of time to execute the code
2. Bytecode that is portable between machine instruction sets
3. Verified memory accesses
4. Fast to load the object, verify the bytecode and JIT to local machine instruction set

For 1, that means that loops are unrolled, and any backward jump can be guarded with a check against the number of instructions that have been executed at that point. If the limit is reached, the program yields its execution. This involves saving the stack and the current instruction index.
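A toy interpreter can illustrate the guard described for point 1. The instruction set (`Op`), the saved `Frame` and the budget policy are all hypothetical, not BPF or the Solana runtime. The sketch shows only two things: backward jumps consult an executed-instruction counter, and once the limit is hit, execution yields with the instruction index saved so it can resume later.

```rust
/// Hypothetical toy instruction set (not BPF).
#[derive(Clone, Copy, Debug)]
pub enum Op {
    AddImm(i64),                                // acc += imm
    JumpBackIfLt { target: usize, limit: i64 }, // if acc < limit, jump back to target
    Halt,
}

/// Saved execution state: the "stack" (here one accumulator) and the instruction index.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Frame {
    pub pc: usize,
    pub acc: i64,
}

#[derive(Debug, PartialEq)]
pub enum Outcome {
    Halted(Frame),
    Yielded(Frame),
}

/// Run until `Halt`, or until a taken backward jump finds `budget` spent.
/// Assumes the loader already verified that every jump target is in range.
pub fn run(program: &[Op], mut frame: Frame, budget: u64) -> Outcome {
    let mut executed: u64 = 0;
    loop {
        let op = program[frame.pc];
        executed += 1;
        match op {
            Op::AddImm(v) => {
                frame.acc += v;
                frame.pc += 1;
            }
            Op::JumpBackIfLt { target, limit } => {
                if frame.acc < limit {
                    // Guard on the backward jump: yield, saving where to resume.
                    if executed >= budget {
                        return Outcome::Yielded(Frame { pc: target, acc: frame.acc });
                    }
                    frame.pc = target;
                } else {
                    frame.pc += 1;
                }
            }
            Op::Halt => return Outcome::Halted(frame),
        }
    }
}
```

Straight-line code needs no guard, because its length is bounded by the program size. Only backward jumps can make execution time unbounded.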

For 2, the BPF bytecode already maps easily to x86-64, arm64 and other instruction sets.

For 3, every relative load and store can be checked to be within the expected memory that is passed into the ELF. Dynamic loads and stores can do a runtime check against available memory; these will be slow and should be avoided.
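Point 3 separates checks that can be done once, at load time, for relative accesses from checks that must run on every dynamic access. The sketch below illustrates both against a hypothetical `Region` type. The type and its methods are illustrative assumptions, not Solana's memory layout or API.

```rust
/// Hypothetical memory region handed to a program.
pub struct Region {
    bytes: Vec<u8>,
}

#[derive(Debug, PartialEq)]
pub enum Fault {
    OutOfBounds { addr: usize, len: usize },
}

impl Region {
    pub fn new(size: usize) -> Self {
        Region { bytes: vec![0; size] }
    }

    /// Load-time check for an access at a constant offset: if this passes,
    /// the instruction needs no further check when it runs.
    pub fn static_ok(size: usize, offset: usize, len: usize) -> bool {
        offset.checked_add(len).map_or(false, |end| end <= size)
    }

    /// Run-time check for an address only known during execution (the slow path).
    pub fn load_u64(&self, addr: usize) -> Result<u64, Fault> {
        let end = addr.checked_add(8).ok_or(Fault::OutOfBounds { addr, len: 8 })?;
        let slice = self.bytes.get(addr..end).ok_or(Fault::OutOfBounds { addr, len: 8 })?;
        let mut buf = [0u8; 8];
        buf.copy_from_slice(slice);
        Ok(u64::from_le_bytes(buf))
    }

    /// Run-time checked store of a little-endian u64.
    pub fn store_u64(&mut self, addr: usize, value: u64) -> Result<(), Fault> {
        let end = addr.checked_add(8).ok_or(Fault::OutOfBounds { addr, len: 8 })?;
        let slice = self.bytes.get_mut(addr..end).ok_or(Fault::OutOfBounds { addr, len: 8 })?;
        slice.copy_from_slice(&value.to_le_bytes());
        Ok(())
    }
}
```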

For 4, the object is a fully linked PIC ELF with just a single RX segment. Effectively, we link a shared object with `-fpic -target bpf` and use a linker script to collect everything into a single RX segment. Writable globals are not supported.

## Loader

The loader is our first smart contract. The job of this contract is to load the actual program with its own instance data. The loader will verify the bytecode and that the object implements the expected entry points.

Since there is only one RX segment (and therefore no writable globals), the context for the contract instance is passed into each entry point, along with the event data for that entry point.

A client will create a transaction to create a new loader instance:

`Solana_NewLoader(Loader Instance PubKey, proof of key ownership, space I need for my elf)`

A client will then issue a series of transactions to load its ELF into the loader instance it created:

`Loader_UploadElf(Loader Instance PubKey, proof of key ownership, pos start, pos end, data)`

At this point, the client can create a new instance of the module with its own instance address:

`Loader_NewInstance(Loader Instance PubKey, proof of key ownership, Instance PubKey, proof of key ownership)`

Once the instance has been created, the client may need to upload more user data to Solana to configure this instance:

`Instance_UploadModuleData(Instance PubKey, proof of key ownership, pos start, pos end, data)`

Now clients can `start` the instance:

`Instance_Start(Instance PubKey, proof of key ownership)`
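The loader transaction sequence can be modeled as a small state machine. This is a sketch under several assumptions, and none of its names are real Solana APIs:
- `Proof` stands in for "proof of key ownership". It assumes a signature was already checked and carries the signing key.
- `Bank` is a hypothetical in-memory store.
- `new_instance` takes a `space` argument that the RFC does not specify.

Space is reserved up front, so a chunk written outside `[0, space)` is rejected.

```rust
use std::collections::HashMap;

/// Hypothetical 32-byte public key.
pub type PubKey = [u8; 32];

/// ASSUMPTION: stands in for a verified signature; carries the key that signed.
pub struct Proof(pub PubKey);

#[derive(Debug, PartialEq)]
pub enum Error { BadProof, OutOfRange, Unknown, Exists }

struct Instance {
    loader: PubKey,
    data: Vec<u8>,
    started: bool,
}

#[derive(Default)]
pub struct Bank {
    loaders: HashMap<PubKey, Vec<u8>>, // loader instance key -> ELF bytes
    instances: HashMap<PubKey, Instance>,
}

fn owns(key: &PubKey, proof: &Proof) -> Result<(), Error> {
    if &proof.0 == key { Ok(()) } else { Err(Error::BadProof) }
}

/// Copy `data` into `buf[start..end]`; writes past the reserved space fail.
fn write_range(buf: &mut [u8], start: usize, end: usize, data: &[u8]) -> Result<(), Error> {
    if start > end || end > buf.len() || end - start != data.len() {
        return Err(Error::OutOfRange);
    }
    buf[start..end].copy_from_slice(data);
    Ok(())
}

impl Bank {
    /// Solana_NewLoader: reserve `space` bytes for the ELF.
    pub fn new_loader(&mut self, key: PubKey, proof: &Proof, space: usize) -> Result<(), Error> {
        owns(&key, proof)?;
        if self.loaders.contains_key(&key) { return Err(Error::Exists); }
        self.loaders.insert(key, vec![0; space]);
        Ok(())
    }

    /// Loader_UploadElf: write one chunk of the ELF at [start, end).
    pub fn upload_elf(&mut self, key: PubKey, proof: &Proof, start: usize, end: usize, data: &[u8]) -> Result<(), Error> {
        owns(&key, proof)?;
        let elf = self.loaders.get_mut(&key).ok_or(Error::Unknown)?;
        write_range(elf, start, end, data)
    }

    /// Loader_NewInstance; ASSUMPTION: instance data `space` is supplied here.
    pub fn new_instance(&mut self, loader: PubKey, lproof: &Proof, inst: PubKey, iproof: &Proof, space: usize) -> Result<(), Error> {
        owns(&loader, lproof)?;
        owns(&inst, iproof)?;
        if !self.loaders.contains_key(&loader) { return Err(Error::Unknown); }
        if self.instances.contains_key(&inst) { return Err(Error::Exists); }
        self.instances.insert(inst, Instance { loader, data: vec![0; space], started: false });
        Ok(())
    }

    /// Instance_UploadModuleData: configure the instance.
    pub fn upload_module_data(&mut self, inst: PubKey, proof: &Proof, start: usize, end: usize, data: &[u8]) -> Result<(), Error> {
        owns(&inst, proof)?;
        let i = self.instances.get_mut(&inst).ok_or(Error::Unknown)?;
        write_range(&mut i.data, start, end, data)
    }

    /// Instance_Start.
    pub fn start(&mut self, inst: PubKey, proof: &Proof) -> Result<(), Error> {
        owns(&inst, proof)?;
        self.instances.get_mut(&inst).ok_or(Error::Unknown)?.started = true;
        Ok(())
    }

    pub fn is_started(&self, inst: PubKey) -> bool {
        self.instances.get(&inst).map_or(false, |i| i.started)
    }
}
```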

## Runtime

Our goal with the runtime is to have a general-purpose execution environment that is highly parallelizable and doesn't require dynamic resource management. We want to execute as many contracts as we can in parallel, and have them pass or fail without a destructive state change.

### State and Entry Point

State is addressed by an account, which is currently simply the PubKey. Our goal is to eliminate dynamic memory allocation in the smart contract itself, so the contract is a function that takes a mapping of [(PubKey,State)] and returns [(PubKey, State')]. The output keys are a subset of the input keys. Three basic kinds of state exist:

* Instance State
* Participant State
* Caller State

There isn't any difference in how each is implemented, but conceptually Participant State is memory that is allocated for each participant in the contract, Instance State is memory that is allocated for the contract itself, and Caller State is memory that the transaction's caller has allocated.
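The entry-point contract (keyed state in, updated keyed state out, output keys a subset of input keys) can be enforced by the runtime before anything is committed. In the minimal sketch below, `Contract` and `run_and_apply` are hypothetical names. The rule that no state may change size is an assumption standing in for "no dynamic memory allocation". Results are committed only after every output passes, so a failing contract leaves state untouched.

```rust
use std::collections::HashMap;

pub type PubKey = [u8; 32];
pub type State = Vec<u8>;

/// Hypothetical contract shape: [(PubKey, State)] -> [(PubKey, State')].
pub type Contract = fn(&[(PubKey, State)]) -> Vec<(PubKey, State)>;

#[derive(Debug, PartialEq)]
pub enum RunError {
    UnknownKey(PubKey),
    SizeChanged(PubKey),
}

pub fn run_and_apply(
    contract: Contract,
    inputs: &[(PubKey, State)],
    store: &mut HashMap<PubKey, State>,
) -> Result<(), RunError> {
    let outputs = contract(inputs);
    for (key, new_state) in &outputs {
        // Output keys must be a subset of the input keys.
        let old = inputs
            .iter()
            .find(|(k, _)| k == key)
            .map(|(_, s)| s)
            .ok_or(RunError::UnknownKey(*key))?;
        // ASSUMPTION: state may not grow or shrink (no dynamic allocation).
        if old.len() != new_state.len() {
            return Err(RunError::SizeChanged(*key));
        }
    }
    // Commit only after every output passed, so failure is non-destructive.
    for (key, new_state) in outputs {
        store.insert(key, new_state);
    }
    Ok(())
}
```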

### Call

```
#include <stdint.h>

struct instance_data;

void call(
    const struct instance_data *instance,
    const uint8_t kind[],   // instance|participant|caller|read|write
    const uint8_t *keys[],
    uint8_t *data[],
    int num,
    uint8_t dirty[],        // dirty memory bits
    uint8_t *userdata       // current transaction data
);
```

To call this operation, the transaction destined for the contract instance specifies which keyed state it should present to the `call` function. To allocate the state memory or a call context, the client first has to call a function on the contract with the designated address that will own the state.

At its core, this is a system call that requires cryptographic proof of ownership of memory regions, instead of an OS that checks page tables for access rights.

* `Instance_AllocateContext(Instance PubKey, My PubKey, Proof of key ownership)`

Any transaction can then call `call` on the contract with a set of keys. It's up to the contract itself to manage ownership:

* `Instance_Call(Instance PubKey, [Context PubKeys], proofs of ownership, userdata...)`

Contracts should be able to read any state that is part of Solana, but only write to state that the contract allocated.
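One way a runtime could enforce "read anything, write only what you allocated" is to compare the slots presented to `call` before and after it runs. The hypothetical `Slot` and `check_writes` below are not Solana APIs. The sketch compares snapshots rather than trusting the contract's `dirty[]` bits. Unchanged slots always pass, and a changed slot is legal only if the calling contract owns it.

```rust
pub type PubKey = [u8; 32];

/// Hypothetical keyed state slot presented to `call`: its key, the contract
/// instance that allocated it, and its bytes.
#[derive(Clone, Debug, PartialEq)]
pub struct Slot {
    pub key: PubKey,
    pub owner: PubKey,
    pub data: Vec<u8>,
}

/// Run after `call` returns. Returns the key of the first illegal write.
pub fn check_writes(contract: &PubKey, before: &[Slot], after: &[Slot]) -> Result<(), PubKey> {
    assert_eq!(before.len(), after.len(), "call must not add or drop slots");
    for (old, new) in before.iter().zip(after) {
        // Keys and ownership are immutable from inside `call`.
        if old.key != new.key || old.owner != new.owner {
            return Err(old.key);
        }
        // Reads are free; a modified slot must belong to the caller contract.
        if old.data != new.data && &old.owner != contract {
            return Err(old.key);
        }
    }
    Ok(())
}
```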

#### Caller State

Caller `state` is memory allocated for the `call` that belongs to the public key that is issuing the `call`. This is the caller's context.

#### Instance State

Instance `state` is memory that belongs to this contract instance. We may also need module-wide `state`.

#### Participant State

Participant `state` is any other memory. In some cases it may make sense to have these allocated as part of the call by the caller.

### Reduce

Some operations on the contract will require iteration over all the keys. To make this parallelizable, the iteration is broken up into reduce calls whose results are combined.

```
void reduce_m(
    const struct instance_data *instance,
    const uint8_t *keys[],
    const uint8_t *data[],
    int num,
    uint8_t *reduce_data
);

void reduce_r(
    const struct instance_data *instance,
    const uint8_t *reduce_inputs[],
    int num,
    uint8_t *reduce_data
);
```
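The `reduce_m`/`reduce_r` split can be illustrated with a parallel sum of balances. In this sketch, `reduce_m` folds one chunk of keyed state into a partial result and `reduce_r` combines partials. The 8-byte little-endian balance encoding and the thread-per-chunk scheduling are assumptions for illustration, not the runtime's design. Splitting only works if the combining step is associative, because the runtime is free to choose chunk boundaries.

```rust
use std::thread;

/// Hypothetical per-key state: a balance stored as 8 little-endian bytes.
pub type State = [u8; 8];

/// Analogue of `reduce_m`: fold one chunk of keyed state into a partial result.
pub fn reduce_m(states: &[State]) -> u64 {
    states.iter().map(|s| u64::from_le_bytes(*s)).sum()
}

/// Analogue of `reduce_r`: combine partial results.
pub fn reduce_r(partials: &[u64]) -> u64 {
    partials.iter().sum()
}

/// Run `reduce_m` over `chunk`-sized slices in parallel, then combine with `reduce_r`.
pub fn parallel_total(states: &[State], chunk: usize) -> u64 {
    let partials: Vec<u64> = thread::scope(|s| {
        let handles: Vec<_> = states
            .chunks(chunk.max(1))
            .map(|c| s.spawn(move || reduce_m(c)))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    reduce_r(&partials)
}
```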

### Execution

Transactions are batched and processed in parallel at each stage.

```
+-----------+    +--------------+      +-----------+    +---------------+
| sigverify |-+->| debit commit |---+->| execution |-+->| memory commit |
+-----------+ |  +--------------+   |  +-----------+ |  +---------------+
              |                     |                |
              |  +---------------+  |                |  +--------------+
              |->| memory verify |->+                +->| debit undo   |
                 +---------------+                   |  +--------------+
                                                     |
                                                     |  +---------------+
                                                     +->| credit commit |
                                                        +---------------+
```

The `debit verify` stage is very similar to `memory verify`. Proof of key ownership is used to check whether the caller's key has some state allocated with the contract; then the memory is loaded and executed. After the execution stage, the dirty pages are written back by the contract. Because we know all the memory accesses during execution, we can batch transactions that do not interfere with each other. We can also apply the `debit undo` and `credit commit` stages of the transaction. `debit undo` is run in case of an exception during contract execution: only transfers may be reversed, and fees are committed to Solana.
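Because each transaction's memory accesses are known up front, non-interfering batches can be formed before execution. The sketch uses a hypothetical `Tx` with declared read and write key lists. It packs transactions greedily and in order, which is only one simple policy and not necessarily the one Solana uses. A transaction starts a new batch when it writes a key already read or written in the current batch, or reads a key already written there.

```rust
use std::collections::HashSet;

pub type PubKey = u32; // hypothetical short key for readability

/// A transaction's declared memory accesses.
pub struct Tx {
    pub reads: Vec<PubKey>,
    pub writes: Vec<PubKey>,
}

/// Returns batches of transaction indices; batches run in order, members in parallel.
pub fn batch(txs: &[Tx]) -> Vec<Vec<usize>> {
    let mut batches: Vec<Vec<usize>> = Vec::new();
    let mut written: HashSet<PubKey> = HashSet::new();
    let mut read: HashSet<PubKey> = HashSet::new();
    let mut current: Vec<usize> = Vec::new();
    for (i, tx) in txs.iter().enumerate() {
        let conflicts = tx.writes.iter().any(|k| written.contains(k) || read.contains(k))
            || tx.reads.iter().any(|k| written.contains(k));
        if conflicts {
            // Close the batch so this tx observes the earlier writes.
            batches.push(std::mem::take(&mut current));
            written.clear();
            read.clear();
        }
        written.extend(tx.writes.iter().copied());
        read.extend(tx.reads.iter().copied());
        current.push(i);
    }
    if !current.is_empty() {
        batches.push(current);
    }
    batches
}
```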

### GPU execution

A single contract can read and write to separate key pairs without interference. These separate calls to the same contract can execute on the same GPU thread over different memory using different SIMD lanes.

## Notes

1. There is no dynamic memory allocation.
2. Persistent memory is allocated to a key with ownership.
3. Contracts can `call` to update key-owned state.
4. Contracts can `reduce` over the memory to aggregate state.
5. `call` is just a *syscall* that does a cryptographic check of memory ownership.

43

scripts/perf-plot.py

Executable file

@@ -0,0 +1,43 @@

#!/usr/bin/env python

import matplotlib
matplotlib.use('Agg')

import matplotlib.pyplot as plt
import json
import sys

stages_to_counters = {}
stages_to_time = {}

if len(sys.argv) != 2:
    print("USAGE: {} <input file>".format(sys.argv[0]))
    sys.exit(1)

with open(sys.argv[1]) as fh:
    for line in fh.readlines():
        if "COUNTER" in line:
            json_part = line[line.find("{"):]
            x = json.loads(json_part)
            counter = x['name']
            if not (counter in stages_to_counters):
                stages_to_counters[counter] = []
                stages_to_time[counter] = []
            stages_to_counters[counter].append(x['counts'])
            stages_to_time[counter].append(x['now'])

fig, ax = plt.subplots()

for stage in stages_to_counters.keys():
    plt.plot(stages_to_time[stage], stages_to_counters[stage], label=stage)

plt.xlabel('ms')
plt.ylabel('count')

plt.legend(bbox_to_anchor=(0., 1.02, 1., .102), loc=3,
           ncol=2, mode="expand", borderaxespad=0.)

plt.locator_params(axis='x', nbins=10)
plt.grid(True)

plt.savefig("perf.pdf")

69

snap/snapcraft.yaml

Normal file

@@ -0,0 +1,69 @@

name: solana
version: git
summary: Blockchain, Rebuilt for Scale
description: |
  710,000 tx/s with off-the-shelf hardware and no sharding.
  Scales with Moore's Law.
grade: devel

# TODO: solana-perf-fullnode does not yet run with 'strict' confinement due to the