Compare commits

15 Commits

| SHA1 |
|---|
| 66e016c6dc |
| 74b12e35bf |
| 388d8ccd9f |
| 9720da34db |
| 090197227f |
| 48857ae88e |
| ad77f43b9d |
| 9d96d9bef5 |
| 7101f65a8b |
| af3b5e9ce1 |
| 7deac5693c |
| d5f6ee4620 |
| fb73e6cb9b |
| a6e64aea67 |
| b3f21ddbba |

28

CHANGELOG.md

@@ -1,20 +1,36 @@
## v0.4.3 (Unreleased)
## v0.4.3 (July 25, 2019)

### Notes

### Features
- **Docker users:** The `$PASSWORD` and `$DATADIR` environment variables are no longer supported as of this release. Instead, mount the password file and the data directory as volumes and pass them with the `--password` and `--datadir` flags. For example:
```bash
$ docker run -it -v $PWD/hostdata:/data \
-v $PWD/password:/password \
ethersphere/swarm:0.4.3 \
--datadir /data \
--password /password
```

### Improvements
### Bug fixes and improvements

### Bug fixes
* [#1586](https://github.com/ethersphere/swarm/pull/1586): network: structured output for kademlia table
* [#1582](https://github.com/ethersphere/swarm/pull/1582): client: add bzz client, update smoke tests
* [#1578](https://github.com/ethersphere/swarm/pull/1578): swarm-smoke: fix check max prox hosts for pull/push sync modes
* [#1557](https://github.com/ethersphere/swarm/pull/1557): cmd/swarm: allow using a network interface by name for nat purposes
* [#1534](https://github.com/ethersphere/swarm/pull/1534): api, network: count chunk deliveries per peer
* [#1537](https://github.com/ethersphere/swarm/pull/1537): swarm: fix bzz_info.port when using dynamic port allocation
* [#1531](https://github.com/ethersphere/swarm/pull/1531): cmd/swarm: make bzzaccount flag optional and add bzzkeyhex flag
* [#1536](https://github.com/ethersphere/swarm/pull/1536): cmd/swarm: use only one function to parse flags
* [#1530](https://github.com/ethersphere/swarm/pull/1530): network/bitvector: Multibit set/unset + string rep
* [#1555](https://github.com/ethersphere/swarm/pull/1555): PoC: Network simulation framework

## v0.4.2 (28 June 2019)
## v0.4.2 (June 28, 2019)

### Notes

This release is not backward compatible with previous versions of Swarm due to changes to the wire protocol of the Retrieve Request messages. Please update your nodes.

### Bug fixes and Improvements
### Bug fixes and improvements

* [#1503](https://github.com/ethersphere/swarm/pull/1503): network/simulation: add ExecAdapter capability to swarm simulations
* [#1495](https://github.com/ethersphere/swarm/pull/1495): build: enable ubuntu ppa disco (19.04) builds

@@ -10,5 +10,4 @@ FROM alpine:3.9
RUN apk --no-cache add ca-certificates && update-ca-certificates
COPY --from=builder /swarm/build/bin/swarm /usr/local/bin/
COPY --from=geth /usr/local/bin/geth /usr/local/bin/
COPY docker/run.sh /run.sh
ENTRYPOINT ["/run.sh"]
ENTRYPOINT ["/usr/local/bin/swarm"]

@@ -10,6 +10,5 @@ FROM alpine:3.9
RUN apk --no-cache add ca-certificates
COPY --from=builder /swarm/build/bin/* /usr/local/bin/
COPY --from=geth /usr/local/bin/geth /usr/local/bin/
COPY docker/run.sh /run.sh
COPY docker/run-smoke.sh /run-smoke.sh
ENTRYPOINT ["/run.sh"]
ENTRYPOINT ["/usr/local/bin/swarm"]

79

README.md

@@ -9,26 +9,25 @@ Swarm is a distributed storage platform and content distribution service, a nati

## Table of Contents <!-- omit in toc -->

- [Building the source](#building-the-source)
- [Running Swarm](#running-swarm)
- [Verifying that your local Swarm node is running](#verifying-that-your-local-swarm-node-is-running)
- [Ethereum Name Service resolution](#ethereum-name-service-resolution)
- [Documentation](#documentation)
- [Docker](#docker)
- [Docker tags](#docker-tags)
- [Environment variables](#environment-variables)
- [Swarm command line arguments](#swarm-command-line-arguments)
- [Developers Guide](#developers-guide)
- [Go Environment](#go-environment)
- [Vendored Dependencies](#vendored-dependencies)
- [Testing](#testing)
- [Profiling Swarm](#profiling-swarm)
- [Metrics and Instrumentation in Swarm](#metrics-and-instrumentation-in-swarm)
- [Visualizing metrics](#visualizing-metrics)
- [Public Gateways](#public-gateways)
- [Swarm Dapps](#swarm-dapps)
- [Contributing](#contributing)
- [License](#license)
- [Building the source](#Building-the-source)
- [Running Swarm](#Running-Swarm)
- [Verifying that your local Swarm node is running](#Verifying-that-your-local-Swarm-node-is-running)
- [Ethereum Name Service resolution](#Ethereum-Name-Service-resolution)
- [Documentation](#Documentation)
- [Docker](#Docker)
- [Docker tags](#Docker-tags)
- [Swarm command line arguments](#Swarm-command-line-arguments)
- [Developers Guide](#Developers-Guide)
- [Go Environment](#Go-Environment)
- [Vendored Dependencies](#Vendored-Dependencies)
- [Testing](#Testing)
- [Profiling Swarm](#Profiling-Swarm)
- [Metrics and Instrumentation in Swarm](#Metrics-and-Instrumentation-in-Swarm)
- [Visualizing metrics](#Visualizing-metrics)
- [Public Gateways](#Public-Gateways)
- [Swarm Dapps](#Swarm-Dapps)
- [Contributing](#Contributing)
- [License](#License)

## Building the source

@@ -53,15 +52,11 @@ $ go install github.com/ethersphere/swarm/cmd/swarm

## Running Swarm

Going through all the possible command line flags is out of scope here, but we've enumerated a few common parameter combos to get you up to speed quickly on how you can run your own Swarm node.

To run Swarm you need an Ethereum account. Download and install [Geth](https://geth.ethereum.org) if you don't have it on your system. You can create a new Ethereum account by running the following command:

```bash
$ geth account new
$ swarm
```

You will be prompted for a password:
If you don't have an account yet, then you will be prompted to create one and secure it with a password:

```
Your new account is locked with a password. Please give a password. Do not forget this password.
@@ -69,18 +64,12 @@ Passphrase:
Repeat passphrase:
```

Once you have specified the password, the output will be the Ethereum address representing that account. For example:

```
Address: {2f1cd699b0bf461dcfbf0098ad8f5587b038f0f1}
```

Using this account, connect to Swarm with
If you have multiple accounts created, then you'll have to choose one of the accounts by using the `--bzzaccount` flag.

```bash
$ swarm --bzzaccount <your-account-here>

# in our example
# example
$ swarm --bzzaccount 2f1cd699b0bf461dcfbf0098ad8f5587b038f0f1
```

@@ -119,11 +108,6 @@ Swarm container images are available at Docker Hub: [ethersphere/swarm](https://
* `edge` - latest build from `master`
* `v0.x.y` - specific stable release

### Environment variables

* `PASSWORD` - *required* - Used to set up a sample Ethereum account in the data directory. If a data directory is mounted with a volume, the first Ethereum account from it is loaded, and Swarm will try to decrypt it non-interactively with `PASSWORD`.
* `DATADIR` - *optional* - Defaults to `/root/.ethereum`

### Swarm command line arguments

All Swarm command line arguments are supported and can be sent as part of the CMD field to the Docker container.
@@ -133,15 +117,16 @@ All Swarm command line arguments are supported and can be sent as part of the CM
Running a Swarm container from the command line

```bash
$ docker run -e PASSWORD=password123 -t ethersphere/swarm \
$ docker run -it ethersphere/swarm \
--debug \
--verbosity 4
```


Running a Swarm container with custom ENS endpoint

```bash
$ docker run -e PASSWORD=password123 -t ethersphere/swarm \
$ docker run -it ethersphere/swarm \
--ens-api http://1.2.3.4:8545 \
--debug \
--verbosity 4
@@ -150,7 +135,7 @@ $ docker run -e PASSWORD=password123 -t ethersphere/swarm \
Running a Swarm container with metrics enabled

```bash
$ docker run -e PASSWORD=password123 -t ethersphere/swarm \
$ docker run -it ethersphere/swarm \
--debug \
--metrics \
--metrics.influxdb.export \
@@ -165,7 +150,7 @@ $ docker run -e PASSWORD=password123 -t ethersphere/swarm \
Running a Swarm container with tracing and pprof server enabled

```bash
$ docker run -e PASSWORD=password123 -t ethersphere/swarm \
$ docker run -it ethersphere/swarm \
--debug \
--tracing \
--tracing.endpoint 127.0.0.1:6831 \
@@ -175,10 +160,14 @@ $ docker run -e PASSWORD=password123 -t ethersphere/swarm \
--pprofport 6060
```

Running a Swarm container with custom data directory mounted from a volume
Running a Swarm container with a custom data directory mounted from a volume and a password file to unlock the Swarm account

```bash
$ docker run -e DATADIR=/data -e PASSWORD=password123 -v /tmp/hostdata:/data -t ethersphere/swarm \
$ docker run -it -v $PWD/hostdata:/data \
-v $PWD/password:/password \
ethersphere/swarm \
--datadir /data \
--password /password \
--debug \
--verbosity 4
```

@@ -39,19 +39,16 @@ func NewInspector(api *API, hive *network.Hive, netStore *storage.NetStore) *Ins
}

// Hive prints the kademlia table
func (inspector *Inspector) Hive() string {
return inspector.hive.String()
func (i *Inspector) Hive() string {
return i.hive.String()
}

func (inspector *Inspector) ListKnown() []string {
res := []string{}
for _, v := range inspector.hive.Kademlia.ListKnown() {
res = append(res, fmt.Sprintf("%v", v))
}
return res
// KademliaInfo returns structured output of the Kademlia state that we can check for equality
func (i *Inspector) KademliaInfo() network.KademliaInfo {
return i.hive.KademliaInfo()
}
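The KademliaInfo comment says the structured result can be checked for equality. The text produced by Hive() cannot be compared that way without parsing it first. The sketch below illustrates the idea. It assumes a hypothetical `kademliaSnapshot` struct, because the real `network.KademliaInfo` fields are not shown in this diff.

```go
package main

import (
	"fmt"
	"reflect"
)

// kademliaSnapshot is a hypothetical stand-in for network.KademliaInfo;
// the field names are illustrative only.
type kademliaSnapshot struct {
	Self        string
	Depth       int
	Connections [][]string // connected peers, grouped by proximity bin
}

// sameTopology reports whether two snapshots describe the same table.
// reflect.DeepEqual walks nested slices element by element, so no parsing
// of a human-readable table is required.
func sameTopology(a, b kademliaSnapshot) bool {
	return reflect.DeepEqual(a, b)
}

func main() {
	a := kademliaSnapshot{Self: "2f1c", Depth: 1, Connections: [][]string{{"8a01"}, {"4b02"}}}
	b := kademliaSnapshot{Self: "2f1c", Depth: 1, Connections: [][]string{{"8a01"}, {"4b02"}}}
	c := kademliaSnapshot{Self: "2f1c", Depth: 2, Connections: [][]string{{"8a01"}, {"4b02"}}}
	fmt.Println(sameTopology(a, b), sameTopology(a, c)) // true false
}
```

The slice field makes the struct non-comparable with `==`, which is why the sketch uses `reflect.DeepEqual`.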

func (inspector *Inspector) IsSyncing() bool {
func (i *Inspector) IsPullSyncing() bool {
lastReceivedChunksMsg := metrics.GetOrRegisterGauge("network.stream.received_chunks", nil)

// last received chunks msg time
@@ -63,13 +60,30 @@ func (inspector *Inspector) IsSyncing() bool {
return lrct.After(time.Now().Add(-15 * time.Second))
}

// DeliveriesPerPeer returns, for each connected peer, the number of chunks received from it
func (i *Inspector) DeliveriesPerPeer() map[string]int64 {
res := map[string]int64{}

// iterate over connections in kademlia
i.hive.Kademlia.EachConn(nil, 255, func(p *network.Peer, po int) bool {
// get how many chunks we receive for retrieve requests per peer
peermetric := fmt.Sprintf("chunk.delivery.%x", p.Over()[:16])

res[fmt.Sprintf("%x", p.Over()[:16])] = metrics.GetOrRegisterCounter(peermetric, nil).Count()

return true
})

return res
}

// Has checks whether each chunk address is present in the underlying datastore;
// the returned string holds one character per address: '1' if the underlying
// datastore has the chunk stored with the given address, '0' if not
func (inspector *Inspector) Has(chunkAddresses []storage.Address) string {
func (i *Inspector) Has(chunkAddresses []storage.Address) string {
hostChunks := []string{}
for _, addr := range chunkAddresses {
has, err := inspector.netStore.Has(context.Background(), addr)
has, err := i.netStore.Has(context.Background(), addr)
if err != nil {
log.Error(err.Error())
}

81

client/bzz.go

Normal file

@@ -0,0 +1,81 @@
package client

import (
	"github.com/ethereum/go-ethereum/rpc"
	"github.com/ethersphere/swarm"
	"github.com/ethersphere/swarm/log"
	"github.com/ethersphere/swarm/storage"
)

type Bzz struct {
	client *rpc.Client
}

// NewBzz is a constructor for a Bzz API
func NewBzz(client *rpc.Client) *Bzz {
	return &Bzz{
		client: client,
	}
}

// GetChunksBitVector returns a bit vector of presence for a given slice of chunks
func (b *Bzz) GetChunksBitVector(addrs []storage.Address) (string, error) {
	var hostChunks string
	const trackChunksPageSize = 7500

	for len(addrs) > 0 {
		var pageChunks string
		// get current page size, so that we avoid a slice out of bounds on the last page
		pagesize := trackChunksPageSize
		if len(addrs) < trackChunksPageSize {
			pagesize = len(addrs)
		}

		err := b.client.Call(&pageChunks, "bzz_has", addrs[:pagesize])
		if err != nil {
			return "", err
		}
		hostChunks += pageChunks
		addrs = addrs[pagesize:]
	}

	return hostChunks, nil
}

// GetBzzAddr returns the bzzAddr of the node
func (b *Bzz) GetBzzAddr() (string, error) {
	var info swarm.Info

	err := b.client.Call(&info, "bzz_info")
	if err != nil {
		return "", err
	}

	return info.BzzKey[2:], nil
}

// IsPullSyncing checks whether the node is still receiving chunk deliveries due to pull syncing
func (b *Bzz) IsPullSyncing() (bool, error) {
	var isSyncing bool

	err := b.client.Call(&isSyncing, "bzz_isPullSyncing")
	if err != nil {
		log.Error("error calling host for isPullSyncing", "err", err)
		return false, err
	}

	return isSyncing, nil
}

// IsPushSynced checks if the given `tag` is done syncing, i.e. we've received receipts for all chunks
func (b *Bzz) IsPushSynced(tagname string) (bool, error) {
	var isSynced bool

	err := b.client.Call(&isSynced, "bzz_isPushSynced", tagname)
	if err != nil {
		log.Error("error calling host for isPushSynced", "err", err)
		return false, err
	}

	return isSynced, nil
}

@@ -37,22 +37,23 @@ var (
)

var (
allhosts string
hosts []string
filesize int
syncDelay bool
inputSeed int
httpPort int
wsPort int
verbosity int
timeout int
single bool
onlyUpload bool
debug bool
allhosts string
hosts []string
filesize int
syncDelay bool
pushsyncDelay bool
syncMode string
inputSeed int
httpPort int
wsPort int
verbosity int
timeout int
single bool
onlyUpload bool
debug bool
)

func main() {

app := cli.NewApp()
app.Name = "smoke-test"
app.Usage = ""
@@ -88,6 +89,17 @@ func main() {
Usage: "file size for generated random file in KB",
Destination: &filesize,
},
cli.StringFlag{
Name: "sync-mode",
Value: "pullsync",
Usage: "sync mode - pushsync or pullsync or both",
Destination: &syncMode,
},
cli.BoolFlag{
Name: "pushsync-delay",
Usage: "wait for content to be push synced",
Destination: &pushsyncDelay,
},
cli.BoolFlag{
Name: "sync-delay",
Usage: "wait for content to be synced",

@@ -24,7 +24,6 @@ import (
"io/ioutil"
"math/rand"
"os"
"strings"
"sync"
"sync/atomic"
"time"
@@ -33,8 +32,11 @@ import (
"github.com/ethereum/go-ethereum/metrics"
"github.com/ethereum/go-ethereum/rpc"
"github.com/ethersphere/swarm/chunk"
"github.com/ethersphere/swarm/client"
"github.com/ethersphere/swarm/storage"
"github.com/ethersphere/swarm/testutil"
"github.com/pborman/uuid"
"golang.org/x/sync/errgroup"

cli "gopkg.in/urfave/cli.v1"
)
@@ -116,14 +118,16 @@ func trackChunks(testData []byte, submitMetrics bool) error {
return
}

hostChunks, err := getChunksBitVectorFromHost(rpcClient, addrs)
bzzClient := client.NewBzz(rpcClient)

hostChunks, err := bzzClient.GetChunksBitVector(addrs)
if err != nil {
log.Error("error getting chunks bit vector from host", "err", err, "host", httpHost)
hasErr = true
return
}

bzzAddr, err := getBzzAddrFromHost(rpcClient)
bzzAddr, err := bzzClient.GetBzzAddr()
if err != nil {
log.Error("error getting bzz addrs from host", "err", err, "host", httpHost)
hasErr = true

@@ -175,46 +179,6 @@ func trackChunks(testData []byte, submitMetrics bool) error {
return nil
}

// getChunksBitVectorFromHost returns a bit vector of presence for a given slice of chunks from a given host
func getChunksBitVectorFromHost(client *rpc.Client, addrs []storage.Address) (string, error) {
var hostChunks string
const trackChunksPageSize = 7500

for len(addrs) > 0 {
var pageChunks string
// get current page size, so that we avoid a slice out of bounds on the last page
pagesize := trackChunksPageSize
if len(addrs) < trackChunksPageSize {
pagesize = len(addrs)
}

err := client.Call(&pageChunks, "bzz_has", addrs[:pagesize])
if err != nil {
return "", err
}
hostChunks += pageChunks
addrs = addrs[pagesize:]
}

return hostChunks, nil
}

// getBzzAddrFromHost returns the bzzAddr for a given host
func getBzzAddrFromHost(client *rpc.Client) (string, error) {
var hive string

err := client.Call(&hive, "bzz_hive")
if err != nil {
return "", err
}

// we make an ugly assumption about the output format of the hive.String() method
// ideally we should replace this with an API call that returns the bzz addr for a given host,
// but this also works for now (provided we don't change the hive.String() method, which we haven't in some time)
ss := strings.Split(strings.Split(hive, "\n")[3], " ")
return ss[len(ss)-1], nil
}

// checkChunksVsMostProxHosts is checking:
// 1. whether a chunk has been found at fewer than 2 hosts. Considering our NN size, this should not happen.
// 2. if a chunk is not found at its closest node. This should also not happen.

@@ -232,7 +196,7 @@ func checkChunksVsMostProxHosts(addrs []storage.Address, allHostChunks map[strin
for i := range addrs {
var foundAt int
maxProx := -1
var maxProxHost string
var maxProxHosts []string
for host := range allHostChunks {
if allHostChunks[host][i] == '1' {
foundAt++
@@ -247,19 +211,43 @@ func checkChunksVsMostProxHosts(addrs []storage.Address, allHostChunks map[strin
prox := chunk.Proximity(addrs[i], ba)
if prox > maxProx {
maxProx = prox
maxProxHost = host
maxProxHosts = []string{host}
} else if prox == maxProx {
maxProxHosts = append(maxProxHosts, host)
}
}

if allHostChunks[maxProxHost][i] == '0' {
log.Error("chunk not found at max prox host", "ref", addrs[i], "host", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
} else {
log.Trace("chunk present at max prox host", "ref", addrs[i], "host", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
log.Debug("sync mode", "sync mode", syncMode)

if syncMode == "pullsync" || syncMode == "both" {
for _, maxProxHost := range maxProxHosts {
if allHostChunks[maxProxHost][i] == '0' {
log.Error("chunk not found at max prox host", "ref", addrs[i], "host", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
} else {
log.Trace("chunk present at max prox host", "ref", addrs[i], "host", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
}
}

// if chunk found at fewer than 2 hosts, which is less than the min size of a NN
if foundAt < 2 {
log.Error("chunk found at less than two hosts", "foundAt", foundAt, "ref", addrs[i])
}
}

// if chunk found at fewer than 2 hosts
if foundAt < 2 {
log.Error("chunk found at less than two hosts", "foundAt", foundAt, "ref", addrs[i])
if syncMode == "pushsync" {
var found bool
for _, maxProxHost := range maxProxHosts {
if allHostChunks[maxProxHost][i] == '1' {
found = true
log.Trace("chunk present at max prox host", "ref", addrs[i], "host", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
}
}

if !found {
for _, maxProxHost := range maxProxHosts {
log.Error("chunk not found at any max prox host", "ref", addrs[i], "hosts", maxProxHost, "bzzAddr", bzzAddrs[maxProxHost])
}
}
}
}
}
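The new checkChunksVsMostProxHosts keeps every host tied for the highest proximity, not a single maxProxHost. In pushsync mode it then accepts a chunk if at least one of those hosts stores it. The sketch below reproduces that logic. `proximity` is a simplified stand-in for `chunk.Proximity` that counts shared leading bits; the real function may also cap the result. The helper names are hypothetical.

```go
package main

import (
	"fmt"
	"math/bits"
	"sort"
)

// proximity returns the number of leading bits that a and b share.
// Simplified stand-in for chunk.Proximity, which may also cap the result.
func proximity(a, b []byte) int {
	n := len(a)
	if len(b) < n {
		n = len(b)
	}
	for i := 0; i < n; i++ {
		if x := a[i] ^ b[i]; x != 0 {
			return i*8 + bits.LeadingZeros8(x)
		}
	}
	return n * 8
}

// maxProxHosts returns every host whose address is closest to addr,
// keeping ties instead of picking a single host.
func maxProxHosts(addr []byte, hostAddrs map[string][]byte) []string {
	best := -1
	var hosts []string
	for host, ba := range hostAddrs {
		prox := proximity(addr, ba)
		if prox > best {
			best = prox
			hosts = []string{host}
		} else if prox == best {
			hosts = append(hosts, host)
		}
	}
	sort.Strings(hosts) // map iteration order is random; sort for stable output
	return hosts
}

// storedAtAnyMaxProx mirrors the pushsync branch: chunk i passes if at least
// one of the closest hosts has '1' at position i of its bit vector.
func storedAtAnyMaxProx(i int, hosts []string, bitVectors map[string]string) bool {
	for _, h := range hosts {
		if bitVectors[h][i] == '1' {
			return true
		}
	}
	return false
}

func main() {
	addr := []byte{0xA0} // 1010 0000
	hostAddrs := map[string][]byte{
		"h1": {0xB0}, // 1011 0000: shares 3 leading bits
		"h2": {0xBF}, // 1011 1111: shares 3 leading bits
		"h3": {0x00}, // 0000 0000: shares 0 leading bits
	}
	hosts := maxProxHosts(addr, hostAddrs)
	fmt.Println(hosts)                                                                   // [h1 h2]
	fmt.Println(storedAtAnyMaxProx(0, hosts, map[string]string{"h1": "0", "h2": "1"})) // true
}
```

The pullsync branch is stricter: it logs an error for each closest host that is missing the chunk.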

@@ -284,7 +272,8 @@ func uploadAndSync(c *cli.Context, randomBytes []byte) error {
log.Info("uploading to "+httpEndpoint(hosts[0])+" and syncing", "seed", seed)

t1 := time.Now()
hash, err := upload(randomBytes, httpEndpoint(hosts[0]))
tag := uuid.New()[:8]
hash, err := uploadWithTag(randomBytes, httpEndpoint(hosts[0]), tag)
if err != nil {
log.Error(err.Error())
return err
@@ -300,6 +289,11 @@ func uploadAndSync(c *cli.Context, randomBytes []byte) error {

log.Info("uploaded successfully", "hash", hash, "took", t2, "digest", fmt.Sprintf("%x", fhash))

// wait for push sync
if pushsyncDelay {
waitToPushSynced(tag)
}

// wait to sync and log chunks before fetch attempt, only if syncDelay is set to true
if syncDelay {
waitToSync()
@@ -338,27 +332,31 @@ func uploadAndSync(c *cli.Context, randomBytes []byte) error {
return nil
}

func isSyncing(wsHost string) (bool, error) {
rpcClient, err := rpc.Dial(wsHost)
if rpcClient != nil {
defer rpcClient.Close()
func waitToPushSynced(tagname string) {
for {
time.Sleep(200 * time.Millisecond)

rpcClient, err := rpc.Dial(wsEndpoint(hosts[0]))
if rpcClient != nil {
defer rpcClient.Close()
}
if err != nil {
log.Error("error dialing host", "err", err)
continue
}

bzzClient := client.NewBzz(rpcClient)

synced, err := bzzClient.IsPushSynced(tagname)
if err != nil {
log.Error(err.Error())
continue
}

if synced {
return
}
}

if err != nil {
log.Error("error dialing host", "err", err)
return false, err
}

var isSyncing bool
err = rpcClient.Call(&isSyncing, "bzz_isSyncing")
if err != nil {
log.Error("error calling host for isSyncing", "err", err)
return false, err
}

log.Debug("isSyncing result", "host", wsHost, "isSyncing", isSyncing)

return isSyncing, nil
}

func waitToSync() {
@@ -370,22 +368,39 @@ func waitToSync() {
time.Sleep(3 * time.Second)

notSynced := uint64(0)
var wg sync.WaitGroup
wg.Add(len(hosts))

var g errgroup.Group
for i := 0; i < len(hosts); i++ {
i := i
go func(idx int) {
stillSyncing, err := isSyncing(wsEndpoint(hosts[idx]))
g.Go(func() error {
rpcClient, err := rpc.Dial(wsEndpoint(hosts[i]))
if rpcClient != nil {
defer rpcClient.Close()
}
if err != nil {
log.Error("error dialing host", "err", err)
return err
}

if stillSyncing || err != nil {
bzzClient := client.NewBzz(rpcClient)

stillSyncing, err := bzzClient.IsPullSyncing()
if err != nil {
return err
}

if stillSyncing {
atomic.AddUint64(&notSynced, 1)
}
wg.Done()
}(i)
}
wg.Wait()

ns = atomic.LoadUint64(&notSynced)
return nil
})
}

// Wait for all RPC calls to complete.
if err := g.Wait(); err == nil {
ns = atomic.LoadUint64(&notSynced)
}
}

t2 := time.Since(t1)

@@ -38,6 +38,7 @@ import (
"github.com/ethersphere/swarm/api/client"
"github.com/ethersphere/swarm/spancontext"
opentracing "github.com/opentracing/opentracing-go"
"github.com/pborman/uuid"
cli "gopkg.in/urfave/cli.v1"
)

@@ -193,8 +194,13 @@ func fetch(hash string, endpoint string, original []byte, ruid string) error {
return nil
}

// upload an arbitrary byte as a plaintext file to `endpoint` using the api client
// upload an arbitrary byte as a plaintext file to `endpoint` using the api client
func upload(data []byte, endpoint string) (string, error) {
return uploadWithTag(data, endpoint, uuid.New()[:8])
}

// uploadWithTag uploads arbitrary bytes as a plaintext file to `endpoint` using the api client, with the given tag
func uploadWithTag(data []byte, endpoint string, tag string) (string, error) {
swarm := client.NewClient(endpoint)
f := &client.File{
ReadCloser: ioutil.NopCloser(bytes.NewReader(data)),
@@ -203,10 +209,10 @@ func upload(data []byte, endpoint string) (string, error) {
Mode: 0660,
Size: int64(len(data)),
},
Tag: tag,
}

// upload data to bzz:// and retrieve the content-addressed manifest hash, hex-encoded.
return swarm.Upload(f, "", false)
return swarm.TarUpload("", &client.FileUploader{f}, "", false)
}

func digest(r io.Reader) ([]byte, error) {

@@ -106,7 +106,7 @@ func accessNewPass(ctx *cli.Context) {
accessKey []byte
err error
ref = args[0]
password = getPassPhrase("", 0, makePasswordList(ctx))
password = getPassPhrase("", false, 0, makePasswordList(ctx))
dryRun = ctx.Bool(SwarmDryRunFlag.Name)
)
accessKey, ae, err = api.DoPassword(ctx, password, salt)

@@ -17,6 +17,7 @@
package main

import (
"crypto/ecdsa"
"errors"
"fmt"
"io"
@@ -24,7 +25,6 @@ import (
"reflect"
"strconv"
"strings"
"time"
"unicode"

cli "gopkg.in/urfave/cli.v1"
@@ -61,6 +61,7 @@ var (
const (
SwarmEnvChequebookAddr = "SWARM_CHEQUEBOOK_ADDR"
SwarmEnvAccount = "SWARM_ACCOUNT"
SwarmEnvBzzKeyHex = "SWARM_BZZ_KEY_HEX"
SwarmEnvListenAddr = "SWARM_LISTEN_ADDR"
SwarmEnvPort = "SWARM_PORT"
SwarmEnvNetworkID = "SWARM_NETWORK_ID"
@@ -80,6 +81,7 @@ const (
SwarmEnvStoreCapacity = "SWARM_STORE_CAPACITY"
SwarmEnvStoreCacheCapacity = "SWARM_STORE_CACHE_CAPACITY"
SwarmEnvBootnodeMode = "SWARM_BOOTNODE_MODE"
SwarmEnvNATInterface = "SWARM_NAT_INTERFACE"
SwarmAccessPassword = "SWARM_ACCESS_PASSWORD"
SwarmAutoDefaultPath = "SWARM_AUTO_DEFAULTPATH"
SwarmGlobalstoreAPI = "SWARM_GLOBALSTORE_API"
@@ -112,10 +114,8 @@ func buildConfig(ctx *cli.Context) (config *bzzapi.Config, err error) {
if err != nil {
return nil, err
}
//override settings provided by environment variables
config = envVarsOverride(config)
//override settings provided by command line
config = cmdLineOverride(config, ctx)
//override settings provided by flags
config = flagsOverride(config, ctx)
//validate configuration parameters
err = validateConfig(config)

@@ -124,9 +124,9 @@ func buildConfig(ctx *cli.Context) (config *bzzapi.Config, err error) {

//finally, after the configuration build phase is finished, initialize
func initSwarmNode(config *bzzapi.Config, stack *node.Node, ctx *cli.Context, nodeconfig *node.Config) error {
//at this point, all vars should be set in the Config
//get the account for the provided swarm account
prvkey := getAccount(config.BzzAccount, ctx, stack)
var prvkey *ecdsa.PrivateKey
config.BzzAccount, prvkey = getOrCreateAccount(ctx, stack)
//set the resolved config path (geth --datadir)
config.Path = expandPath(stack.InstanceDir())
//finally, initialize the configuration
@@ -170,9 +170,9 @@ func configFileOverride(config *bzzapi.Config, ctx *cli.Context) (*bzzapi.Config
return config, err
}

// cmdLineOverride overrides the current config with whatever is provided through the command line
// flagsOverride overrides the current config with whatever is provided through flags (cli or env vars)
// most values are not allowed a zero value (empty string), if not otherwise noted
func cmdLineOverride(currentConfig *bzzapi.Config, ctx *cli.Context) *bzzapi.Config {
func flagsOverride(currentConfig *bzzapi.Config, ctx *cli.Context) *bzzapi.Config {
if keyid := ctx.GlobalString(SwarmAccountFlag.Name); keyid != "" {
currentConfig.BzzAccount = keyid
}

|

||||

@ -275,122 +275,6 @@ func cmdLineOverride(currentConfig *bzzapi.Config, ctx *cli.Context) *bzzapi.Con

|

||||

|

||||

}

|

||||

|

||||

// envVarsOverride overrides the current config with whatver is provided in environment variables

|

||||

// most values are not allowed a zero value (empty string), if not otherwise noted

|

||||

func envVarsOverride(currentConfig *bzzapi.Config) (config *bzzapi.Config) {

|

||||

if keyid := os.Getenv(SwarmEnvAccount); keyid != "" {

|

||||

currentConfig.BzzAccount = keyid

|

||||

}

|

||||

|

||||

if chbookaddr := os.Getenv(SwarmEnvChequebookAddr); chbookaddr != "" {

|

||||

currentConfig.Contract = common.HexToAddress(chbookaddr)

|

||||

}

|

||||

|

||||

if networkid := os.Getenv(SwarmEnvNetworkID); networkid != "" {

|

||||

id, err := strconv.ParseUint(networkid, 10, 64)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvNetworkID, err)

|

||||

}

|

||||

if id != 0 {

|

||||

currentConfig.NetworkID = id

|

||||

}

|

||||

}

|

||||

|

||||

if datadir := os.Getenv(GethEnvDataDir); datadir != "" {

|

||||

currentConfig.Path = expandPath(datadir)

|

||||

}

|

||||

|

||||

bzzport := os.Getenv(SwarmEnvPort)

|

||||

if len(bzzport) > 0 {

|

||||

currentConfig.Port = bzzport

|

||||

}

|

||||

|

||||

if bzzaddr := os.Getenv(SwarmEnvListenAddr); bzzaddr != "" {

|

||||

currentConfig.ListenAddr = bzzaddr

|

||||

}

|

||||

|

||||

if swapenable := os.Getenv(SwarmEnvSwapEnable); swapenable != "" {

|

||||

swap, err := strconv.ParseBool(swapenable)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvSwapEnable, err)

|

||||

}

|

||||

currentConfig.SwapEnabled = swap

|

||||

}

|

||||

|

||||

if syncdisable := os.Getenv(SwarmEnvSyncDisable); syncdisable != "" {

|

||||

sync, err := strconv.ParseBool(syncdisable)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvSyncDisable, err)

|

||||

}

|

||||

currentConfig.SyncEnabled = !sync

|

||||

}

|

||||

|

||||

if v := os.Getenv(SwarmEnvDeliverySkipCheck); v != "" {

|

||||

skipCheck, err := strconv.ParseBool(v)

|

||||

if err != nil {

|

||||

currentConfig.DeliverySkipCheck = skipCheck

|

||||

}

|

||||

}

|

||||

|

||||

if v := os.Getenv(SwarmEnvSyncUpdateDelay); v != "" {

|

||||

d, err := time.ParseDuration(v)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvSyncUpdateDelay, err)

|

||||

}

|

||||

currentConfig.SyncUpdateDelay = d

|

||||

}

|

||||

|

||||

if max := os.Getenv(SwarmEnvMaxStreamPeerServers); max != "" {

|

||||

m, err := strconv.Atoi(max)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvMaxStreamPeerServers, err)

|

||||

}

|

||||

currentConfig.MaxStreamPeerServers = m

|

||||

}

|

||||

|

||||

if lne := os.Getenv(SwarmEnvLightNodeEnable); lne != "" {

|

||||

lightnode, err := strconv.ParseBool(lne)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvLightNodeEnable, err)

|

||||

}

|

||||

currentConfig.LightNodeEnabled = lightnode

|

||||

}

|

||||

|

||||

if swapapi := os.Getenv(SwarmEnvSwapAPI); swapapi != "" {

|

||||

currentConfig.SwapAPI = swapapi

|

||||

}

|

||||

|

||||

if currentConfig.SwapEnabled && currentConfig.SwapAPI == "" {

|

||||

utils.Fatalf(SwarmErrSwapSetNoAPI)

|

||||

}

|

||||

|

||||

if ensapi := os.Getenv(SwarmEnvENSAPI); ensapi != "" {

|

||||

currentConfig.EnsAPIs = strings.Split(ensapi, ",")

|

||||

}

|

||||

|

||||

if ensaddr := os.Getenv(SwarmEnvENSAddr); ensaddr != "" {

|

||||

currentConfig.EnsRoot = common.HexToAddress(ensaddr)

|

||||

}

|

||||

|

||||

if cors := os.Getenv(SwarmEnvCORS); cors != "" {

|

||||

currentConfig.Cors = cors

|

||||

}

|

||||

|

||||

if bm := os.Getenv(SwarmEnvBootnodeMode); bm != "" {

|

||||

bootnodeMode, err := strconv.ParseBool(bm)

|

||||

if err != nil {

|

||||

utils.Fatalf("invalid environment variable %s: %v", SwarmEnvBootnodeMode, err)

|

||||

}

|

||||

currentConfig.BootnodeMode = bootnodeMode

|

||||

}

|

||||

|

||||

if api := os.Getenv(SwarmGlobalstoreAPI); api != "" {

|

||||

currentConfig.GlobalStoreAPI = api

|

||||

}

|

||||

|

||||

return currentConfig

|

||||

}

|

||||

|

||||

// dumpConfig is the dumpconfig command.

|

||||

// writes a default config to STDOUT

|

||||

func dumpConfig(ctx *cli.Context) error {

|

||||

|
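Deleting `envVarsOverride` also removes a latent bug. Its `SwarmEnvDeliverySkipCheck` branch assigned `DeliverySkipCheck` only when `strconv.ParseBool` failed (`err != nil`). As a result, a valid value was ignored and an invalid one set the field to `false`. If an environment-variable parser like this is needed again, a minimal sketch of the intended behaviour follows. `boolFromEnv` and the variable name `EXAMPLE_SKIP_CHECK` are hypothetical, and the helper returns an error instead of calling `utils.Fatalf`.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
)

// boolFromEnv is a hypothetical helper. It returns current when the variable
// is unset or empty, the parsed value when it is a valid boolean, and an
// error otherwise. The value is applied only on err == nil, the check the
// removed DeliverySkipCheck branch had inverted.
func boolFromEnv(lookupEnv func(string) (string, bool), name string, current bool) (bool, error) {
	v, ok := lookupEnv(name)
	if !ok || v == "" {
		return current, nil
	}
	b, err := strconv.ParseBool(v)
	if err != nil {
		return current, fmt.Errorf("invalid environment variable %s: %v", name, err)
	}
	return b, nil
}

func main() {
	// EXAMPLE_SKIP_CHECK is an illustrative variable name, not taken from the diff.
	skip, err := boolFromEnv(os.LookupEnv, "EXAMPLE_SKIP_CHECK", false)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("skip check:", skip)
}
```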

```diff
@@ -17,6 +17,7 @@
 package main
 
 import (
+	"encoding/hex"
 	"fmt"
 	"io"
 	"io/ioutil"
@@ -28,6 +29,8 @@ import (
 
 	"github.com/docker/docker/pkg/reexec"
 	"github.com/ethereum/go-ethereum/cmd/utils"
+	"github.com/ethereum/go-ethereum/crypto"
+	"github.com/ethereum/go-ethereum/node"
 	"github.com/ethereum/go-ethereum/rpc"
 	"github.com/ethersphere/swarm"
 	"github.com/ethersphere/swarm/api"
@@ -48,6 +51,7 @@ func TestConfigFailsSwapEnabledNoSwapApi(t *testing.T) {
 	flags := []string{
 		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
 		fmt.Sprintf("--%s", SwarmPortFlag.Name), "54545",
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
 		fmt.Sprintf("--%s", SwarmSwapEnabledFlag.Name),
 	}
 
@@ -56,15 +60,161 @@ func TestConfigFailsSwapEnabledNoSwapApi(t *testing.T) {
 	swarm.ExpectExit()
 }
 
-func TestConfigFailsNoBzzAccount(t *testing.T) {
-	flags := []string{
-		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
-		fmt.Sprintf("--%s", SwarmPortFlag.Name), "54545",
+func TestBzzKeyFlag(t *testing.T) {
+	key, err := crypto.GenerateKey()
+	if err != nil {
+		t.Fatal("unable to generate key")
+	}
+	hexKey := hex.EncodeToString(crypto.FromECDSA(key))
+	bzzaccount := crypto.PubkeyToAddress(key.PublicKey).Hex()
+
+	dir, err := ioutil.TempDir("", "bzztest")
+	if err != nil {
+		t.Fatal(err)
+	}
+	defer os.RemoveAll(dir)
+
+	conf := &node.Config{
+		DataDir: dir,
+		IPCPath: "testbzzkeyflag.ipc",
+		NoUSB: true,
 	}
 
-	swarm := runSwarm(t, flags...)
-	swarm.Expect("Fatal: " + SwarmErrNoBZZAccount + "\n")
-	swarm.ExpectExit()
+	node := &testNode{Dir: dir}
+
+	flags := []string{
+		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
+		fmt.Sprintf("--%s", SwarmPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.DataDirFlag.Name), dir,
+		fmt.Sprintf("--%s", utils.IPCPathFlag.Name), conf.IPCPath,
+		fmt.Sprintf("--%s", SwarmBzzKeyHexFlag.Name), hexKey,
+	}
+
+	node.Cmd = runSwarm(t, flags...)
+	defer func() {
+		node.Shutdown()
+	}()
+
+	node.Cmd.InputLine(testPassphrase)
+
+	// wait for the node to start
+	for start := time.Now(); time.Since(start) < 10*time.Second; time.Sleep(50 * time.Millisecond) {
+		node.Client, err = rpc.Dial(conf.IPCEndpoint())
+		if err == nil {
+			break
+		}
+	}
+	if node.Client == nil {
+		t.Fatal(err)
+	}
+
+	// load info
+	var info swarm.Info
+	if err := node.Client.Call(&info, "bzz_info"); err != nil {
+		t.Fatal(err)
+	}
+
+	if info.BzzAccount != bzzaccount {
+		t.Fatalf("Expected account to be %s, got %s", bzzaccount, info.BzzAccount)
+	}
+}
+func TestEmptyBzzAccountFlagMultipleAccounts(t *testing.T) {
+	dir, err := ioutil.TempDir("", "bzztest")
+	if err != nil {
+		t.Fatal(err)
+	}
+	defer os.RemoveAll(dir)
+
+	// Create two accounts on disk
+	getTestAccount(t, dir)
+	getTestAccount(t, dir)
+
+	node := &testNode{Dir: dir}
+
+	flags := []string{
+		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
+		fmt.Sprintf("--%s", SwarmPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.DataDirFlag.Name), dir,
+	}
+
+	node.Cmd = runSwarm(t, flags...)
+
+	node.Cmd.ExpectRegexp(fmt.Sprintf("Please choose one of the accounts by running swarm with the --%s flag.", SwarmAccountFlag.Name))
+}
+
+func TestEmptyBzzAccountFlagSingleAccount(t *testing.T) {
+	dir, err := ioutil.TempDir("", "bzztest")
+	if err != nil {
+		t.Fatal(err)
+	}
+	defer os.RemoveAll(dir)
+
+	conf, account := getTestAccount(t, dir)
+	node := &testNode{Dir: dir}
+
+	flags := []string{
+		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
+		fmt.Sprintf("--%s", SwarmPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.DataDirFlag.Name), dir,
+		fmt.Sprintf("--%s", utils.IPCPathFlag.Name), conf.IPCPath,
+	}
+
+	node.Cmd = runSwarm(t, flags...)
+	defer func() {
+		node.Shutdown()
+	}()
+
+	node.Cmd.InputLine(testPassphrase)
+
+	// wait for the node to start
+	for start := time.Now(); time.Since(start) < 10*time.Second; time.Sleep(50 * time.Millisecond) {
+		node.Client, err = rpc.Dial(conf.IPCEndpoint())
+		if err == nil {
+			break
+		}
+	}
+	if node.Client == nil {
+		t.Fatal(err)
+	}
+
+	// load info
+	var info swarm.Info
+	if err := node.Client.Call(&info, "bzz_info"); err != nil {
+		t.Fatal(err)
+	}
+
+	if info.BzzAccount != account.Address.Hex() {
+		t.Fatalf("Expected account to be %s, got %s", account.Address.Hex(), info.BzzAccount)
+	}
+}
+
+func TestEmptyBzzAccountFlagNoAccountWrongPassword(t *testing.T) {
+	dir, err := ioutil.TempDir("", "bzztest")
+	if err != nil {
+		t.Fatal(err)
+	}
+	defer os.RemoveAll(dir)
+
+	node := &testNode{Dir: dir}
+
+	flags := []string{
+		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
+		fmt.Sprintf("--%s", SwarmPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
+		fmt.Sprintf("--%s", utils.DataDirFlag.Name), dir,
+	}
+
+	node.Cmd = runSwarm(t, flags...)
+
+	// Set password
+	node.Cmd.InputLine("goodpassword")
+	// Confirm password
+	node.Cmd.InputLine("wrongpassword")
+
+	node.Cmd.ExpectRegexp("Passphrases do not match")
 }
 
 func TestConfigCmdLineOverrides(t *testing.T) {
@@ -86,6 +236,7 @@ func TestConfigCmdLineOverrides(t *testing.T) {
 	flags := []string{
 		fmt.Sprintf("--%s", SwarmNetworkIdFlag.Name), "42",
 		fmt.Sprintf("--%s", SwarmPortFlag.Name), httpPort,
+		fmt.Sprintf("--%s", utils.ListenPortFlag.Name), "0",
 		fmt.Sprintf("--%s", SwarmSyncDisabledFlag.Name),
 		fmt.Sprintf("--%s", CorsStringFlag.Name), "*",
 		fmt.Sprintf("--%s", SwarmAccountFlag.Name), account.Address.String(),
```
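The new tests wait for the node by re-dialling its IPC endpoint every 50 ms for up to 10 seconds, then fail with the last dial error. The same retry-until-deadline pattern can be factored into a helper. The sketch below assumes the caller wraps something like `rpc.Dial(conf.IPCEndpoint())` in `try`; `waitUntil` and `errNotReady` are hypothetical names. Unlike the inline loop, the helper always makes at least one attempt, even with a zero timeout.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errNotReady is a hypothetical sentinel used by the demo below.
var errNotReady = errors.New("not ready")

// waitUntil calls try until it returns nil or timeout has elapsed, sleeping
// interval between attempts. It always makes at least one attempt and
// returns the last error once the deadline has passed.
func waitUntil(timeout, interval time.Duration, try func() error) error {
	deadline := time.Now().Add(timeout)
	for {
		err := try()
		if err == nil {
			return nil
		}
		if time.Now().After(deadline) {
			return err
		}
		time.Sleep(interval)
	}
}

func main() {
	attempts := 0
	err := waitUntil(time.Second, 10*time.Millisecond, func() error {
		attempts++
		if attempts < 3 {
			return errNotReady // e.g. the IPC socket does not exist yet
		}
		return nil
	})
	fmt.Println(attempts, err) // 3 <nil>
}
```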

```diff
@@ -101,7 +101,7 @@ func download(ctx *cli.Context) {
 		return nil
 	}
 	if passwords := makePasswordList(ctx); passwords != nil {
-		password := getPassPhrase(fmt.Sprintf("Downloading %s is restricted", uri), 0, passwords)
+		password := getPassPhrase(fmt.Sprintf("Downloading %s is restricted", uri), false, 0, passwords)
 		err = dl(password)
 	} else {
 		err = dl("")
```

```diff
@@ -30,6 +30,11 @@ var (
 		Usage: "Swarm account key file",
 		EnvVar: SwarmEnvAccount,
 	}
+	SwarmBzzKeyHexFlag = cli.StringFlag{
+		Name: "bzzkeyhex",
+		Usage: "BzzAccount key in hex (for testing)",
+		EnvVar: SwarmEnvBzzKeyHex,
+	}
 	SwarmListenAddrFlag = cli.StringFlag{
 		Name: "httpaddr",
 		Usage: "Swarm HTTP API listening interface",
```
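`--bzzkeyhex` (described as "for testing") passes the private key itself to `getOrCreateAccount`, which decodes it with `crypto.HexToECDSA`. `TestBzzKeyFlag` builds the value with `hex.EncodeToString(crypto.FromECDSA(key))`, i.e. 64 hex characters without a `0x` prefix. Command-line arguments and environment variables can be visible to other processes on the same host, so the flag suits test setups better than production nodes. Below is a standard-library sketch of the shape check such a value must pass. `decodeHexKey` is hypothetical and leaves curve-specific validation to `crypto.HexToECDSA`.

```go
package main

import (
	"encoding/hex"
	"fmt"
)

// decodeHexKey is a hypothetical pre-check: it accepts exactly 32 bytes of
// plain hex (64 characters, no 0x prefix) and returns the raw key bytes.
// It does not check that the bytes form a valid secp256k1 private key.
func decodeHexKey(s string) ([]byte, error) {
	b, err := hex.DecodeString(s)
	if err != nil {
		return nil, fmt.Errorf("not a hex string: %v", err)
	}
	if len(b) != 32 {
		return nil, fmt.Errorf("need 32 bytes, got %d", len(b))
	}
	return b, nil
}

func main() {
	key, err := decodeHexKey("4c0883a69102937d6231471b5dbb6204fe5129617082792ae468d01a3f362318")
	fmt.Println(len(key), err) // 32 <nil>
}
```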

```diff
@@ -40,6 +45,11 @@ var (
 		Usage: "Swarm local http api port",
 		EnvVar: SwarmEnvPort,
 	}
+	SwarmNATInterfaceFlag = cli.StringFlag{
+		Name: "natif",
+		Usage: "Announce the IP address of a given network interface (e.g. eth0)",
+		EnvVar: SwarmEnvNATInterface,
+	}
 	SwarmNetworkIdFlag = cli.IntFlag{
 		Name: "bzznetworkid",
 		Usage: "Network identifier (integer, default 3=swarm testnet)",
```

```diff
@@ -21,6 +21,7 @@ import (
 	"encoding/hex"
 	"fmt"
 	"io/ioutil"
+	"net"
 	"os"
 	"os/signal"
 	"runtime"
@@ -38,6 +39,7 @@ import (
 	"github.com/ethereum/go-ethereum/log"
 	"github.com/ethereum/go-ethereum/node"
 	"github.com/ethereum/go-ethereum/p2p/enode"
+	"github.com/ethereum/go-ethereum/p2p/nat"
 	"github.com/ethereum/go-ethereum/rpc"
 	"github.com/ethersphere/swarm"
 	bzzapi "github.com/ethersphere/swarm/api"
@@ -170,6 +172,7 @@ func init() {
 		utils.IPCDisabledFlag,
 		utils.IPCPathFlag,
 		utils.PasswordFileFlag,
+		SwarmNATInterfaceFlag,
 		// bzzd-specific flags
 		CorsStringFlag,
 		EnsAPIFlag,
@@ -184,6 +187,7 @@ func init() {
 		SwarmListenAddrFlag,
 		SwarmPortFlag,
 		SwarmAccountFlag,
+		SwarmBzzKeyHexFlag,
 		SwarmNetworkIdFlag,
 		ChequebookAddrFlag,
 		// upload flags
@@ -290,6 +294,9 @@ func bzzd(ctx *cli.Context) error {
 	//disable dynamic dialing from p2p/discovery
 	cfg.P2P.NoDial = true
 
+	//optionally set the NAT IP from a network interface
+	setSwarmNATFromInterface(ctx, &cfg)
+
 	stack, err := node.New(&cfg)
 	if err != nil {
 		utils.Fatalf("can't create node: %v", err)
```

```diff
@@ -351,21 +358,62 @@ func registerBzzService(bzzconfig *bzzapi.Config, stack *node.Node) {
 	}
 }
 
-func getAccount(bzzaccount string, ctx *cli.Context, stack *node.Node) *ecdsa.PrivateKey {
-	//an account is mandatory
-	if bzzaccount == "" {
-		utils.Fatalf(SwarmErrNoBZZAccount)
+// getOrCreateAccount returns the address and associated private key for a bzzaccount
+// If no account exists, it will create an account for you.
+func getOrCreateAccount(ctx *cli.Context, stack *node.Node) (string, *ecdsa.PrivateKey) {
+	var bzzaddr string
+
+	// Check if a key was provided
+	if hexkey := ctx.GlobalString(SwarmBzzKeyHexFlag.Name); hexkey != "" {
+		key, err := crypto.HexToECDSA(hexkey)
+		if err != nil {
+			utils.Fatalf("failed using %s: %v", SwarmBzzKeyHexFlag.Name, err)
+		}
+		bzzaddr := crypto.PubkeyToAddress(key.PublicKey).Hex()
+		log.Info(fmt.Sprintf("Swarm account key loaded from %s", SwarmBzzKeyHexFlag.Name), "address", bzzaddr)
+		return bzzaddr, key
 	}
-	// Try to load the arg as a hex key file.
-	if key, err := crypto.LoadECDSA(bzzaccount); err == nil {
-		log.Info("Swarm account key loaded", "address", crypto.PubkeyToAddress(key.PublicKey))
-		return key
-	}
-	// Otherwise try getting it from the keystore.
+
 	am := stack.AccountManager()
 	ks := am.Backends(keystore.KeyStoreType)[0].(*keystore.KeyStore)
 
-	return decryptStoreAccount(ks, bzzaccount, utils.MakePasswordList(ctx))
+	// Check if an address was provided
+	if bzzaddr = ctx.GlobalString(SwarmAccountFlag.Name); bzzaddr != "" {
+		// Try to load the arg as a hex key file.
+		if key, err := crypto.LoadECDSA(bzzaddr); err == nil {
+			bzzaddr := crypto.PubkeyToAddress(key.PublicKey).Hex()
+			log.Info("Swarm account key loaded", "address", bzzaddr)
+			return bzzaddr, key
+		}
+		return bzzaddr, decryptStoreAccount(ks, bzzaddr, utils.MakePasswordList(ctx))
+	}
+
+	// No address or key were provided
+	accounts := ks.Accounts()
+
+	switch l := len(accounts); l {
+	case 0:
+		// Create an account
+		log.Info("You don't have an account yet. Creating one...")
+		password := getPassPhrase("Your new account is locked with a password. Please give a password. Do not forget this password.", true, 0, utils.MakePasswordList(ctx))
+		account, err := ks.NewAccount(password)
+		if err != nil {
+			utils.Fatalf("failed creating an account: %v", err)
+		}
+		bzzaddr = account.Address.Hex()
+	case 1:
+		// Use existing account
+		bzzaddr = accounts[0].Address.Hex()
+	default:
+		// Inform user about multiple accounts
+		log.Info(fmt.Sprintf("Multiple (%d) accounts were found in your keystore.", l))
+		for _, a := range accounts {
+			log.Info(fmt.Sprintf("Account: %s", a.Address.Hex()))
+		}
+		utils.Fatalf(fmt.Sprintf("Please choose one of the accounts by running swarm with the --%s flag.", SwarmAccountFlag.Name))
+	}
+
+	return bzzaddr, decryptStoreAccount(ks, bzzaddr, utils.MakePasswordList(ctx))
 }
 
 // getPrivKey returns the private key of the specified bzzaccount
```
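When neither `--bzzkeyhex` nor `--bzzaccount` is given, `getOrCreateAccount` decides by the number of keystore accounts:

- With none, it creates one, prompting for a confirmed passphrase unless a password file supplies it.
- With exactly one, it uses that account.
- With several, it lists them and exits, asking for `--bzzaccount`.

The decision alone, without keystore access or prompts, might look like this. `chooseAccount` and its return values are a hypothetical sketch.

```go
package main

import (
	"fmt"
	"strings"
)

// chooseAccount is a hypothetical, I/O-free version of the switch in
// getOrCreateAccount. It reports whether a new account must be created,
// returns the single existing address, or errors when the choice is ambiguous.
func chooseAccount(addrs []string) (addr string, create bool, err error) {
	switch len(addrs) {
	case 0:
		return "", true, nil
	case 1:
		return addrs[0], false, nil
	default:
		return "", false, fmt.Errorf("multiple (%d) accounts found (%s); choose one with --bzzaccount",
			len(addrs), strings.Join(addrs, ", "))
	}
}

func main() {
	for _, ks := range [][]string{nil, {"0xaaaa"}, {"0xaaaa", "0xbbbb"}} {
		addr, create, err := chooseAccount(ks)
		fmt.Printf("addr=%q create=%v err=%v\n", addr, create, err)
	}
}
```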

```diff
@@ -387,7 +435,9 @@ func getPrivKey(ctx *cli.Context) *ecdsa.PrivateKey {
 	}
 	defer stack.Close()
 
-	return getAccount(bzzconfig.BzzAccount, ctx, stack)
+	var privkey *ecdsa.PrivateKey
+	bzzconfig.BzzAccount, privkey = getOrCreateAccount(ctx, stack)
+	return privkey
 }
 
 func decryptStoreAccount(ks *keystore.KeyStore, account string, passwords []string) *ecdsa.PrivateKey {
@@ -412,7 +462,7 @@ func decryptStoreAccount(ks *keystore.KeyStore, account string, passwords []stri
 		utils.Fatalf("Can't load swarm account key: %v", err)
 	}
 	for i := 0; i < 3; i++ {
-		password := getPassPhrase(fmt.Sprintf("Unlocking swarm account %s [%d/3]", a.Address.Hex(), i+1), i, passwords)
+		password := getPassPhrase(fmt.Sprintf("Unlocking swarm account %s [%d/3]", a.Address.Hex(), i+1), false, i, passwords)
 		key, err := keystore.DecryptKey(keyjson, password)
 		if err == nil {
 			return key.PrivateKey
@@ -422,18 +472,17 @@ func decryptStoreAccount(ks *keystore.KeyStore, account string, passwords []stri
 	return nil
 }
 
-// getPassPhrase retrieves the password associated with bzz account, either by fetching
-// from a list of pre-loaded passwords, or by requesting it interactively from user.
-func getPassPhrase(prompt string, i int, passwords []string) string {
-	// non-interactive
+// getPassPhrase retrieves the password associated with a bzzaccount, either fetched
+// from a list of preloaded passphrases, or requested interactively from the user.
+func getPassPhrase(prompt string, confirmation bool, i int, passwords []string) string {
+	// If a list of passwords was supplied, retrieve from them
 	if len(passwords) > 0 {
 		if i < len(passwords) {
 			return passwords[i]
 		}
 		return passwords[len(passwords)-1]
 	}
-
-	// fallback to interactive mode
+	// Otherwise prompt the user for the password
 	if prompt != "" {
 		fmt.Println(prompt)
 	}
@@ -441,6 +490,15 @@ func getPassPhrase(prompt string, i int, passwords []string) string {
 	if err != nil {
 		utils.Fatalf("Failed to read passphrase: %v", err)
 	}
+	if confirmation {
+		confirm, err := console.Stdin.PromptPassword("Repeat passphrase: ")
+		if err != nil {
+			utils.Fatalf("Failed to read passphrase confirmation: %v", err)
+		}
+		if password != confirm {
+			utils.Fatalf("Passphrases do not match")
+		}
+	}
 	return password
 }
 
```
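`getPassPhrase` resolves a passphrase in two stages. If a password list was preloaded (for instance through `--password`), attempt `i` uses `passwords[i]` and any later attempt reuses the last entry. Otherwise it prompts, and the new `confirmation` argument adds a "Repeat passphrase" prompt that aborts on a mismatch; `TestEmptyBzzAccountFlagNoAccountWrongPassword` checks exactly that case. A terminal-free sketch of both rules follows. `pickPassword`, `confirmPassphrase` and `errMismatch` are hypothetical and return values instead of calling `utils.Fatalf`.

```go
package main

import (
	"errors"
	"fmt"
)

// errMismatch mirrors the "Passphrases do not match" failure.
var errMismatch = errors.New("passphrases do not match")

// pickPassword returns the preloaded password for attempt i, reusing the last
// entry when i runs past the end. ok is false when no list was supplied, in
// which case the caller would fall back to an interactive prompt.
func pickPassword(passwords []string, i int) (pw string, ok bool) {
	if len(passwords) == 0 {
		return "", false
	}
	if i < len(passwords) {
		return passwords[i], true
	}
	return passwords[len(passwords)-1], true
}

// confirmPassphrase checks a typed passphrase against its repetition.
func confirmPassphrase(password, repeat string) error {
	if password != repeat {
		return errMismatch
	}
	return nil
}

func main() {
	list := []string{"first", "second"}
	for i := 0; i < 3; i++ {
		pw, _ := pickPassword(list, i)
		fmt.Println(i, pw) // 0 first, 1 second, 2 second
	}
	fmt.Println(confirmPassphrase("goodpassword", "wrongpassword"))
}
```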

```diff
@@ -473,3 +531,26 @@ func setSwarmBootstrapNodes(ctx *cli.Context, cfg *node.Config) {
 	}
 
 }
+
+func setSwarmNATFromInterface(ctx *cli.Context, cfg *node.Config) {
+	ifacename := ctx.GlobalString(SwarmNATInterfaceFlag.Name)
+
+	if ifacename == "" {
+		return
+	}
+
+	iface, err := net.InterfaceByName(ifacename)
+	if err != nil {
+		utils.Fatalf("can't get network interface %s", ifacename)
+	}
+	addrs, err := iface.Addrs()
+	if err != nil || len(addrs) == 0 {
+		utils.Fatalf("could not get address from interface %s: %v", ifacename, err)
+	}
+
+	ip, _, err := net.ParseCIDR(addrs[0].String())
+	if err != nil {
+		utils.Fatalf("could not parse IP addr from interface %s: %v", ifacename, err)
+	}
+	cfg.P2P.NAT = nat.ExtIP(ip)
+}
```
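`setSwarmNATFromInterface` is driven by the new `--natif` flag (or `SWARM_NAT_INTERFACE`). It announces the first address returned by `iface.Addrs()`, after stripping the prefix length with `net.ParseCIDR`. Address order is platform-dependent, so an interface carrying both IPv4 and IPv6 may announce either. The sketch isolates that parsing step. `addrStrings` and `firstIP` are hypothetical, and the `preferV4` option is an extra design choice of this sketch, not behaviour from the diff.

```go
package main

import (
	"errors"
	"fmt"
	"net"
)

// addrStrings converts interface addresses (as returned by (*net.Interface).Addrs)
// into their string form, e.g. "192.168.1.10/24".
func addrStrings(addrs []net.Addr) []string {
	out := make([]string, 0, len(addrs))
	for _, a := range addrs {
		out = append(out, a.String())
	}
	return out
}

// firstIP parses CIDR-formatted addresses and returns the first IP, like the
// diff does with addrs[0]. With preferV4 set (a hypothetical refinement) it
// returns the first IPv4 address if there is one, else the first address.
func firstIP(cidrs []string, preferV4 bool) (net.IP, error) {
	var first net.IP
	for _, c := range cidrs {
		ip, _, err := net.ParseCIDR(c)
		if err != nil {
			return nil, fmt.Errorf("could not parse IP addr %q: %v", c, err)
		}
		if !preferV4 || ip.To4() != nil {
			return ip, nil
		}
		if first == nil {
			first = ip
		}
	}
	if first == nil {
		return nil, errors.New("no addresses")
	}
	return first, nil
}

func main() {
	ifaces, err := net.Interfaces()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, iface := range ifaces {
		addrs, err := iface.Addrs()
		if err != nil || len(addrs) == 0 {
			continue
		}
		ip, err := firstIP(addrStrings(addrs), false)
		fmt.Println(iface.Name, ip, err)
	}
}
```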

```diff
@@ -1,26 +0,0 @@
-#!/bin/sh
-
-set -o errexit
-set -o pipefail
-set -o nounset
-
-PASSWORD=${PASSWORD:-}
-DATADIR=${DATADIR:-/root/.ethereum/}
-
-if [ "$PASSWORD" == "" ]; then echo "Password must be set, in order to use swarm non-interactively." && exit 1; fi
-
-echo $PASSWORD > /password
-
-KEYFILE=`find $DATADIR | grep UTC | head -n 1` || true
-if [ ! -f "$KEYFILE" ]; then echo "No keyfile found. Generating..." && geth --datadir $DATADIR --password /password account new; fi
-KEYFILE=`find $DATADIR | grep UTC | head -n 1` || true
-if [ ! -f "$KEYFILE" ]; then echo "Could not find nor generate a BZZ keyfile." && exit 1; else echo "Found keyfile $KEYFILE"; fi
-
-VERSION=`swarm version`
-echo "Running Swarm:"
-echo $VERSION
-
-export BZZACCOUNT="`echo -n $KEYFILE | tail -c 40`" || true
-if [ "$BZZACCOUNT" == "" ]; then echo "Could not parse BZZACCOUNT from keyfile." && exit 1; fi
-
-exec swarm --bzzaccount=$BZZACCOUNT --password /password --datadir $DATADIR $@ 2>&1
```
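The deleted Docker entrypoint took `BZZACCOUNT` from the last 40 characters of the first path under `$DATADIR` containing `UTC` (`tail -c 40`). When no such file was found, it generated a key with `geth account new`. With `getOrCreateAccount`, the swarm binary now picks or creates the keystore account itself. The v0.4.3 notes ask Docker users to mount the password file and data directory as volumes instead. A script may still need the address from a keyfile name. For that case, a slightly stricter sketch splits geth-style names (`UTC--<timestamp>--<address>`) on the last `--` and checks for 40 hex characters. `addressFromKeyfile` is hypothetical and the example path is illustrative.

```go
package main

import (
	"encoding/hex"
	"fmt"
	"path/filepath"
	"strings"
)

// addressFromKeyfile extracts the hex address from a geth-style keystore
// file name such as "UTC--<timestamp>--<address>".
func addressFromKeyfile(path string) (string, error) {
	base := filepath.Base(path)
	i := strings.LastIndex(base, "--")
	if !strings.HasPrefix(base, "UTC--") || i < 0 {
		return "", fmt.Errorf("%q is not a keystore file name", base)
	}
	addr := base[i+2:]
	if len(addr) != 40 {
		return "", fmt.Errorf("address part %q is not 40 characters", addr)
	}
	if _, err := hex.DecodeString(addr); err != nil {
		return "", fmt.Errorf("address part %q is not hex: %v", addr, err)
	}
	return addr, nil
}

func main() {
	addr, err := addressFromKeyfile("/data/keystore/UTC--2016-03-22T12-57-55.920751759Z--7ef5a6135f1fd6a02593eedc869c6d41d934aef8")
	fmt.Println(addr, err) // 7ef5a6135f1fd6a02593eedc869c6d41d934aef8 <nil>
}
```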

@ -67,8 +67,8 @@ Wire Protocol Specifications

|

||||

|

||||

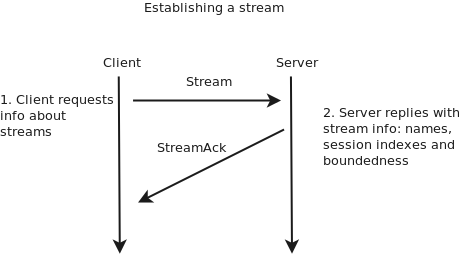

| Msg Name | From->To | Params | Example |

|

||||

| -------- | -------- | -------- | ------- |

|

||||

| StreamInfoReq | Client->Server | Streams`[]string` | `SYNC\|6, SYNC\|5` |

|

||||

| StreamInfoRes | Server->Client | Streams`[]StreamDescriptor` <br>Stream`string`<br>Cursor`uint64`<br>Bounded`bool` | `SYNC\|6;CUR=1632;bounded, SYNC\|7;CUR=18433;bounded` |

|

||||

| StreamInfoReq | Client->Server | Streams`[]ID` | `SYNC\|6, SYNC\|5` |

|

||||

| StreamInfoRes | Server->Client | Streams`[]StreamDescriptor` <br>Stream`ID`<br>Cursor`uint64`<br>Bounded`bool` | `SYNC\|6;CUR=1632;bounded, SYNC\|7;CUR=18433;bounded` |

|

||||

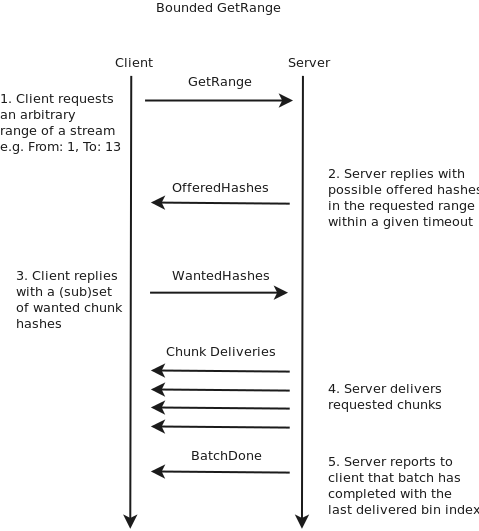

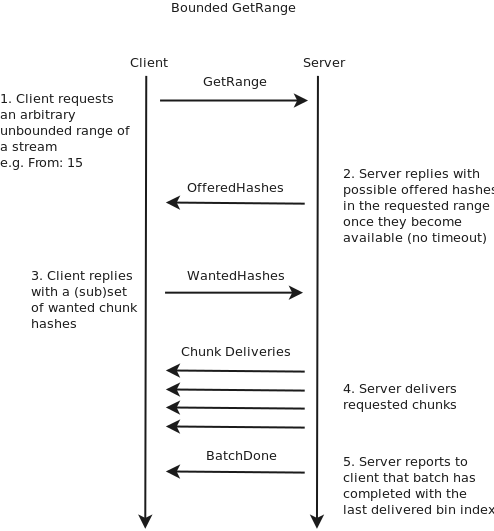

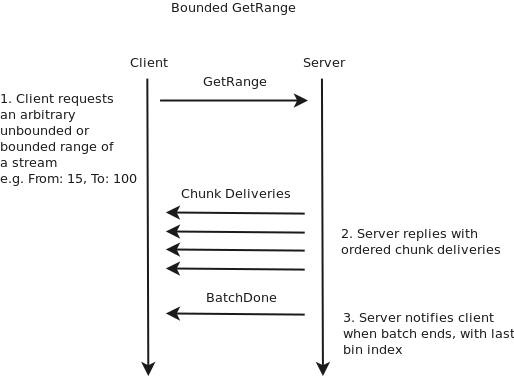

| GetRange | Client->Server| Ruid`uint`<br>Stream `string`<br>From`uint`<br>To`*uint`(nullable)<br>Roundtrip`bool` | `Ruid: 21321, Stream: SYNC\|6, From: 1, To: 100`(bounded), Roundtrip: true<br>`Stream: SYNC\|7, From: 109, Roundtrip: true`(unbounded) |

|

||||