Compare commits

66 Commits

| SHA1 |
|---|
| 7aa05618a3 |
| cdfbbe5e60 |
| fe7d1cb81c |
| c2a9395a4b |
| 586279bcfc |
| 8bd10e7c4c |
| 928e6165bc |
| 77c9e801aa |
| c78132417f |
| 849928887e |
| ba1163d49f |
| 6f9c89af39 |
| 246b8b1242 |
| f0db68cb75 |
| f0d1fdfb46 |
| 3b8b2e030a |
| b4fee677a5 |
| fe706583f9 |
| d0e0c17ece |
| 5aaa38bcaf |
| 6ff9b27f8e |
| 3f4e035506 |
| 57d9fbb927 |
| ee44e51b30 |
| 5011f24123 |
| d1eda334f3 |
| 2ae5ce9f2c |
| 4f5ac78b7e |
| 074c9af020 |
| 2da2d4e365 |
| 8eb76ab2a5 |
| a710d95243 |
| a06535d7ed |
| f511ac9be7 |
| e28ad2177e |
| cb16fe84cd |
| ec3569aa39 |
| 246edecf53 |
| 34834c5af9 |
| b845245614 |
| 5711fb9969 |
| d1eaecde9a |
| 00c8505d1e |
| 33f01efe69 |
| 377d312c81 |
| badf5d5412 |
| 0339f90b40 |
| 5455e8e6a9 |
| 6843b71a0d |
| 634408b5e8 |
| d053f78b74 |
| 93b6fceb2f |
| ac7860c35d |
| b0eab8729f |
| cb81f80b31 |
| ea97529185 |
| f1075191fe |
| 74c479fbc9 |
| 7e788d3a17 |
| 69b3c75f0d |
| b2c2fa40a2 |
| 50458d9524 |
| 9679e3e356 |
| 6db9f92b8a |
| 4a44498d45 |
| 216510c573 |

@ -1,9 +1,10 @@

|

||||

[package]

|

||||

name = "solana"

|

||||

description = "Blockchain Rebuilt for Scale"

|

||||

version = "0.6.0"

|

||||

description = "Blockchain, Rebuilt for Scale"

|

||||

version = "0.6.1"

|

||||

documentation = "https://docs.rs/solana"

|

||||

homepage = "http://solana.com/"

|

||||

readme = "README.md"

|

||||

repository = "https://github.com/solana-labs/solana"

|

||||

authors = [

|

||||

"Anatoly Yakovenko <anatoly@solana.com>",

|

||||

|

||||

56

README.md

@ -3,17 +3,17 @@

|

||||

[](https://buildkite.com/solana-labs/solana)

|

||||

[](https://codecov.io/gh/solana-labs/solana)

|

||||

|

||||

Disclaimer

|

||||

===

|

||||

|

||||

All claims, content, designs, algorithms, estimates, roadmaps, specifications, and performance measurements described in this project are done with the author's best effort. It is up to the reader to check and validate their accuracy and truthfulness. Furthermore nothing in this project constitutes a solicitation for investment.

|

||||

|

||||

Solana: Blockchain Rebuilt for Scale

|

||||

Blockchain, Rebuilt for Scale

|

||||

===

|

||||

|

||||

Solana™ is a new blockchain architecture built from the ground up for scale. The architecture supports

|

||||

up to 710 thousand transactions per second on a gigabit network.

|

||||

|

||||

Disclaimer

|

||||

===

|

||||

|

||||

All claims, content, designs, algorithms, estimates, roadmaps, specifications, and performance measurements described in this project are done with the author's best effort. It is up to the reader to check and validate their accuracy and truthfulness. Furthermore nothing in this project constitutes a solicitation for investment.

|

||||

|

||||

Introduction

|

||||

===

|

||||

|

||||

@ -82,17 +82,12 @@ open on all the machines you want to test with.

|

||||

Generate a leader configuration file with:

|

||||

|

||||

```bash

|

||||

cargo run --release --bin solana-fullnode-config > leader.json

|

||||

cargo run --release --bin solana-fullnode-config -- -d > leader.json

|

||||

```

|

||||

|

||||

Now start the server:

|

||||

|

||||

```bash

|

||||

$ cat ./multinode-demo/leader.sh

|

||||

#!/bin/bash

|

||||

export RUST_LOG=solana=info

|

||||

sudo sysctl -w net.core.rmem_max=26214400

|

||||

cargo run --release --bin solana-fullnode -- -l leader.json < genesis.log

|

||||

$ ./multinode-demo/leader.sh > leader-txs.log

|

||||

```

|

||||

|

||||

@ -100,7 +95,7 @@ To run a performance-enhanced fullnode on Linux, download `libcuda_verify_ed2551

|

||||

it by adding `--features=cuda` to the line that runs `solana-fullnode` in `leader.sh`.

|

||||

|

||||

```bash

|

||||

$ wget https://solana-build-artifacts.s3.amazonaws.com/v0.6.0/libcuda_verify_ed25519.a

|

||||

$ wget https://solana-build-artifacts.s3.amazonaws.com/v0.5.0/libcuda_verify_ed25519.a

|

||||

cargo run --release --features=cuda --bin solana-fullnode -- -l leader.json < genesis.log

|

||||

```

|

||||

|

||||

@ -112,15 +107,13 @@ Multinode Testnet

|

||||

|

||||

To run a multinode testnet, after starting a leader node, spin up some validator nodes:

|

||||

|

||||

Generate the validator's configuration file:

|

||||

|

||||

```bash

|

||||

cargo run --release --bin solana-fullnode-config -- -d > validator.json

|

||||

```

|

||||

|

||||

```bash

|

||||

$ cat ./multinode-demo/validator.sh

|

||||

#!/bin/bash

|

||||

rsync -v -e ssh $1/mint-demo.json .

|

||||

rsync -v -e ssh $1/leader.json .

|

||||

rsync -v -e ssh $1/genesis.log .

|

||||

export RUST_LOG=solana=info

|

||||

sudo sysctl -w net.core.rmem_max=26214400

|

||||

cargo run --release --bin solana-fullnode -- -l validator.json -v leader.json -b 9000 -d < genesis.log

|

||||

$ ./multinode-demo/validator.sh ubuntu@10.0.1.51:~/solana > validator-txs.log #The leader machine

|

||||

```

|

||||

|

||||

@ -128,7 +121,7 @@ As with the leader node, you can run a performance-enhanced validator fullnode b

|

||||

`--features=cuda` to the line that runs `solana-fullnode` in `validator.sh`.

|

||||

|

||||

```bash

|

||||

cargo run --release --features=cuda --bin solana-fullnode -- -l validator.json -v leader.json -b 9000 -d < genesis.log

|

||||

cargo run --release --features=cuda --bin solana-fullnode -- -l validator.json -v leader.json < genesis.log

|

||||

```

|

||||

|

||||

|

||||

@ -139,13 +132,7 @@ Now that your singlenode or multinode testnet is up and running, in a separate s

|

||||

the JSON configuration file here, not the genesis ledger.

|

||||

|

||||

```bash

|

||||

$ cat ./multinode-demo/client.sh

|

||||

#!/bin/bash

|

||||

export RUST_LOG=solana=info

|

||||

rsync -v -e ssh $1/leader.json .

|

||||

rsync -v -e ssh $1/mint-demo.json .

|

||||

cat mint-demo.json | cargo run --release --bin solana-client-demo -- -l leader.json

|

||||

$ ./multinode-demo/client.sh ubuntu@10.0.1.51:~/solana #The leader machine

|

||||

$ ./multinode-demo/client.sh ubuntu@10.0.1.51:~/solana 2 #The leader machine and the total number of nodes in the network

|

||||

```

|

||||

|

||||

What just happened? The client demo spins up several threads to send 500,000 transactions

|

||||

@ -210,6 +197,17 @@ to see the debug and info sections for streamer and server respectively. General

|

||||

we are using debug for infrequent debug messages, trace for potentially frequent messages and

|

||||

info for performance-related logging.

|

||||

|

||||

Attaching to a running process with gdb

|

||||

|

||||

```

|

||||

$ sudo gdb

|

||||

attach <PID>

|

||||

set logging on

|

||||

thread apply all bt

|

||||

```

|

||||

|

||||

This will dump all the threads' stack traces into gdb.txt.

|

||||

|

||||

Benchmarking

|

||||

---

|

||||

|

||||

|

||||

@ -11,6 +11,8 @@ steps:

|

||||

name: "cuda"

|

||||

- command: "ci/shellcheck.sh"

|

||||

name: "shellcheck [public]"

|

||||

- command: "ci/test-erasure.sh"

|

||||

name: "erasure"

|

||||

- wait

|

||||

- command: "ci/publish.sh"

|

||||

name: "publish release artifacts"

|

||||

|

||||

29

ci/test-erasure.sh

Executable file

@ -0,0 +1,29 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

set -o xtrace

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

if [[ -z "${libgf_complete_URL:-}" ]]; then

|

||||

echo libgf_complete_URL undefined

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [[ -z "${libJerasure_URL:-}" ]]; then

|

||||

echo libJerasure_URL undefined

|

||||

exit 1

|

||||

fi

|

||||

|

||||

curl -X GET -o libJerasure.so "$libJerasure_URL"

|

||||

curl -X GET -o libgf_complete.so "$libgf_complete_URL"

|

||||

|

||||

ln -s libJerasure.so libJerasure.so.2

|

||||

ln -s libJerasure.so libJerasure.so.2.0.0

|

||||

ln -s libgf_complete.so libgf_complete.so.1.0.0

|

||||

export LD_LIBRARY_PATH=$PWD:$LD_LIBRARY_PATH

|

||||

|

||||

# shellcheck disable=SC1090 # <-- shellcheck can't follow ~

|

||||

source ~/.cargo/env

|

||||

cargo test --features="erasure"

|

||||

|

||||

exit 0

|

||||

@ -1,65 +0,0 @@

|

||||

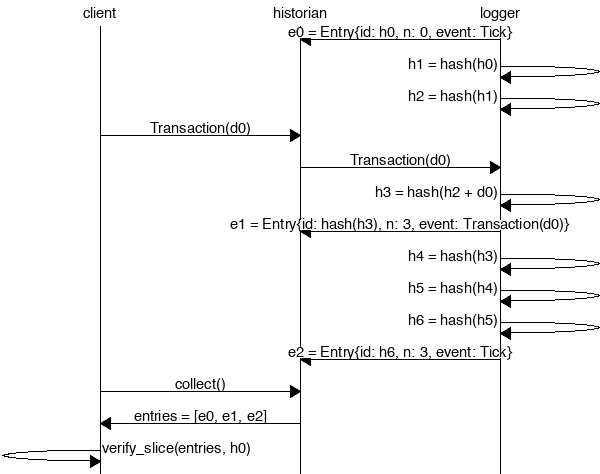

The Historian

|

||||

===

|

||||

|

||||

Create a *Historian* and send it *events* to generate an *event log*, where each *entry*

|

||||

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

||||

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

||||

|

||||

|

||||

|

||||

```rust

|

||||

extern crate solana;

|

||||

|

||||

use solana::historian::Historian;

|

||||

use solana::ledger::{Block, Entry, Hash};

|

||||

use solana::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||

use std::thread::sleep;

|

||||

use std::time::Duration;

|

||||

use std::sync::mpsc::SendError;

|

||||

|

||||

fn create_ledger(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

sleep(Duration::from_millis(15));

|

||||

let tokens = 42;

|

||||

let keypair = generate_keypair();

|

||||

let event0 = Event::new_claim(get_pubkey(&keypair), tokens, sign_claim_data(&tokens, &keypair));

|

||||

hist.sender.send(event0)?;

|

||||

sleep(Duration::from_millis(10));

|

||||

Ok(())

|

||||

}

|

||||

|

||||

fn main() {

|

||||

let seed = Hash::default();

|

||||

let hist = Historian::new(&seed, Some(10));

|

||||

create_ledger(&hist).expect("send error");

|

||||

drop(hist.sender);

|

||||

let entries: Vec<Entry<Hash>> = hist.receiver.iter().collect();

|

||||

for entry in &entries {

|

||||

println!("{:?}", entry);

|

||||

}

|

||||

// Proof-of-History: Verify the historian learned about the events

|

||||

// in the same order they appear in the vector.

|

||||

assert!(entries[..].verify(&seed));

|

||||

}

|

||||

```

|

||||

|

||||

Running the program should produce a ledger similar to:

|

||||

|

||||

```rust

|

||||

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { tokens: 42 } }

|

||||

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

||||

```

|

||||

|

||||

Proof-of-History

|

||||

---

|

||||

|

||||

Take note of the last line:

|

||||

|

||||

```rust

|

||||

assert!(entries[..].verify(&seed));

|

||||

```

|

||||

|

||||

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the

|

||||

historian, we can verify that `id` is the result of applying a sha256 hash to the previous `id`

|

||||

exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is

|

||||

included in the hash, the events cannot be reordered without regenerating all the hashes.
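
The verification rule described above can be sketched as follows. This is only an illustration under stated assumptions, not the crate's implementation: it substitutes the standard library's `DefaultHasher` (a 64-bit, non-cryptographic hash) for sha256 so that it builds without dependencies, and `Entry`, `next_id`, `next_entry`, and `verify` here are hypothetical stand-ins for the real types.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in for sha256 (assumption): NOT cryptographic, dependency-free only.
fn hash_id(id: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    id.hash(&mut hasher);
    hasher.finish()
}

/// Mix event data into an id, so the data becomes part of the chain.
fn hash_with_data(id: u64, data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    id.hash(&mut hasher);
    data.hash(&mut hasher);
    hasher.finish()
}

/// Hypothetical, simplified entry: `event` is `None` for a tick.
#[derive(Debug, Clone, PartialEq)]
struct Entry {
    num_hashes: u64,
    id: u64,
    event: Option<Vec<u8>>,
}

/// Hash `prev_id` exactly `num_hashes` times, then hash the event data on top.
fn next_id(prev_id: u64, num_hashes: u64, event: &Option<Vec<u8>>) -> u64 {
    let mut id = prev_id;
    for _ in 0..num_hashes {
        id = hash_id(id);
    }
    match event {
        Some(data) => hash_with_data(id, data),
        None => id,
    }
}

/// Build the entry that follows `prev_id`.
fn next_entry(prev_id: u64, num_hashes: u64, event: Option<Vec<u8>>) -> Entry {
    let id = next_id(prev_id, num_hashes, &event);
    Entry { num_hashes, id, event }
}

/// Check that every entry's id can be regenerated from the previous entry's id.
fn verify(entries: &[Entry], seed: u64) -> bool {
    let mut prev_id = seed;
    for entry in entries {
        if next_id(prev_id, entry.num_hashes, &entry.event) != entry.id {
            return false;
        }
        prev_id = entry.id;
    }
    true
}
```

Because each id depends on the previous id and on the event data, swapping two entries makes `verify` fail at the first entry that is out of place.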

|

||||

@ -1,18 +0,0 @@

|

||||

msc {

|

||||

client,historian,recorder;

|

||||

|

||||

recorder=>historian [ label = "e0 = Entry{id: h0, n: 0, event: Tick}" ] ;

|

||||

recorder=>recorder [ label = "h1 = hash(h0)" ] ;

|

||||

recorder=>recorder [ label = "h2 = hash(h1)" ] ;

|

||||

client=>historian [ label = "Transaction(d0)" ] ;

|

||||

historian=>recorder [ label = "Transaction(d0)" ] ;

|

||||

recorder=>recorder [ label = "h3 = hash(h2 + d0)" ] ;

|

||||

recorder=>historian [ label = "e1 = Entry{id: hash(h3), n: 3, event: Transaction(d0)}" ] ;

|

||||

recorder=>recorder [ label = "h4 = hash(h3)" ] ;

|

||||

recorder=>recorder [ label = "h5 = hash(h4)" ] ;

|

||||

recorder=>recorder [ label = "h6 = hash(h5)" ] ;

|

||||

recorder=>historian [ label = "e2 = Entry{id: h6, n: 3, event: Tick}" ] ;

|

||||

client=>historian [ label = "collect()" ] ;

|

||||

historian=>client [ label = "entries = [e0, e1, e2]" ] ;

|

||||

client=>client [ label = "entries.verify(h0)" ] ;

|

||||

}

|

||||

@ -1,11 +1,15 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

if [[ -z "$1" ]]; then

|

||||

echo "usage: $0 [network path to solana repo on leader machine]"

|

||||

echo "usage: $0 [network path to solana repo on leader machine] [number of nodes in the network if greater than 1]"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

LEADER="$1"

|

||||

COUNT="$2"

|

||||

if [[ -z "$2" ]]; then

|

||||

COUNT=1

|

||||

fi

|

||||

|

||||

set -x

|

||||

export RUST_LOG=solana=info

|

||||

@ -13,4 +17,4 @@ rsync -v -e ssh "$LEADER/leader.json" .

|

||||

rsync -v -e ssh "$LEADER/mint-demo.json" .

|

||||

|

||||

cargo run --release --bin solana-client-demo -- \

|

||||

-l leader.json < mint-demo.json 2>&1 | tee client.log

|

||||

-l leader.json -n $COUNT -d < mint-demo.json 2>&1 | tee client.log

|

||||

|

||||

@ -17,5 +17,5 @@ export RUST_LOG=solana=info

|

||||

|

||||

sudo sysctl -w net.core.rmem_max=26214400

|

||||

|

||||

cargo run --release --features=cuda --bin solana-fullnode -- \

|

||||

-l validator.json -v leader.json -b 9000 -d < genesis.log

|

||||

cargo run --release --bin solana-fullnode -- \

|

||||

-l validator.json -v leader.json < genesis.log

|

||||

|

||||

102

src/bank.rs

@ -1,6 +1,6 @@

|

||||

//! The `bank` module tracks client balances, and the progress of pending

|

||||

//! transactions. It offers a high-level public API that signs transactions

|

||||

//! on behalf of the caller, and a private low-level API for when they have

|

||||

//! The `bank` module tracks client balances and the progress of smart

|

||||

//! contracts. It offers a high-level API that signs transactions

|

||||

//! on behalf of the caller, and a low-level API for when they have

|

||||

//! already been signed and verified.

|

||||

|

||||

extern crate libc;

|

||||

@ -19,25 +19,69 @@ use std::sync::atomic::{AtomicIsize, AtomicUsize, Ordering};

|

||||

use std::sync::RwLock;

|

||||

use transaction::{Instruction, Plan, Transaction};

|

||||

|

||||

/// The number of most recent `last_id` values that the bank will track the signatures

|

||||

/// of. Once the bank discards a `last_id`, it will reject any transactions that use

|

||||

/// that `last_id`. Lowering this value reduces memory consumption,

|

||||

/// but requires clients to update their `last_id` more frequently. Raising the value

|

||||

/// lengthens the time a client must wait to be certain a missing transaction will

|

||||

/// not be processed by the network.

|

||||

pub const MAX_ENTRY_IDS: usize = 1024 * 4;

|

||||

|

||||

/// Reasons a transaction might be rejected.

|

||||

#[derive(Debug, PartialEq, Eq)]

|

||||

pub enum BankError {

|

||||

/// Attempt to debit from `PublicKey`, but found no record of a prior credit.

|

||||

AccountNotFound(PublicKey),

|

||||

|

||||

/// The requested debit from `PublicKey` has the potential to draw the balance

|

||||

/// below zero. This can occur when a debit and credit are processed in parallel.

|

||||

/// The bank may reject the debit or push it to a future entry.

|

||||

InsufficientFunds(PublicKey),

|

||||

|

||||

/// The bank has seen `Signature` before. This can occur under normal operation

|

||||

/// when a UDP packet is duplicated, as a user error from a client not updating

|

||||

/// its `last_id`, or as a double-spend attack.

|

||||

DuplicateSiganture(Signature),

|

||||

|

||||

/// The bank has not seen the given `last_id` or the transaction is too old and

|

||||

/// the `last_id` has been discarded.

|

||||

LastIdNotFound(Hash),

|

||||

|

||||

/// The transaction is invalid and has requested a debit or credit of negative

|

||||

/// tokens.

|

||||

NegativeTokens,

|

||||

}

|

||||

|

||||

pub type Result<T> = result::Result<T, BankError>;

|

||||

|

||||

/// The state of all accounts and contracts after processing its entries.

|

||||

pub struct Bank {

|

||||

/// A map of account public keys to the balance in that account.

|

||||

balances: RwLock<HashMap<PublicKey, AtomicIsize>>,

|

||||

|

||||

/// A map of smart contract transaction signatures to what remains of its payment

|

||||

/// plan. Each transaction that targets the plan should cause it to be reduced.

|

||||

/// Once it cannot be reduced, final payments are made and it is discarded.

|

||||

pending: RwLock<HashMap<Signature, Plan>>,

|

||||

|

||||

/// A FIFO queue of `last_id` items, where each item is a set of signatures

|

||||

/// that have been processed using that `last_id`. The bank uses this data to

|

||||

/// reject transactions with signatures it has seen before as well as `last_id`

|

||||

/// values that are so old that its `last_id` has been pulled out of the queue.

|

||||

last_ids: RwLock<VecDeque<(Hash, RwLock<HashSet<Signature>>)>>,

|

||||

|

||||

/// The set of trusted timekeepers. A Timestamp transaction from a `PublicKey`

|

||||

/// outside this set will be discarded. Note that if validators do not have the

|

||||

/// same set as leaders, they may interpret the ledger differently.

|

||||

time_sources: RwLock<HashSet<PublicKey>>,

|

||||

|

||||

/// The most recent timestamp from a trusted timekeeper. This timestamp is applied

|

||||

/// to every smart contract when it enters the system. If it is waiting on a

|

||||

/// timestamp witness before that timestamp, the bank will execute it immediately.

|

||||

last_time: RwLock<DateTime<Utc>>,

|

||||

|

||||

/// The number of transactions the bank has processed without error since the

|

||||

/// start of the ledger.

|

||||

transaction_count: AtomicUsize,

|

||||

}

|

||||

|

||||
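
The `last_ids` field documented above, a bounded FIFO queue of entry IDs with a set of seen signatures per ID, can be sketched roughly as below. This is a simplified model and not the bank's code: IDs and signatures are plain `u64` values, locking is omitted, and `RecentIds`, `Rejection`, and `register_entry_id` are hypothetical names chosen for illustration.

```rust
use std::collections::{HashSet, VecDeque};

/// Hypothetical, reduced version of the rejection reasons relevant here.
#[derive(Debug, PartialEq)]
enum Rejection {
    DuplicateSignature,
    LastIdNotFound,
}

/// Bounded FIFO of recent entry IDs, each with the signatures seen under it.
struct RecentIds {
    capacity: usize,
    queue: VecDeque<(u64, HashSet<u64>)>,
}

impl RecentIds {
    fn new(capacity: usize) -> Self {
        RecentIds { capacity, queue: VecDeque::new() }
    }

    /// Track a new entry ID, discarding the oldest one once the queue is full.
    fn register_entry_id(&mut self, last_id: u64) {
        if self.queue.len() >= self.capacity {
            self.queue.pop_front();
        }
        self.queue.push_back((last_id, HashSet::new()));
    }

    /// Record `signature` under `last_id`. Fails if the ID has already been
    /// discarded (or was never seen) or if the signature was recorded before.
    fn reserve_signature(&mut self, signature: u64, last_id: u64) -> Result<(), Rejection> {
        // Search newest-first, since clients usually reference recent IDs.
        match self.queue.iter_mut().rev().find(|item| item.0 == last_id) {
            Some(item) => {
                if item.1.insert(signature) {
                    Ok(())
                } else {
                    Err(Rejection::DuplicateSignature)
                }
            }
            None => Err(Rejection::LastIdNotFound),
        }
    }
}
```

The capacity plays the role of `MAX_ENTRY_IDS`: a smaller queue uses less memory but expires a client's `last_id` sooner.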

@ -67,7 +111,7 @@ impl Bank {

|

||||

bank

|

||||

}

|

||||

|

||||

/// Commit funds to the 'to' party.

|

||||

/// Commit funds to the `payment.to` party.

|

||||

fn apply_payment(&self, payment: &Payment) {

|

||||

// First we check balances with a read lock to maximize potential parallelization.

|

||||

if self.balances

|

||||

@ -89,13 +133,14 @@ impl Bank {

|

||||

}

|

||||

}

|

||||

|

||||

/// Return the last entry ID registered

|

||||

/// Return the last entry ID registered.

|

||||

pub fn last_id(&self) -> Hash {

|

||||

let last_ids = self.last_ids.read().expect("'last_ids' read lock");

|

||||

let last_item = last_ids.iter().last().expect("empty 'last_ids' list");

|

||||

last_item.0

|

||||

}

|

||||

|

||||

/// Store the given signature. The bank will reject any transaction with the same signature.

|

||||

fn reserve_signature(signatures: &RwLock<HashSet<Signature>>, sig: &Signature) -> Result<()> {

|

||||

if signatures

|

||||

.read()

|

||||

@ -111,14 +156,16 @@ impl Bank {

|

||||

Ok(())

|

||||

}

|

||||

|

||||

fn forget_signature(signatures: &RwLock<HashSet<Signature>>, sig: &Signature) {

|

||||

/// Forget the given `signature` because its transaction was rejected.

|

||||

fn forget_signature(signatures: &RwLock<HashSet<Signature>>, signature: &Signature) {

|

||||

signatures

|

||||

.write()

|

||||

.expect("'signatures' write lock in forget_signature")

|

||||

.remove(sig);

|

||||

.remove(signature);

|

||||

}

|

||||

|

||||

fn forget_signature_with_last_id(&self, sig: &Signature, last_id: &Hash) {

|

||||

/// Forget the given `signature` with `last_id` because the transaction was rejected.

|

||||

fn forget_signature_with_last_id(&self, signature: &Signature, last_id: &Hash) {

|

||||

if let Some(entry) = self.last_ids

|

||||

.read()

|

||||

.expect("'last_ids' read lock in forget_signature_with_last_id")

|

||||

@ -126,11 +173,11 @@ impl Bank {

|

||||

.rev()

|

||||

.find(|x| x.0 == *last_id)

|

||||

{

|

||||

Self::forget_signature(&entry.1, sig);

|

||||

Self::forget_signature(&entry.1, signature);

|

||||

}

|

||||

}

|

||||

|

||||

fn reserve_signature_with_last_id(&self, sig: &Signature, last_id: &Hash) -> Result<()> {

|

||||

fn reserve_signature_with_last_id(&self, signature: &Signature, last_id: &Hash) -> Result<()> {

|

||||

if let Some(entry) = self.last_ids

|

||||

.read()

|

||||

.expect("'last_ids' read lock in reserve_signature_with_last_id")

|

||||

@ -138,7 +185,7 @@ impl Bank {

|

||||

.rev()

|

||||

.find(|x| x.0 == *last_id)

|

||||

{

|

||||

return Self::reserve_signature(&entry.1, sig);

|

||||

return Self::reserve_signature(&entry.1, signature);

|

||||

}

|

||||

Err(BankError::LastIdNotFound(*last_id))

|

||||

}

|

||||

@ -207,6 +254,8 @@ impl Bank {

|

||||

}

|

||||

}

|

||||

|

||||

/// Apply only a transaction's credits. Credits from multiple transactions

|

||||

/// may safely be applied in parallel.

|

||||

fn apply_credits(&self, tx: &Transaction) {

|

||||

match &tx.instruction {

|

||||

Instruction::NewContract(contract) => {

|

||||

@ -215,8 +264,8 @@ impl Bank {

|

||||

.read()

|

||||

.expect("timestamp creation in apply_credits")));

|

||||

|

||||

if let Some(ref payment) = plan.final_payment() {

|

||||

self.apply_payment(payment);

|

||||

if let Some(payment) = plan.final_payment() {

|

||||

self.apply_payment(&payment);

|

||||

} else {

|

||||

let mut pending = self.pending

|

||||

.write()

|

||||

@ -233,17 +282,17 @@ impl Bank {

|

||||

}

|

||||

}

|

||||

|

||||

/// Process a Transaction.

|

||||

/// Process a Transaction. If it contains a payment plan that requires a witness

|

||||

/// to progress, the payment plan will be stored in the bank.

|

||||

fn process_transaction(&self, tx: &Transaction) -> Result<()> {

|

||||

self.apply_debits(tx)?;

|

||||

self.apply_credits(tx);

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a batch of transactions.

|

||||

/// Process a batch of transactions. It runs all debits first to filter out any

|

||||

/// transactions that can't be processed in parallel deterministically.

|

||||

pub fn process_transactions(&self, txs: Vec<Transaction>) -> Vec<Result<Transaction>> {

|

||||

// Run all debits first to filter out any transactions that can't be processed

|

||||

// in parallel deterministically.

|

||||

info!("processing Transactions {}", txs.len());

|

||||

let results: Vec<_> = txs.into_par_iter()

|

||||

.map(|tx| self.apply_debits(&tx).map(|_| tx))

|

||||
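
The debits-first ordering in `process_transactions` can be illustrated with a small model. The sketch below is an assumption-laden simplification: it runs sequentially rather than with a parallel iterator, uses a plain `HashMap` instead of the bank's locked balances, and `Transfer`, `TransferError`, and `process_transfers` are hypothetical names.

```rust
use std::collections::HashMap;

type Account = u32;

#[derive(Debug, Clone, PartialEq)]
struct Transfer {
    from: Account,
    to: Account,
    tokens: i64,
}

#[derive(Debug, PartialEq)]
enum TransferError {
    AccountNotFound,
    InsufficientFunds,
    NegativeTokens,
}

/// Withdraw from the sender, rejecting anything the current balance cannot cover.
fn apply_debit(balances: &mut HashMap<Account, i64>, tx: &Transfer) -> Result<(), TransferError> {
    if tx.tokens < 0 {
        return Err(TransferError::NegativeTokens);
    }
    let balance = balances
        .get_mut(&tx.from)
        .ok_or(TransferError::AccountNotFound)?;
    if *balance < tx.tokens {
        return Err(TransferError::InsufficientFunds);
    }
    *balance -= tx.tokens;
    Ok(())
}

/// Deposit to the receiver; this cannot fail.
fn apply_credit(balances: &mut HashMap<Account, i64>, tx: &Transfer) {
    *balances.entry(tx.to).or_insert(0) += tx.tokens;
}

/// Phase 1 applies every debit; phase 2 credits only the survivors.
fn process_transfers(
    balances: &mut HashMap<Account, i64>,
    txs: Vec<Transfer>,
) -> Vec<Result<Transfer, TransferError>> {
    let debited: Vec<_> = txs
        .into_iter()
        .map(|tx| apply_debit(balances, &tx).map(|_| tx))
        .collect();
    for tx in debited.iter().flatten() {
        apply_credit(balances, tx);
    }
    debited
}
```

Since no credit lands before all debits have been checked, a transfer that relies on funds credited earlier in the same batch is rejected, whatever order the debits are evaluated in.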

@ -260,6 +309,7 @@ impl Bank {

|

||||

.collect()

|

||||

}

|

||||

|

||||

/// Process an ordered list of entries.

|

||||

pub fn process_entries<I>(&self, entries: I) -> Result<()>

|

||||

where

|

||||

I: IntoIterator<Item = Entry>,

|

||||

@ -273,7 +323,8 @@ impl Bank {

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Signature.

|

||||

/// Process a Witness Signature. Any payment plans waiting on this signature

|

||||

/// will progress one step.

|

||||

fn apply_signature(&self, from: PublicKey, tx_sig: Signature) -> Result<()> {

|

||||

if let Occupied(mut e) = self.pending

|

||||

.write()

|

||||

@ -290,7 +341,8 @@ impl Bank {

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Timestamp.

|

||||

/// Process a Witness Timestamp. Any payment plans waiting on this timestamp

|

||||

/// will progress one step.

|

||||

fn apply_timestamp(&self, from: PublicKey, dt: DateTime<Utc>) -> Result<()> {

|

||||

// If this is the first timestamp we've seen, it probably came from the genesis block,

|

||||

// so we'll trust it.

|

||||

@ -329,8 +381,8 @@ impl Bank {

|

||||

plan.apply_witness(&Witness::Timestamp(*self.last_time

|

||||

.read()

|

||||

.expect("'last_time' read lock when creating timestamp")));

|

||||

if let Some(ref payment) = plan.final_payment() {

|

||||

self.apply_payment(payment);

|

||||

if let Some(payment) = plan.final_payment() {

|

||||

self.apply_payment(&payment);

|

||||

completed.push(key.clone());

|

||||

}

|

||||

}

|

||||

@ -392,7 +444,7 @@ mod tests {

|

||||

use signature::KeyPairUtil;

|

||||

|

||||

#[test]

|

||||

fn test_bank() {

|

||||

fn test_two_payments_to_one_party() {

|

||||

let mint = Mint::new(10_000);

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

let bank = Bank::new(&mint);

|

||||

@ -409,7 +461,7 @@ mod tests {

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_invalid_tokens() {

|

||||

fn test_negative_tokens() {

|

||||

let mint = Mint::new(1);

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

let bank = Bank::new(&mint);

|

||||

@ -433,7 +485,7 @@ mod tests {

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_invalid_transfer() {

|

||||

fn test_insufficient_funds() {

|

||||

let mint = Mint::new(11_000);

|

||||

let bank = Bank::new(&mint);

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

@ -570,7 +622,7 @@ mod tests {

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_max_entry_ids() {

|

||||

fn test_reject_old_last_id() {

|

||||

let mint = Mint::new(1);

|

||||

let bank = Bank::new(&mint);

|

||||

let sig = Signature::default();

|

||||

|

||||

@ -1,14 +1,17 @@

|

||||

//! The `banking_stage` processes Transaction messages.

|

||||

//! The `banking_stage` processes Transaction messages. It is intended to be used

|

||||

//! to construct a software pipeline. The stage uses all available CPU cores and

|

||||

//! can do its processing in parallel with signature verification on the GPU.

|

||||

|

||||

use bank::Bank;

|

||||

use bincode::deserialize;

|

||||

use counter::Counter;

|

||||

use packet;

|

||||

use packet::SharedPackets;

|

||||

use rayon::prelude::*;

|

||||

use record_stage::Signal;

|

||||

use result::Result;

|

||||

use std::net::SocketAddr;

|

||||

use std::sync::atomic::{AtomicBool, Ordering};

|

||||

use std::sync::atomic::{AtomicBool, AtomicUsize, Ordering};

|

||||

use std::sync::mpsc::{channel, Receiver, Sender};

|

||||

use std::sync::Arc;

|

||||

use std::thread::{Builder, JoinHandle};

|

||||

@ -17,12 +20,20 @@ use std::time::Instant;

|

||||

use timing;

|

||||

use transaction::Transaction;

|

||||

|

||||

/// Stores the stage's thread handle and output receiver.

|

||||

pub struct BankingStage {

|

||||

/// Handle to the stage's thread.

|

||||

pub thread_hdl: JoinHandle<()>,

|

||||

|

||||

/// Output receiver for the following stage.

|

||||

pub signal_receiver: Receiver<Signal>,

|

||||

}

|

||||

|

||||

impl BankingStage {

|

||||

/// Create the stage using `bank`. Exit when either `exit` is set or

|

||||

/// when `verified_receiver` or the stage's output receiver is dropped.

|

||||

/// Discard input packets using `packet_recycler` to minimize memory

|

||||

/// allocations in a previous stage such as the `fetch_stage`.

|

||||

pub fn new(

|

||||

bank: Arc<Bank>,

|

||||

exit: Arc<AtomicBool>,

|

||||
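
The stage shape documented above (a thread handle plus an output receiver, exiting when an `exit` flag is set or a channel is dropped) can be sketched with the standard library alone. This is a generic illustration, not `BankingStage` itself: `DoublingStage`, its `u64` messages, and the 50 ms polling interval are all assumptions made for the example.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::mpsc::{channel, Receiver, RecvTimeoutError};
use std::sync::Arc;
use std::thread::{self, JoinHandle};
use std::time::Duration;

/// Hypothetical pipeline stage that doubles each input value.
struct DoublingStage {
    /// Handle to the stage's thread.
    thread_hdl: JoinHandle<()>,
    /// Output receiver for the following stage.
    output: Receiver<u64>,
}

impl DoublingStage {
    /// Spawn the stage. It exits when `exit` is set, when `input` is
    /// disconnected, or when the output receiver has been dropped.
    fn new(exit: Arc<AtomicBool>, input: Receiver<u64>) -> Self {
        let (sender, output) = channel();
        let thread_hdl = thread::spawn(move || loop {
            if exit.load(Ordering::Relaxed) {
                break;
            }
            // Poll with a timeout so the exit flag is rechecked regularly.
            match input.recv_timeout(Duration::from_millis(50)) {
                Ok(value) => {
                    if sender.send(value * 2).is_err() {
                        break; // downstream receiver was dropped
                    }
                }
                Err(RecvTimeoutError::Timeout) => continue,
                Err(RecvTimeoutError::Disconnected) => break,
            }
        });
        DoublingStage { thread_hdl, output }
    }
}
```

Stages of this shape chain together by passing one stage's `output` as the next stage's `input`.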

@ -52,6 +63,8 @@ impl BankingStage {

|

||||

}

|

||||

}

|

||||

|

||||

/// Convert the transactions from a blob of binary data to a vector of transactions and

|

||||

/// an unused `SocketAddr` that could be used to send a response.

|

||||

fn deserialize_transactions(p: &packet::Packets) -> Vec<Option<(Transaction, SocketAddr)>> {

|

||||

p.packets

|

||||

.par_iter()

|

||||

@ -63,6 +76,8 @@ impl BankingStage {

|

||||

.collect()

|

||||

}

|

||||

|

||||

/// Process the incoming packets and send output `Signal` messages to `signal_sender`.

|

||||

/// Discard packets via `packet_recycler`.

|

||||

fn process_packets(

|

||||

bank: Arc<Bank>,

|

||||

verified_receiver: &Receiver<Vec<(SharedPackets, Vec<u8>)>>,

|

||||

@ -80,6 +95,8 @@ impl BankingStage {

|

||||

timing::duration_as_ms(&recv_start.elapsed()),

|

||||

mms.len(),

|

||||

);

|

||||

let count = mms.iter().map(|x| x.1.len()).sum();

|

||||

static mut COUNTER: Counter = create_counter!("banking_stage_process_packets", 1);

|

||||

let proc_start = Instant::now();

|

||||

for (msgs, vers) in mms {

|

||||

let transactions = Self::deserialize_transactions(&msgs.read().unwrap());

|

||||

@ -100,7 +117,7 @@ impl BankingStage {

|

||||

debug!("process_transactions");

|

||||

let results = bank.process_transactions(transactions);

|

||||

let transactions = results.into_iter().filter_map(|x| x.ok()).collect();

|

||||

signal_sender.send(Signal::Events(transactions))?;

|

||||

signal_sender.send(Signal::Transactions(transactions))?;

|

||||

debug!("done process_transactions");

|

||||

|

||||

packet_recycler.recycle(msgs);

|

||||

@ -115,6 +132,7 @@ impl BankingStage {

|

||||

reqs_len,

|

||||

(reqs_len as f32) / (total_time_s)

|

||||

);

|

||||

inc_counter!(COUNTER, count, proc_start);

|

||||

Ok(())

|

||||

}

|

||||

}

|

||||

@ -293,7 +311,7 @@ mod bench {

|

||||

&packet_recycler,

|

||||

).unwrap();

|

||||

let signal = signal_receiver.recv().unwrap();

|

||||

if let Signal::Events(ref transactions) = signal {

|

||||

if let Signal::Transactions(transactions) = signal {

|

||||

assert_eq!(transactions.len(), tx);

|

||||

} else {

|

||||

assert!(false);

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

extern crate env_logger;

|

||||

extern crate getopts;

|

||||

extern crate isatty;

|

||||

extern crate pnet;

|

||||

@ -10,8 +11,8 @@ use isatty::stdin_isatty;

|

||||

use pnet::datalink;

|

||||

use rayon::prelude::*;

|

||||

use solana::crdt::{Crdt, ReplicatedData};

|

||||

use solana::data_replicator::DataReplicator;

|

||||

use solana::mint::MintDemo;

|

||||

use solana::ncp::Ncp;

|

||||

use solana::signature::{GenKeys, KeyPair, KeyPairUtil};

|

||||

use solana::streamer::default_window;

|

||||

use solana::thin_client::ThinClient;

|

||||

@ -49,6 +50,7 @@ fn get_ip_addr() -> Option<IpAddr> {

|

||||

}

|

||||

|

||||

fn main() {

|

||||

env_logger::init().unwrap();

|

||||

let mut threads = 4usize;

|

||||

let mut num_nodes = 1usize;

|

||||

|

||||

@ -107,9 +109,10 @@ fn main() {

|

||||

&client_addr,

|

||||

&leader,

|

||||

signal.clone(),

|

||||

num_nodes + 2,

|

||||

num_nodes,

|

||||

&mut c_threads,

|

||||

);

|

||||

assert_eq!(validators.len(), num_nodes);

|

||||

|

||||

if stdin_isatty() {

|

||||

eprintln!("nothing found on stdin, expected a json file");

|

||||

@ -175,38 +178,63 @@ fn main() {

|

||||

}

|

||||

});

|

||||

|

||||

let sample_period = 1; // in seconds

|

||||

println!("Sampling tps every second...",);

|

||||

validators.into_par_iter().for_each(|val| {

|

||||

let mut client = mk_client(&client_addr, &val);

|

||||

let mut now = Instant::now();

|

||||

let mut initial_tx_count = client.transaction_count();

|

||||

for i in 0..100 {

|

||||

let tx_count = client.transaction_count();

|

||||

let duration = now.elapsed();

|

||||

now = Instant::now();

|

||||

let sample = tx_count - initial_tx_count;

|

||||

initial_tx_count = tx_count;

|

||||

println!(

|

||||

"{}: Transactions processed {}",

|

||||

val.transactions_addr, sample

|

||||

);

|

||||

let ns = duration.as_secs() * 1_000_000_000 + u64::from(duration.subsec_nanos());

|

||||

let tps = (sample * 1_000_000_000) as f64 / ns as f64;

|

||||

println!("{}: {} tps", val.transactions_addr, tps);

|

||||

let total = tx_count - first_count;

|

||||

println!(

|

||||

"{}: Total Transactions processed {}",

|

||||

val.transactions_addr, total

|

||||

);

|

||||

if total == transactions.len() as u64 {

|

||||

break;

|

||||

let maxes: Vec<_> = validators

|

||||

.into_par_iter()

|

||||

.map(|val| {

|

||||

let mut client = mk_client(&client_addr, &val);

|

||||

let mut now = Instant::now();

|

||||

let mut initial_tx_count = client.transaction_count();

|

||||

let mut max_tps = 0.0;

|

||||

let mut total = 0;

|

||||

for i in 0..100 {

|

||||

let tx_count = client.transaction_count();

|

||||

let duration = now.elapsed();

|

||||

now = Instant::now();

|

||||

let sample = tx_count - initial_tx_count;

|

||||

initial_tx_count = tx_count;

|

||||

println!(

|

||||

"{}: Transactions processed {}",

|

||||

val.transactions_addr, sample

|

||||

);

|

||||

let ns = duration.as_secs() * 1_000_000_000 + u64::from(duration.subsec_nanos());

|

||||

let tps = (sample * 1_000_000_000) as f64 / ns as f64;

|

||||

if tps > max_tps {

|

||||

max_tps = tps;

|

||||

}

|

||||

println!("{}: {} tps", val.transactions_addr, tps);

|

||||

total = tx_count - first_count;

|

||||

println!(

|

||||

"{}: Total Transactions processed {}",

|

||||

val.transactions_addr, total

|

||||

);

|

||||

if total == transactions.len() as u64 {

|

||||

break;

|

||||

}

|

||||

if i > 20 && sample == 0 {

|

||||

break;

|

||||

}

|

||||

sleep(Duration::new(sample_period, 0));

|

||||

}

|

||||

if i > 20 && sample == 0 {

|

||||

break;

|

||||

}

|

||||

sleep(Duration::new(1, 0));

|

||||

(max_tps, total)

|

||||

})

|

||||

.collect();

|

||||

let mut max_of_maxes = 0.0;

|

||||

let mut total_txs = 0;

|

||||

for (max, txs) in &maxes {

|

||||

if *max > max_of_maxes {

|

||||

max_of_maxes = *max;

|

||||

}

|

||||

});

|

||||

total_txs += *txs;

|

||||

}

|

||||

println!(

|

||||

"\nHighest TPS: {} sampling period {}s total transactions: {} clients: {}",

|

||||

max_of_maxes,

|

||||

sample_period,

|

||||

total_txs,

|

||||

maxes.len()

|

||||

);

|

||||

signal.store(true, Ordering::Relaxed);

|

||||

for t in c_threads {

|

||||

t.join().unwrap();

|

||||
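The sampling loop in this hunk turns a transaction-count delta and an elapsed `Duration` into a rate, then tracks the largest rate seen. A minimal sketch of that arithmetic in isolation follows. The helper names `sample_tps` and `max_tps` are hypothetical and do not appear in the diff, and the sketch converts to `f64` before scaling, which differs slightly from the integer multiply in the hunk.

```rust
use std::time::Duration;

/// Transactions per second for `sample` transactions observed over `elapsed`.
/// Hypothetical helper: converts to f64 before dividing so that a large
/// sample cannot overflow an intermediate u64 product.
fn sample_tps(sample: u64, elapsed: Duration) -> f64 {
    let secs = elapsed.as_secs() as f64 + f64::from(elapsed.subsec_nanos()) / 1e9;
    if secs == 0.0 {
        // Avoid dividing by zero when no time has elapsed.
        return 0.0;
    }
    sample as f64 / secs
}

/// Largest rate in a list of per-sample rates, or 0.0 when the list is empty.
fn max_tps(rates: &[f64]) -> f64 {
    rates.iter().cloned().fold(0.0, f64::max)
}
```

Keeping the rate computation in a pure function makes it possible to check the math without a running validator.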

@ -235,7 +263,14 @@ fn spy_node(client_addr: &Arc<RwLock<SocketAddr>>) -> (ReplicatedData, UdpSocket

|

||||

addr.set_port(port + 1);

|

||||

let daddr = "0.0.0.0:0".parse().unwrap();

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

let node = ReplicatedData::new(pubkey, gossip.local_addr().unwrap(), daddr, daddr, daddr);

|

||||

let node = ReplicatedData::new(

|

||||

pubkey,

|

||||

gossip.local_addr().unwrap(),

|

||||

daddr,

|

||||

daddr,

|

||||

daddr,

|

||||

daddr,

|

||||

);

|

||||

(node, gossip)

|

||||

}

|

||||

|

||||

@ -255,33 +290,34 @@ fn converge(

|

||||

let spy_ref = Arc::new(RwLock::new(spy_crdt));

|

||||

let window = default_window();

|

||||

let gossip_send_socket = UdpSocket::bind("0.0.0.0:0").expect("bind 0");

|

||||

let data_replicator = DataReplicator::new(

|

||||

let ncp = Ncp::new(

|

||||

spy_ref.clone(),

|

||||

window.clone(),

|

||||

spy_gossip,

|

||||

gossip_send_socket,

|

||||

exit.clone(),

|

||||

).expect("DataReplicator::new");

|

||||

//wait for the network to converge

|

||||

let mut rv = vec![];

|

||||

//wait for the network to converge, 30 seconds should be plenty

|

||||

for _ in 0..30 {

|

||||

let min = spy_ref.read().unwrap().convergence();

|

||||

if num_nodes as u64 == min {

|

||||

println!("converged!");

|

||||

let v: Vec<ReplicatedData> = spy_ref

|

||||

.read()

|

||||

.unwrap()

|

||||

.table

|

||||

.values()

|

||||

.into_iter()

|

||||

.filter(|x| x.requests_addr != daddr)

|

||||

.cloned()

|

||||

.collect();

|

||||

if v.len() >= num_nodes {

|

||||

println!("CONVERGED!");

|

||||

rv.extend(v.into_iter());

|

||||

break;

|

||||

}

|

||||

sleep(Duration::new(1, 0));

|

||||

}

|

||||

threads.extend(data_replicator.thread_hdls.into_iter());

|

||||

let v: Vec<ReplicatedData> = spy_ref

|

||||

.read()

|

||||

.unwrap()

|

||||

.table

|

||||

.values()

|

||||

.into_iter()

|

||||

.filter(|x| x.requests_addr != daddr)

|

||||

.map(|x| x.clone())

|

||||

.collect();

|

||||

v.clone()

|

||||

threads.extend(ncp.thread_hdls.into_iter());

|

||||

rv

|

||||

}

|

||||

|

||||

fn read_leader(path: String) -> ReplicatedData {

|

||||

|

||||

@ -129,6 +129,7 @@ fn main() {

|

||||

UdpSocket::bind("0.0.0.0:0").unwrap(),

|

||||

UdpSocket::bind(repl_data.replicate_addr).unwrap(),

|

||||

UdpSocket::bind(repl_data.gossip_addr).unwrap(),

|

||||

UdpSocket::bind(repl_data.repair_addr).unwrap(),

|

||||

leader,

|

||||

exit.clone(),

|

||||

);

|

||||

|

||||

@ -8,9 +8,13 @@ use payment_plan::{Payment, PaymentPlan, Witness};

|

||||

use signature::PublicKey;

|

||||

use std::mem;

|

||||

|

||||

/// A data type representing a `Witness` that the payment plan is waiting on.

|

||||

#[derive(Serialize, Deserialize, Debug, PartialEq, Eq, Clone)]

|

||||

pub enum Condition {

|

||||

/// Wait for a `Timestamp` `Witness` at or after the given `DateTime`.

|

||||

Timestamp(DateTime<Utc>),

|

||||

|

||||

/// Wait for a `Signature` `Witness` from `PublicKey`.

|

||||

Signature(PublicKey),

|

||||

}

|

||||

|

||||

@ -18,19 +22,26 @@ impl Condition {

|

||||

/// Return true if the given Witness satisfies this Condition.

|

||||

pub fn is_satisfied(&self, witness: &Witness) -> bool {

|

||||

match (self, witness) {

|

||||

(&Condition::Signature(ref pubkey), &Witness::Signature(ref from)) => pubkey == from,

|

||||

(&Condition::Timestamp(ref dt), &Witness::Timestamp(ref last_time)) => dt <= last_time,

|

||||

(Condition::Signature(pubkey), Witness::Signature(from)) => pubkey == from,

|

||||

(Condition::Timestamp(dt), Witness::Timestamp(last_time)) => dt <= last_time,

|

||||

_ => false,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/// A data type representing a payment plan.

|

||||

#[repr(C)]

|

||||

#[derive(Serialize, Deserialize, Debug, PartialEq, Eq, Clone)]

|

||||

pub enum Budget {

|

||||

/// Make a payment.

|

||||

Pay(Payment),

|

||||

|

||||

/// Make a payment after some condition.

|

||||

After(Condition, Payment),

|

||||

Race((Condition, Payment), (Condition, Payment)),

|

||||

|

||||

/// Either make a payment after one condition or a different payment after another

|

||||

/// condition, whichever condition is satisfied first.

|

||||

Or((Condition, Payment), (Condition, Payment)),

|

||||

}

|

||||

|

||||

impl Budget {

|

||||

@ -57,7 +68,7 @@ impl Budget {

|

||||

tokens: i64,

|

||||

to: PublicKey,

|

||||

) -> Self {

|

||||

Budget::Race(

|

||||

Budget::Or(

|

||||

(Condition::Timestamp(dt), Payment { tokens, to }),

|

||||

(Condition::Signature(from), Payment { tokens, to: from }),

|

||||

)

|

||||

@ -67,31 +78,27 @@ impl Budget {

|

||||

impl PaymentPlan for Budget {

|

||||

/// Return Payment if the budget requires no additional Witnesses.

|

||||

fn final_payment(&self) -> Option<Payment> {

|

||||

match *self {

|

||||

Budget::Pay(ref payment) => Some(payment.clone()),

|

||||

match self {

|

||||

Budget::Pay(payment) => Some(payment.clone()),

|

||||

_ => None,

|

||||

}

|

||||

}

|

||||

|

||||

/// Return true if the budget spends exactly `spendable_tokens`.

|

||||

fn verify(&self, spendable_tokens: i64) -> bool {

|

||||

match *self {

|

||||

Budget::Pay(ref payment) | Budget::After(_, ref payment) => {

|

||||

payment.tokens == spendable_tokens

|

||||

}

|

||||

Budget::Race(ref a, ref b) => {

|

||||

a.1.tokens == spendable_tokens && b.1.tokens == spendable_tokens

|

||||

}

|

||||

match self {

|

||||

Budget::Pay(payment) | Budget::After(_, payment) => payment.tokens == spendable_tokens,

|

||||

Budget::Or(a, b) => a.1.tokens == spendable_tokens && b.1.tokens == spendable_tokens,

|

||||

}

|

||||

}

|

||||

|

||||

/// Apply a witness to the budget to see if the budget can be reduced.

|

||||

/// If so, modify the budget in-place.

|

||||

fn apply_witness(&mut self, witness: &Witness) {

|

||||

let new_payment = match *self {

|

||||

Budget::After(ref cond, ref payment) if cond.is_satisfied(witness) => Some(payment),

|

||||

Budget::Race((ref cond, ref payment), _) if cond.is_satisfied(witness) => Some(payment),

|

||||

Budget::Race(_, (ref cond, ref payment)) if cond.is_satisfied(witness) => Some(payment),

|

||||

let new_payment = match self {

|

||||

Budget::After(cond, payment) if cond.is_satisfied(witness) => Some(payment),

|

||||

Budget::Or((cond, payment), _) if cond.is_satisfied(witness) => Some(payment),

|

||||

Budget::Or(_, (cond, payment)) if cond.is_satisfied(witness) => Some(payment),

|

||||

_ => None,

|

||||

}.cloned();

|

||||

|

||||

|

||||
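The `Budget` hunks above rename `Race` to `Or` and reduce a budget to a plain payment once a witness satisfies one of its conditions. The sketch below illustrates that reduction under simplifying assumptions: `PublicKey` and `Timestamp` are hypothetical integer stand-ins for the crate's real key and `DateTime<Utc>` types, and the final assignment to `*self` is an assumed completion of the truncated hunk, not a copy of it.

```rust
// Hypothetical stand-ins for the crate's real types.
type PublicKey = u8;
type Timestamp = u64;

#[derive(Debug, Clone, PartialEq)]
struct Payment {
    tokens: i64,
    to: PublicKey,
}

enum Witness {
    Timestamp(Timestamp),
    Signature(PublicKey),
}

#[derive(Debug, Clone, PartialEq)]
enum Condition {
    Timestamp(Timestamp),
    Signature(PublicKey),
}

impl Condition {
    /// Return true if the given witness satisfies this condition.
    fn is_satisfied(&self, witness: &Witness) -> bool {
        match (self, witness) {
            (Condition::Signature(pubkey), Witness::Signature(from)) => pubkey == from,
            (Condition::Timestamp(dt), Witness::Timestamp(last_time)) => dt <= last_time,
            _ => false,
        }
    }
}

#[derive(Debug, Clone, PartialEq)]
enum Budget {
    Pay(Payment),
    After(Condition, Payment),
    Or((Condition, Payment), (Condition, Payment)),
}

impl Budget {
    /// Collapse the budget to `Pay` if the witness satisfies a condition.
    fn apply_witness(&mut self, witness: &Witness) {
        let new_payment = match &*self {
            Budget::After(cond, payment) if cond.is_satisfied(witness) => Some(payment),
            Budget::Or((cond, payment), _) if cond.is_satisfied(witness) => Some(payment),
            Budget::Or(_, (cond, payment)) if cond.is_satisfied(witness) => Some(payment),
            _ => None,
        }.cloned();
        // Assumed completion: replace the budget with the unlocked payment.
        if let Some(payment) = new_payment {
            *self = Budget::Pay(payment);
        }
    }
}
```

In this sketch a witness that satisfies neither condition leaves the budget unchanged, so witnesses can be applied in any order until one matches.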

70

src/counter.rs

Normal file

@ -0,0 +1,70 @@

|

||||

use std::sync::atomic::{AtomicUsize, Ordering};

|

||||

use std::time::{Duration, SystemTime, UNIX_EPOCH};

|

||||

|

||||

pub struct Counter {

|

||||

pub name: &'static str,

|

||||

pub counts: AtomicUsize,

|

||||

pub nanos: AtomicUsize,

|

||||

pub times: AtomicUsize,

|

||||

pub lograte: usize,

|

||||

}

|

||||

|

||||

macro_rules! create_counter {

|

||||

($name:expr, $lograte:expr) => {

|

||||

Counter {

|

||||

name: $name,

|

||||

counts: AtomicUsize::new(0),

|

||||

nanos: AtomicUsize::new(0),

|

||||

times: AtomicUsize::new(0),

|

||||

lograte: $lograte,

|

||||

}

|

||||

};

|

||||

}

|

||||

|

||||

macro_rules! inc_counter {

|

||||

($name:expr, $count:expr, $start:expr) => {

|

||||

unsafe { $name.inc($count, $start.elapsed()) };

|

||||

};

|

||||

}

|

||||

|

||||

impl Counter {

|

||||

pub fn inc(&mut self, events: usize, dur: Duration) {

|

||||

let total = dur.as_secs() * 1_000_000_000 + dur.subsec_nanos() as u64;

|

||||

let counts = self.counts.fetch_add(events, Ordering::Relaxed);

|

||||

let nanos = self.nanos.fetch_add(total as usize, Ordering::Relaxed);

|

||||

let times = self.times.fetch_add(1, Ordering::Relaxed);

|

||||

if times % self.lograte == 0 && times > 0 {

|

||||

let now = SystemTime::now().duration_since(UNIX_EPOCH).unwrap();

|

||||

let now_ms = now.as_secs() * 1_000 + now.subsec_nanos() as u64 / 1_000_000;

|

||||

info!(

|

||||

"COUNTER:{{\"name:\":\"{}\", \"counts\": {}, \"nanos\": {}, \"samples\": {} \"rate\": {}, \"now\": {}}}",

|

||||

self.name,

|

||||

counts,

|

||||

nanos,

|

||||

times,

|

||||

counts as f64 * 1e9 / nanos as f64,

|

||||

now_ms,

|

||||

);

|

||||

}

|

||||

}

|

||||

}

|

||||

#[cfg(test)]

|

||||

mod tests {

|

||||

use counter::Counter;

|

||||

use std::sync::atomic::{AtomicUsize, Ordering};

|

||||

use std::time::Instant;

|

||||

#[test]

|

||||

fn test_counter() {

|

||||

static mut COUNTER: Counter = create_counter!("test", 100);

|

||||

let start = Instant::now();

|

||||

let count = 1;

|

||||

inc_counter!(COUNTER, count, start);

|

||||

unsafe {

|

||||

assert_eq!(COUNTER.counts.load(Ordering::Relaxed), 1);

|

||||

assert_ne!(COUNTER.nanos.load(Ordering::Relaxed), 0);

|

||||

assert_eq!(COUNTER.times.load(Ordering::Relaxed), 1);

|

||||

assert_eq!(COUNTER.lograte, 100);

|

||||

assert_eq!(COUNTER.name, "test");

|

||||

}

|

||||

}

|

||||

}

|

||||
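The new `Counter` takes `&mut self` in `inc`, which is why the test reaches it through `static mut` and `unsafe`. Since every mutable field is already an `AtomicUsize`, one possible alternative is a shared-reference `inc` on a plain `static`. The sketch below is an assumption about how that could look, not the crate's code: the `new` constructor and `record_example` are hypothetical, `inc` returns the rate instead of logging it, and it reports post-increment totals rather than the pre-increment values that `fetch_add` returns.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::time::Duration;

pub struct Counter {
    pub name: &'static str,
    pub counts: AtomicUsize,
    pub nanos: AtomicUsize,
    pub times: AtomicUsize,
    pub lograte: usize,
}

impl Counter {
    /// Hypothetical const constructor so the counter can live in a `static`.
    pub const fn new(name: &'static str, lograte: usize) -> Self {
        Counter {
            name,
            counts: AtomicUsize::new(0),
            nanos: AtomicUsize::new(0),
            times: AtomicUsize::new(0),
            lograte,
        }
    }

    /// Record `events` that took `dur`. Returns `Some(events per second)`
    /// on every `lograte`-th call, where the original would emit a log line.
    pub fn inc(&self, events: usize, dur: Duration) -> Option<f64> {
        let total = (dur.as_secs() * 1_000_000_000 + u64::from(dur.subsec_nanos())) as usize;
        // fetch_add returns the previous value, so add the increment back
        // to obtain the totals that include this call.
        let counts = self.counts.fetch_add(events, Ordering::Relaxed) + events;
        let nanos = self.nanos.fetch_add(total, Ordering::Relaxed) + total;
        let times = self.times.fetch_add(1, Ordering::Relaxed) + 1;
        if self.lograte > 0 && times % self.lograte == 0 {
            Some(counts as f64 * 1e9 / nanos as f64)
        } else {
            None
        }
    }
}

// A plain `static` is enough because `inc` only needs `&self`.
static EXAMPLE: Counter = Counter::new("example", 100);

/// Hypothetical call site that needs no `unsafe`.
pub fn record_example(events: usize, dur: Duration) -> Option<f64> {
    EXAMPLE.inc(events, dur)
}
```

With this shape the `inc_counter!` macro would not need its `unsafe` block, at the cost of changing the method signature.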

288

src/crdt.rs

@ -77,6 +77,9 @@ pub struct ReplicatedData {

|

||||

pub requests_addr: SocketAddr,

|

||||

/// transactions address

|

||||

pub transactions_addr: SocketAddr,

|

||||

/// repair address, we use this to jump ahead of the packets

|

||||

/// destined to the replicate_addr

|

||||

pub repair_addr: SocketAddr,

|

||||

/// current leader identity

|

||||

pub current_leader_id: PublicKey,

|

||||

/// last verified hash that was submitted to the leader

|

||||

@ -92,6 +95,7 @@ impl ReplicatedData {

|

||||

replicate_addr: SocketAddr,

|

||||

requests_addr: SocketAddr,

|

||||

transactions_addr: SocketAddr,

|

||||

repair_addr: SocketAddr,

|

||||

) -> ReplicatedData {

|

||||

ReplicatedData {

|

||||

id,

|

||||

@ -101,6 +105,7 @@ impl ReplicatedData {

|

||||

replicate_addr,

|

||||

requests_addr,

|

||||

transactions_addr,

|

||||

repair_addr,

|

||||

current_leader_id: PublicKey::default(),

|

||||

last_verified_hash: Hash::default(),

|

||||

last_verified_count: 0,

|

||||

@ -118,6 +123,7 @@ impl ReplicatedData {

|

||||

let gossip_addr = Self::next_port(&bind_addr, 1);

|

||||

let replicate_addr = Self::next_port(&bind_addr, 2);

|

||||

let requests_addr = Self::next_port(&bind_addr, 3);

|

||||

let repair_addr = Self::next_port(&bind_addr, 4);

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

ReplicatedData::new(

|

||||

pubkey,

|

||||

@ -125,6 +131,7 @@ impl ReplicatedData {

|

||||

replicate_addr,

|

||||

requests_addr,

|

||||

transactions_addr,

|

||||

repair_addr,

|

||||

)

|

||||

}

|

||||

}

|

||||
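The leader constructor above assigns consecutive ports after the bind address, and the `replicated_data_new_leader` test later in this diff pins the resulting layout (transactions on the bind port, then gossip, replicate, requests, repair). A minimal sketch of that layout follows. The body of `next_port` is an assumption since the diff does not show it, and `leader_addrs` is a hypothetical helper introduced only for illustration.

```rust
use std::net::SocketAddr;

/// Copy of `addr` with its port advanced by `offset`.
/// Assumed behavior of the crate's `next_port`; panics on port overflow.
fn next_port(addr: &SocketAddr, offset: u16) -> SocketAddr {
    let mut next = *addr;
    let port = addr.port().checked_add(offset).expect("port overflow");
    next.set_port(port);
    next
}

/// Hypothetical helper returning the leader's addresses in the order
/// transactions, gossip, replicate, requests, repair.
fn leader_addrs(bind_addr: &SocketAddr) -> [SocketAddr; 5] {
    [
        *bind_addr,
        next_port(bind_addr, 1),
        next_port(bind_addr, 2),
        next_port(bind_addr, 3),
        next_port(bind_addr, 4),
    ]
}
```

Under this layout a leader bound to port 1234 would occupy ports 1234 through 1238, so the new repair port takes the next free offset rather than disturbing the existing ones.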

@ -152,10 +159,9 @@ pub struct Crdt {

|

||||

pub remote: HashMap<PublicKey, u64>,

|

||||

pub update_index: u64,

|

||||

pub me: PublicKey,

|

||||

timeout: Duration,

|

||||

}

|

||||

// TODO These messages should be signed, and go through the gpu pipeline for spam filtering

|

||||

#[derive(Serialize, Deserialize)]

|

||||

#[derive(Serialize, Deserialize, Debug)]

|

||||

enum Protocol {

|

||||

/// forward your own latest data structure when requesting an update

|

||||

/// this doesn't update the `remote` update index, but it allows the

|

||||

@ -177,7 +183,6 @@ impl Crdt {

|

||||

remote: HashMap::new(),

|

||||

me: me.id,

|

||||

update_index: 1,

|

||||

timeout: Duration::from_millis(100),

|

||||

};

|

||||

g.local.insert(me.id, g.update_index);

|

||||

g.table.insert(me.id, me);

|

||||

@ -222,14 +227,38 @@ impl Crdt {

|

||||

}

|

||||

}

|

||||

|

||||

pub fn index_blobs(

|

||||

obj: &Arc<RwLock<Self>>,

|

||||

blobs: &Vec<SharedBlob>,

|

||||

receive_index: &mut u64,

|

||||

) -> Result<()> {

|

||||

let me: ReplicatedData = {

|

||||

let robj = obj.read().expect("'obj' read lock in crdt::index_blobs");

|

||||

debug!("broadcast table {}", robj.table.len());

|

||||

robj.table[&robj.me].clone()

|

||||

};

|

||||

|

||||

// enumerate all the blobs, those are the indices

|

||||

for (i, b) in blobs.iter().enumerate() {

|

||||

// only leader should be broadcasting

|

||||

let mut blob = b.write().expect("'blob' write lock in crdt::index_blobs");

|

||||

blob.set_id(me.id).expect("set_id in pub fn broadcast");

|

||||

blob.set_index(*receive_index + i as u64)

|

||||

.expect("set_index in pub fn broadcast");

|

||||

}

|

||||

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// broadcast messages from the leader to layer 1 nodes

|

||||

/// # Remarks

|

||||

/// We need to avoid having obj locked while doing any io, such as the `send_to`

|

||||

pub fn broadcast(

|

||||

obj: &Arc<RwLock<Self>>,

|

||||

blobs: &Vec<SharedBlob>,

|

||||

window: &Arc<RwLock<Vec<Option<SharedBlob>>>>,

|

||||

s: &UdpSocket,

|

||||

transmit_index: &mut u64,

|

||||

received_index: u64,

|

||||

) -> Result<()> {

|

||||

let (me, table): (ReplicatedData, Vec<ReplicatedData>) = {

|

||||

// copy to avoid locking during IO

|

||||

@ -259,31 +288,35 @@ impl Crdt {

|

||||

return Err(Error::CrdtTooSmall);

|

||||

}

|

||||

trace!("nodes table {}", nodes.len());

|

||||

trace!("blobs table {}", blobs.len());

|

||||

// enumerate all the blobs, those are the indices

|

||||

|

||||

// enumerate all the blobs in the window, those are the indices

|

||||

// transmit them to nodes, starting from a different node

|

||||

let orders: Vec<_> = blobs

|

||||

.iter()

|

||||

.enumerate()

|

||||

.zip(

|

||||

nodes

|

||||

.iter()

|

||||

.cycle()

|

||||

.skip((*transmit_index as usize) % nodes.len()),

|

||||

)

|

||||

.collect();

|

||||

let mut orders = Vec::new();

|

||||

let window_l = window.write().unwrap();

|

||||

for i in *transmit_index..received_index {

|

||||

let is = i as usize;

|

||||

let k = is % window_l.len();

|

||||

assert!(window_l[k].is_some());

|

||||

|

||||

orders.push((window_l[k].clone(), nodes[is % nodes.len()]));

|

||||

}

|

||||

|

||||

trace!("orders table {}", orders.len());

|

||||

let errs: Vec<_> = orders

|

||||

.into_iter()

|

||||

.map(|((i, b), v)| {

|

||||

.map(|(b, v)| {

|

||||

// only leader should be broadcasting

|

||||

assert!(me.current_leader_id != v.id);

|

||||

let mut blob = b.write().expect("'b' write lock in pub fn broadcast");

|

||||

blob.set_id(me.id).expect("set_id in pub fn broadcast");

|

||||

blob.set_index(*transmit_index + i as u64)

|

||||

.expect("set_index in pub fn broadcast");

|

||||

let bl = b.unwrap();

|

||||

let blob = bl.read().expect("blob read lock in streamer::broadcast");

|

||||

//TODO profile this, may need multiple sockets for par_iter

|

||||

trace!("broadcast {} to {}", blob.meta.size, v.replicate_addr);

|

||||

trace!(

|

||||

"broadcast idx: {} sz: {} to {} coding: {}",

|

||||

blob.get_index().unwrap(),

|

||||

blob.meta.size,

|

||||

v.replicate_addr,

|

||||

blob.is_coding()

|

||||

);

|

||||

assert!(blob.meta.size < BLOB_SIZE);

|

||||

let e = s.send_to(&blob.data[..blob.meta.size], &v.replicate_addr);

|

||||

trace!("done broadcast {} to {}", blob.meta.size, v.replicate_addr);

|

||||
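The rewritten `broadcast` walks blob indices from `transmit_index` up to `received_index`, reads each blob from its slot in the circular window, and assigns it to a node round-robin. The index arithmetic can be sketched on its own as below. `broadcast_orders` is a hypothetical name, and the sketch returns positions instead of the blobs and node records used in the hunk.

```rust
/// For each blob index in `transmit_index..received_index`, return the pair
/// (window slot, node position) that index maps to.
/// Hypothetical helper; callers must pass non-zero `window_len` and `num_nodes`.
fn broadcast_orders(
    transmit_index: u64,
    received_index: u64,
    window_len: usize,
    num_nodes: usize,
) -> Vec<(usize, usize)> {
    (transmit_index..received_index)
        .map(|i| {
            let is = i as usize;
            // The window is circular, and nodes are visited round-robin.
            (is % window_len, is % num_nodes)
        })
        .collect()
}
```

Because both positions derive from the absolute blob index, a given blob maps to the same window slot and node regardless of how the range is batched across calls.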

@ -390,7 +423,7 @@ impl Crdt {

|

||||

let daddr = "0.0.0.0:0".parse().unwrap();

|

||||

let valid: Vec<_> = self.table

|

||||

.values()

|

||||

.filter(|r| r.id != self.me && r.replicate_addr != daddr)

|

||||

.filter(|r| r.id != self.me && r.repair_addr != daddr)

|

||||

.collect();

|

||||

if valid.is_empty() {

|

||||

return Err(Error::CrdtTooSmall);

|

||||

@ -482,12 +515,9 @@ impl Crdt {

|

||||

if exit.load(Ordering::Relaxed) {

|

||||

return;

|

||||

}

|

||||

//TODO this should be a tuned parameter

|

||||

sleep(

|

||||

obj.read()

|

||||

.expect("'obj' read lock in pub fn gossip")

|

||||

.timeout,

|

||||

);

|

||||

//TODO: possibly tune this parameter

|

||||

//we saw a deadlock passing an obj.read().unwrap().timeout into sleep

|

||||

sleep(Duration::from_millis(100));

|

||||

})

|

||||

.unwrap()

|

||||

}

|

||||

@ -498,7 +528,7 @@ impl Crdt {

|

||||

blob_recycler: &BlobRecycler,

|

||||

) -> Option<SharedBlob> {

|

||||

let pos = (ix as usize) % window.read().unwrap().len();

|

||||

if let &Some(ref blob) = &window.read().unwrap()[pos] {

|

||||

if let Some(blob) = &window.read().unwrap()[pos] {

|

||||

let rblob = blob.read().unwrap();

|

||||

let blob_ix = rblob.get_index().expect("run_window_request get_index");

|

||||

if blob_ix == ix {

|

||||

@ -509,7 +539,7 @@ impl Crdt {

|

||||

let sz = rblob.meta.size;

|

||||

outblob.meta.size = sz;

|

||||

outblob.data[..sz].copy_from_slice(&rblob.data[..sz]);

|

||||

outblob.meta.set_addr(&from.replicate_addr);

|

||||

outblob.meta.set_addr(&from.repair_addr);

|

||||

//TODO, set the sender id to the requester so we don't retransmit

|

||||

//come up with a cleaner solution for this when sender signatures are checked

|

||||

outblob.set_id(from.id).expect("blob set_id");

|

||||

@ -518,7 +548,7 @@ impl Crdt {

|

||||

}

|

||||

} else {

|

||||

assert!(window.read().unwrap()[pos].is_none());

|

||||

info!("failed RequestWindowIndex {} {}", ix, from.replicate_addr);

|

||||

info!("failed RequestWindowIndex {} {}", ix, from.repair_addr);

|

||||

}

|

||||

None

|

||||

}

|

||||

@ -574,16 +604,18 @@ impl Crdt {

|

||||

None

|

||||

}

|

||||

Ok(Protocol::RequestWindowIndex(from, ix)) => {

|

||||

//TODO this doesn't depend on CRDT module, can be moved

|

||||

//but we are using the listen thread to service these requests

|

||||

//TODO verify from is signed

|

||||

obj.write().unwrap().insert(&from);

|

||||

let me = obj.read().unwrap().my_data().clone();

|

||||

trace!(

|

||||

"received RequestWindowIndex {} {} myaddr {}",

|

||||

ix,

|

||||

from.replicate_addr,

|

||||

me.replicate_addr

|

||||

from.repair_addr,

|

||||

me.repair_addr

|

||||

);

|

||||

assert_ne!(from.replicate_addr, me.replicate_addr);

|

||||

assert_ne!(from.repair_addr, me.repair_addr);

|

||||

Self::run_window_request(&window, &from, ix, blob_recycler)

|

||||

}

|

||||

Err(_) => {

|

||||

@ -656,6 +688,7 @@ pub struct Sockets {

|

||||

pub transaction: UdpSocket,

|

||||

pub respond: UdpSocket,

|

||||

pub broadcast: UdpSocket,

|

||||

pub repair: UdpSocket,

|

||||

}

|

||||

|

||||

pub struct TestNode {

|

||||

@ -672,6 +705,7 @@ impl TestNode {

|

||||

let replicate = UdpSocket::bind("0.0.0.0:0").unwrap();

|

||||

let respond = UdpSocket::bind("0.0.0.0:0").unwrap();

|

||||

let broadcast = UdpSocket::bind("0.0.0.0:0").unwrap();

|

||||

let repair = UdpSocket::bind("0.0.0.0:0").unwrap();

|

||||

let pubkey = KeyPair::new().pubkey();

|

||||

let data = ReplicatedData::new(

|

||||

pubkey,

|

||||

@ -679,6 +713,7 @@ impl TestNode {

|

||||

replicate.local_addr().unwrap(),

|

||||

requests.local_addr().unwrap(),

|

||||

transaction.local_addr().unwrap(),

|

||||

repair.local_addr().unwrap(),

|

||||

);

|

||||

TestNode {

|

||||

data: data,

|

||||

@ -690,6 +725,7 @@ impl TestNode {

|

||||

transaction,

|

||||

respond,

|

||||

broadcast,

|

||||

repair,

|

||||

},

|

||||

}

|

||||

}

|

||||

@ -698,7 +734,14 @@ impl TestNode {

|

||||

#[cfg(test)]

|

||||

mod tests {

|

||||

use crdt::{parse_port_or_addr, Crdt, ReplicatedData};

|

||||

use packet::BlobRecycler;

|

||||

use result::Error;

|

||||

use signature::{KeyPair, KeyPairUtil};

|

||||

use std::sync::atomic::{AtomicBool, Ordering};

|

||||

use std::sync::mpsc::channel;

|

||||

use std::sync::{Arc, RwLock};

|

||||

use std::time::Duration;

|

||||

use streamer::default_window;

|

||||

|

||||

#[test]

|

||||

fn test_parse_port_or_addr() {

|

||||

@ -709,8 +752,6 @@ mod tests {

|

||||

let p3 = parse_port_or_addr(None);

|

||||

assert_eq!(p3.port(), 8000);

|

||||

}

|

||||

|

||||

/// Test that insert drops messages that are older

|

||||

#[test]

|

||||

fn insert_test() {

|

||||

let mut d = ReplicatedData::new(

|

||||

@ -719,6 +760,7 @@ mod tests {

|

||||

"127.0.0.1:1235".parse().unwrap(),

|

||||

"127.0.0.1:1236".parse().unwrap(),

|

||||

"127.0.0.1:1237".parse().unwrap(),

|

||||

"127.0.0.1:1238".parse().unwrap(),

|

||||

);

|

||||

assert_eq!(d.version, 0);

|

||||

let mut crdt = Crdt::new(d.clone());

|

||||

@ -736,6 +778,15 @@ mod tests {

|

||||

copy

|

||||

}

|

||||

#[test]

|

||||

fn replicated_data_new_leader() {

|

||||

let d1 = ReplicatedData::new_leader(&"127.0.0.1:1234".parse().unwrap());

|

||||

assert_eq!(d1.gossip_addr, "127.0.0.1:1235".parse().unwrap());

|

||||

assert_eq!(d1.replicate_addr, "127.0.0.1:1236".parse().unwrap());

|

||||

assert_eq!(d1.requests_addr, "127.0.0.1:1237".parse().unwrap());

|

||||

assert_eq!(d1.transactions_addr, "127.0.0.1:1234".parse().unwrap());

|

||||

assert_eq!(d1.repair_addr, "127.0.0.1:1238".parse().unwrap());

|

||||

}

|

||||

#[test]

|

||||

fn update_test() {

|

||||

let d1 = ReplicatedData::new(

|

||||

KeyPair::new().pubkey(),

|

||||

@ -743,6 +794,7 @@ mod tests {

|

||||

"127.0.0.1:1235".parse().unwrap(),

|

||||

"127.0.0.1:1236".parse().unwrap(),

|

||||

"127.0.0.1:1237".parse().unwrap(),

|

||||

"127.0.0.1:1238".parse().unwrap(),

|

||||

);

|

||||

let d2 = ReplicatedData::new(

|

||||

KeyPair::new().pubkey(),

|

||||

@ -750,6 +802,7 @@ mod tests {

|

||||

"127.0.0.1:1235".parse().unwrap(),

|

||||

"127.0.0.1:1236".parse().unwrap(),

|

||||

"127.0.0.1:1237".parse().unwrap(),

|

||||

"127.0.0.1:1238".parse().unwrap(),

|

||||

);

|

||||

let d3 = ReplicatedData::new(

|

||||

KeyPair::new().pubkey(),

|

||||

@ -757,6 +810,7 @@ mod tests {

|

||||

"127.0.0.1:1235".parse().unwrap(),

|

||||

"127.0.0.1:1236".parse().unwrap(),

|

||||

"127.0.0.1:1237".parse().unwrap(),

|

||||

"127.0.0.1:1238".parse().unwrap(),

|

||||

);

|

||||

let mut crdt = Crdt::new(d1.clone());

|

||||

let (key, ix, ups) = crdt.get_updates_since(0);

|

||||

@ -784,5 +838,165 @@ mod tests {

|

||||

sorted(&crdt.table.values().map(|x| x.clone()).collect())

|

||||

);

|

||||

}

|

||||

#[test]

|

||||

fn window_index_request() {

|

||||

let me = ReplicatedData::new(

|

||||