Compare commits

855 Commits

v0.1.1

...

v0.6.0-bet

| Author | SHA1 | Date | |

|---|---|---|---|

| ef8eac92e3 | |||

| 9c9c63572b | |||

| 6c0c6de1d0 | |||

| b57aecc24c | |||

| 290dde60a0 | |||

| 38623785f9 | |||

| 256ecc7208 | |||

| 76b06b47ba | |||

| cf15cf587f | |||

| 134c7add57 | |||

| ac0791826a | |||

| d2622b7798 | |||

| f82cbf3a27 | |||

| aa7e3df8d6 | |||

| ad00d7bd9c | |||

| 8d1f82c34d | |||

| 0cb2036e3a | |||

| 2b1e90b0a5 | |||

| f2ccc133a2 | |||

| 5e824b39dd | |||

| 41efcae64b | |||

| cf5671d058 | |||

| 2570bba6b1 | |||

| 71cb7d5c97 | |||

| 0df6541d5e | |||

| 52145caf7e | |||

| 86a50ae9e1 | |||

| c64cfb74f3 | |||

| 26153d9919 | |||

| 5af922722f | |||

| b70d730b32 | |||

| bf4b856e0c | |||

| 0cf0ae6755 | |||

| 29061cff39 | |||

| b7eec4c89f | |||

| a3854c229e | |||

| dcde256433 | |||

| 931bdbd5cd | |||

| b7bd59c344 | |||

| 2dbf9a6017 | |||

| fe93bba457 | |||

| 6e35f54738 | |||

| 089294a85e | |||

| 25c0b44641 | |||

| 58c1589688 | |||

| bb53f69016 | |||

| 75659ca042 | |||

| fc00594ea4 | |||

| 8d26be8b89 | |||

| af4e95ae0f | |||

| ffb4a7aa78 | |||

| dcaeacc507 | |||

| 4f377e6710 | |||

| 122db85727 | |||

| a598e4aa74 | |||

| 733b31ebbd | |||

| dac9775de0 | |||

| 46c19a5783 | |||

| aaeb5ba52f | |||

| 9f5a3d6064 | |||

| 4cdf873f98 | |||

| b43ae748c3 | |||

| 02ddd89653 | |||

| bbe6eccefe | |||

| 6677a7b66a | |||

| 75c37fcc73 | |||

| 5be71a8a9d | |||

| b9ae7d1ebb | |||

| 8b02e0f57c | |||

| 342cc7350a | |||

| 2335a51ced | |||

| 868df1824c | |||

| 83c11f0f9d | |||

| 1022f1b0c6 | |||

| c2c80232e3 | |||

| 115f4e54b8 | |||

| 669b1694b8 | |||

| 2128c58fbe | |||

| e12e154877 | |||

| 73d3c17507 | |||

| 7f647a93da | |||

| ecb3dbbb60 | |||

| cc907ba69d | |||

| 5a45eef1dc | |||

| 0d980e89bc | |||

| ef87832bff | |||

| 94507d1aca | |||

| 89924a38ff | |||

| 7faa2b8698 | |||

| 65352ce8e7 | |||

| f1988ee1e3 | |||

| 82ac8eb731 | |||

| ae47e34fa5 | |||

| 28e781efc3 | |||

| 5c3ceb8355 | |||

| c9113b381d | |||

| 75e69eecfa | |||

| f3c4acc723 | |||

| 2a0095e322 | |||

| 9ad5f3c65b | |||

| 579de64d49 | |||

| d4200a7b1e | |||

| 84477835dc | |||

| 504b318ef1 | |||

| f154c8c490 | |||

| d4959bc157 | |||

| 87e025fe22 | |||

| 8049323ca8 | |||

| b38c7ea2ff | |||

| 239b925fb3 | |||

| 60da7f7aaf | |||

| 8646ff4927 | |||

| 59be94a81f | |||

| 437c485e5c | |||

| 79a58da6a9 | |||

| ae29641a18 | |||

| 9c3f65bca9 | |||

| 086365b4c4 | |||

| 64044da49c | |||

| 7b5b7feb63 | |||

| 2e059f8504 | |||

| 207b6686d1 | |||

| abfd7d6951 | |||

| 7fc166b5ba | |||

| 021953d59a | |||

| bbe89df2ff | |||

| a638ec5911 | |||

| 26272a3600 | |||

| 8454eb79d0 | |||

| 796f4b981b | |||

| 34514d65bc | |||

| 2786357082 | |||

| 4badeacd1d | |||

| 63a0ba6ec8 | |||

| 9a4ce6d70e | |||

| 35ee2d0ce1 | |||

| b04716d40d | |||

| 051fa6f1f1 | |||

| 8dc1b07e75 | |||

| bee1e7ebaf | |||

| f3f0b9f0c5 | |||

| a5cf745e1c | |||

| 273b800047 | |||

| 6c1f1c2a7a | |||

| 9c62f8d81f | |||

| 82aef7ebe2 | |||

| 57636d3d5f | |||

| dc87effc0a | |||

| f0c9823e9f | |||

| 0b91dd6163 | |||

| 4955c6f13a | |||

| 2e7beca9ba | |||

| 59c1b9983d | |||

| f7083e0923 | |||

| 6d4defdf96 | |||

| b826f837f8 | |||

| 5855e18a4e | |||

| 3f38c0a245 | |||

| cfe8b3fc55 | |||

| e9ee020b5f | |||

| 1bcf3891b4 | |||

| 5456de63e9 | |||

| 9026c70952 | |||

| 99dc4ea4a9 | |||

| 0aaa500f7c | |||

| 5f5be83a17 | |||

| 7e44005a0f | |||

| ee3fb985ea | |||

| 2a268aa528 | |||

| cd262cf860 | |||

| a1889c32d4 | |||

| d42d024d9c | |||

| 7b88b8d159 | |||

| 4131071b9a | |||

| ef6bd7e3b8 | |||

| 374bff6550 | |||

| 0a46bbe4f9 | |||

| f4971be236 | |||

| 421273f862 | |||

| 2c7f229883 | |||

| 904eabad2f | |||

| 8b233f6be4 | |||

| 08fc821ca9 | |||

| 81706f2d75 | |||

| 7b50c3910f | |||

| 2d635386af | |||

| a604dcb4c4 | |||

| 7736b9cac6 | |||

| d2dd005a59 | |||

| 6e8f99d9b2 | |||

| 685de30047 | |||

| 17cc9ab07f | |||

| 3f10bf44db | |||

| 27984e469a | |||

| a2c05b112e | |||

| a578c1a5e3 | |||

| 500aaed48e | |||

| 4a94da8a94 | |||

| cc447c0fda | |||

| 0ae69bdcd9 | |||

| 5ba20a94e8 | |||

| f168c377fd | |||

| dfb754dd13 | |||

| 455050e19c | |||

| 317031f455 | |||

| b132ce1944 | |||

| 8b226652aa | |||

| 2c7fe3ed8d | |||

| 3d5f2b3c28 | |||

| 7a79afe4a6 | |||

| 1f7387a39b | |||

| 0fc2bee144 | |||

| 791ae852a2 | |||

| c2fcd876d7 | |||

| d239d4a495 | |||

| aec05ef602 | |||

| e5d46d998b | |||

| b2e3299539 | |||

| c308a6459f | |||

| 4eb1bc08a7 | |||

| ff5e1c635f | |||

| 6149c2fcb5 | |||

| d7cd80dce5 | |||

| 6264508f5e | |||

| a3869dd4c1 | |||

| a3d2831f8c | |||

| 4cd1fa8c38 | |||

| 1511dc43d7 | |||

| 3d82807965 | |||

| 4180571660 | |||

| 421d9aa501 | |||

| 898f4971a2 | |||

| 7ab3331f01 | |||

| b4ca414492 | |||

| 73abea088a | |||

| 2376dfc139 | |||

| d2f95d5319 | |||

| cd96843699 | |||

| ca80bc33c6 | |||

| 19607886f7 | |||

| 3c11a91f77 | |||

| b781fdbd04 | |||

| 765d901530 | |||

| 3cedbc493e | |||

| 0488d0a82f | |||

| f0be595e4c | |||

| 55100854d6 | |||

| 600a1f8866 | |||

| 95bf68f3f5 | |||

| bcdb058492 | |||

| 7f46aef624 | |||

| e779496dfb | |||

| 3d77fa5fbc | |||

| 250830ade9 | |||

| 7b2eb7ccfc | |||

| 458c27c6e9 | |||

| a49e664e63 | |||

| f20380d6b4 | |||

| 05a5e551d6 | |||

| d278b71cb2 | |||

| a485c141d5 | |||

| 8a9f6b9ae3 | |||

| 7144090528 | |||

| ee0015ac38 | |||

| 8b7f7f1088 | |||

| c95c6a75f8 | |||

| 44bf79e35f | |||

| bb654f286c | |||

| 1acd2aa8cf | |||

| 18d3659b91 | |||

| 63a4bafa72 | |||

| 4eb2e84c9f | |||

| 73c7fb87e8 | |||

| c1496722aa | |||

| d9f81b0c8c | |||

| d69beaabe1 | |||

| b7a0bd6347 | |||

| 882ea6b672 | |||

| 736d3eabae | |||

| af53197c04 | |||

| cf186c5762 | |||

| f384a2ce85 | |||

| 803b76e997 | |||

| 230d7c3dd6 | |||

| 4f629dd982 | |||

| 4fdd891b54 | |||

| 64a892321a | |||

| a80991f2b3 | |||

| c9cd81319a | |||

| 521ae21632 | |||

| bcd6606a16 | |||

| 52ebb88205 | |||

| 1e91d09be7 | |||

| 02c573986b | |||

| f2de486658 | |||

| 900b4f2644 | |||

| 1cfaa9afb6 | |||

| 801468d70d | |||

| 0601e05978 | |||

| 7ce11b5d1c | |||

| f2d4799491 | |||

| ebc458cd32 | |||

| 43cd631579 | |||

| bc824c1a6c | |||

| 4223aff840 | |||

| f107c6c2ca | |||

| 7daf14caa7 | |||

| ded28c705f | |||

| 778bec0777 | |||

| 6967cf7f86 | |||

| 0ee3ec86bd | |||

| e4c47e8417 | |||

| 98ae80f4ed | |||

| 876c77d0bc | |||

| d44a6f7541 | |||

| 9040c04d27 | |||

| ebbdef0538 | |||

| bfbee988d0 | |||

| 1d4d0272ca | |||

| 77a76f0783 | |||

| d9079de262 | |||

| b3d732a1a1 | |||

| 52f1a02938 | |||

| fe51669e85 | |||

| 670a6c50c9 | |||

| 86c1aaf7d8 | |||

| 658e787b60 | |||

| 40c50aef50 | |||

| a24c2bbe73 | |||

| bdbe90b891 | |||

| 3236be7877 | |||

| 1dca17fdb4 | |||

| 785e971698 | |||

| 2bfa20ff85 | |||

| 474a9af78d | |||

| 61425eacb8 | |||

| 4870def1fb | |||

| 3e73fb9233 | |||

| 5ad6061c3f | |||

| fae019b974 | |||

| 3bb06d8364 | |||

| c9c9afa472 | |||

| bd0671e123 | |||

| 6f3ec8d21f | |||

| 9a0bf13feb | |||

| 9ff1a6f0cd | |||

| a59f64cae1 | |||

| a4ecd09723 | |||

| f159dfd15a | |||

| 9e8ec86fa3 | |||

| 62bb78f58d | |||

| 893011c3ba | |||

| 880cb8e7cc | |||

| 85f83f2c74 | |||

| 4751e459cc | |||

| 138efa6cec | |||

| a68e50935e | |||

| e8f5fb35ac | |||

| 6af27669b0 | |||

| e162f24119 | |||

| dbcc462a48 | |||

| 2d5313639a | |||

| 38af0f436d | |||

| 888c2ffb20 | |||

| 588593f619 | |||

| 2cdd515b12 | |||

| 0aad71d46e | |||

| 6f9285322d | |||

| 68c7f992fa | |||

| 1feff408ff | |||

| f752e02487 | |||

| c9c7fb0a27 | |||

| de680c2a8e | |||

| 03695ba4c5 | |||

| c2e2960bf7 | |||

| 385d2a580c | |||

| 7e02652068 | |||

| ae29c9b4a0 | |||

| 078f917e61 | |||

| b65f04d500 | |||

| 6acaffe581 | |||

| e47ef42a33 | |||

| b950e33d81 | |||

| ec8cfc77ad | |||

| 00a16db9cd | |||

| 4b9f115586 | |||

| c5cc91443e | |||

| 48d94143e7 | |||

| 8174a05156 | |||

| 63cf6363a2 | |||

| cc6de605ac | |||

| d0151d2b79 | |||

| 6b45d453b8 | |||

| b992a84d67 | |||

| cb362e9052 | |||

| ccb478c1f6 | |||

| 6af3680f99 | |||

| e6c3c215ab | |||

| 5c66bbde01 | |||

| 77dd1bdd4a | |||

| 6268d540a8 | |||

| 5918e38747 | |||

| 3cfb571356 | |||

| 5eb80f8027 | |||

| f6e5f2439d | |||

| edf6272374 | |||

| 7f6a4b0ce3 | |||

| 3be5f25f2f | |||

| 1b6cdd5637 | |||

| f752e55929 | |||

| ebb089b3f1 | |||

| ad6303f031 | |||

| 828b9d6717 | |||

| 444adcd1ca | |||

| 69ac305883 | |||

| 2ff57df2a0 | |||

| 7077f4cbe2 | |||

| 266f85f607 | |||

| d90ab90145 | |||

| 48018b3f5b | |||

| 15584e7062 | |||

| d415b17146 | |||

| 9ed953e8c3 | |||

| b60a98bd6e | |||

| a15e30d4b3 | |||

| d5d133353f | |||

| 6badc98510 | |||

| ea8bfb46ce | |||

| 58860ed19f | |||

| 583f652197 | |||

| 3215dcff78 | |||

| 38fdd17067 | |||

| 807ccd15ba | |||

| 1c923d2f9e | |||

| 2676b21400 | |||

| fd5ef94b5a | |||

| 02c7eea236 | |||

| 34d1805b54 | |||

| 753eaa8266 | |||

| 0b39c6f98e | |||

| 55b8d0db4d | |||

| 3d7969d8a2 | |||

| 041de8082a | |||

| 3da1fa4d88 | |||

| 39df21de30 | |||

| 8cbb7d7362 | |||

| 10a0c47210 | |||

| 89bf3765f3 | |||

| 8181bc591b | |||

| ca877e689c | |||

| c6048e2bab | |||

| 60015aee04 | |||

| 43e6741071 | |||

| b91f6bcbff | |||

| 64e2f1b949 | |||

| 13a2f05776 | |||

| 903374ae9b | |||

| d366a07403 | |||

| e94921174a | |||

| dea5ab2f79 | |||

| 5e11078f34 | |||

| d7670cd4ff | |||

| 29f3230089 | |||

| d003efb522 | |||

| 97e772e87a | |||

| 0b33615979 | |||

| 249cead13e | |||

| 7c96dea359 | |||

| 374c9921fd | |||

| fb55ab8c33 | |||

| 13485074ac | |||

| 4944c965e4 | |||

| 83c5b3bc38 | |||

| 7fc42de758 | |||

| 0a30bd74c1 | |||

| 9b12a79c8d | |||

| 0dcde23b05 | |||

| 8dc15b88eb | |||

| d20c952f92 | |||

| c2eeeb27fd | |||

| 180d8b67e4 | |||

| 9c989c46ee | |||

| 51633f509d | |||

| 705228ecc2 | |||

| 740f6d2258 | |||

| 3b9ef5ccab | |||

| ab74e7f24f | |||

| be9a670fb7 | |||

| 6e43e7a146 | |||

| ab2093926a | |||

| 916b90f415 | |||

| 2ef3db9fab | |||

| 6987b6fd58 | |||

| 078179e9b8 | |||

| 50ccecdff5 | |||

| e838a8c28a | |||

| e5f7eeedbf | |||

| d1948b5a00 | |||

| c07f700c53 | |||

| c934a30f66 | |||

| 310d01d8a2 | |||

| f330739bc7 | |||

| 58626721ad | |||

| 584c8c07b8 | |||

| a93ec03d2c | |||

| 7bd3a8e004 | |||

| 912a5f951e | |||

| 6869089111 | |||

| 6fd32fe850 | |||

| 81e2b36d38 | |||

| 7d811afab1 | |||

| 39f5aaab8b | |||

| 5fc81dd6c8 | |||

| 491a530d90 | |||

| c12da50f9b | |||

| 41e8500fc5 | |||

| a7f59ef3c1 | |||

| f4466c8c0a | |||

| bc6d6b20fa | |||

| 01326936e6 | |||

| c960e8d351 | |||

| fc69d31914 | |||

| 8d425e127b | |||

| 3cfb07ea38 | |||

| 76679ffb92 | |||

| dc2ec925d7 | |||

| 81d6ba3ec5 | |||

| 014bdaa355 | |||

| 0c60fdd2ce | |||

| 43d986d14e | |||

| 123d7c6a37 | |||

| 5ac7df17f9 | |||

| bc0dde696a | |||

| c323bd3c87 | |||

| 5c672adc21 | |||

| 2f80747dc7 | |||

| 95749ed0e3 | |||

| 94eea3abec | |||

| fe32159673 | |||

| 07aa2e1260 | |||

| 6fec8fad57 | |||

| 84df487f7d | |||

| 49708e92d3 | |||

| daadae7987 | |||

| 2b788d06b7 | |||

| 90cd9bd533 | |||

| d63506f98c | |||

| 17de6876bb | |||

| fc540395f9 | |||

| da2b4962a9 | |||

| 3abe305a21 | |||

| 46e8c09bd8 | |||

| e683c34a89 | |||

| 54e4f75081 | |||

| 9f256f0929 | |||

| ef169a6652 | |||

| eaec25f940 | |||

| 6a87d8975c | |||

| b8cf5f9427 | |||

| 2f1e585446 | |||

| f9309b46aa | |||

| 22f5985f1b | |||

| c59c38e50e | |||

| 232e1bb8a3 | |||

| 1fbb34620c | |||

| 89f5b803c9 | |||

| 55179101cd | |||

| 132495b1fc | |||

| a03d7bf5cd | |||

| 3bf225e85f | |||

| cc2bb290c4 | |||

| 878ca8c5c5 | |||

| 4bc41d81ee | |||

| f6ca176fc8 | |||

| 0bec360a31 | |||

| 04f30710c5 | |||

| 98c0a2af87 | |||

| 9db42c1769 | |||

| 849bced602 | |||

| 27f29019ef | |||

| 8642a41f2b | |||

| bf902ef5bc | |||

| 7656b55c22 | |||

| 7d3d4b9443 | |||

| 15c093c5e2 | |||

| 116166f62d | |||

| 26b19dde75 | |||

| c8ddc68f13 | |||

| 7c9681007c | |||

| 13206e4976 | |||

| 2f18302d32 | |||

| ddb21d151d | |||

| c64a9fb456 | |||

| ee19b4f86e | |||

| 14239e584f | |||

| 112aecf6eb | |||

| c1783d77d7 | |||

| f089abb3c5 | |||

| 8e551f5e32 | |||

| 290960c3b5 | |||

| 62af09adbe | |||

| e39c0b34e5 | |||

| 8ad90807ee | |||

| 533b3170a7 | |||

| 7732f3f5fb | |||

| f52f02a434 | |||

| 4d7d4d673e | |||

| 9a437f0d38 | |||

| c385f8bb6e | |||

| fa44be2a9d | |||

| 117ab0c141 | |||

| 7488d19ae6 | |||

| 60524ad5f2 | |||

| fad7ff8bf0 | |||

| 383d445ba1 | |||

| 803dcb0800 | |||

| fde320e2f2 | |||

| 8ea97141ea | |||

| 9f232bac58 | |||

| 8295cc11c0 | |||

| 70f80adb9a | |||

| 9a7cac1e07 | |||

| c584a25ec9 | |||

| bff32bf7bc | |||

| d0e7450389 | |||

| 4da89ac8a9 | |||

| f7032f7d9a | |||

| 7c7e3931a0 | |||

| 6be3d62d89 | |||

| 6f509a8a1e | |||

| 4379fabf16 | |||

| 6b66e1a077 | |||

| c11a3e0fdc | |||

| 3418033c55 | |||

| caa9a846ed | |||

| 8ee76bcea0 | |||

| 47325cbe01 | |||

| e0c8417297 | |||

| 9238ee9572 | |||

| 64af37e0cd | |||

| 9f9b79f30b | |||

| 265f41887f | |||

| 4f09e5d04c | |||

| 434f321336 | |||

| f4e0d1be58 | |||

| e5bae0604b | |||

| e7da083c31 | |||

| 367c32dabe | |||

| e054238af6 | |||

| e8faf6d59a | |||

| baa4ea3cd8 | |||

| 75ef0f0329 | |||

| 65185c0011 | |||

| eb94613d7d | |||

| 67f4f4fb49 | |||

| a7ecf4ac4c | |||

| 45765b625a | |||

| aa0a184ebe | |||

| 069f9f0d5d | |||

| c82b520ea8 | |||

| 9d6e5bde4a | |||

| 0eb3669fbf | |||

| 30449b6054 | |||

| f5f71a19b8 | |||

| 0135971769 | |||

| 8579795c40 | |||

| 9d77fd7eec | |||

| 8c40d1bd72 | |||

| 7a0bc7d888 | |||

| 1e07014f86 | |||

| 49281b24e5 | |||

| a8b1980de4 | |||

| b8cd5f0482 | |||

| cc9f0788aa | |||

| 209910299d | |||

| 17926ff5d9 | |||

| 957fb0667c | |||

| 8d17aed785 | |||

| 7ef8d5ddde | |||

| 9930a2e167 | |||

| a86be9ebf2 | |||

| ad6665c8b6 | |||

| 923162ae9d | |||

| dd2bd67049 | |||

| d500bbff04 | |||

| e759bd1a99 | |||

| 94daf4cea4 | |||

| 2379792e0a | |||

| dba6d7a8a6 | |||

| 086c206b76 | |||

| 5dd567deef | |||

| b6d8f737ca | |||

| 491ba9da84 | |||

| a420a9293f | |||

| c1bc5f6a07 | |||

| 9834c251d0 | |||

| 54340ed4c6 | |||

| 96a0a9202c | |||

| a4c081d3a1 | |||

| d1b6206858 | |||

| 0eb6849fe3 | |||

| b725fdb093 | |||

| 1436bb1ff2 | |||

| 5a44c36b1f | |||

| 5d990502cb | |||

| 64735da716 | |||

| 95b82aa6dc | |||

| f09952f3d7 | |||

| b98e04dc56 | |||

| cb436250da | |||

| 4376032e3a | |||

| c231331e05 | |||

| 624c151ca2 | |||

| 5d0356f74b | |||

| b019416518 | |||

| 4fcd9e3bd6 | |||

| 66bf889c39 | |||

| a2811842c8 | |||

| 1929601425 | |||

| 282afee47e | |||

| e701ccc949 | |||

| 6543497c17 | |||

| 7d9af5a937 | |||

| 720c54a5bb | |||

| 5dca3c41f2 | |||

| 929546f60b | |||

| cb0ce9986c | |||

| 064eba00fd | |||

| a4336a39d6 | |||

| 298989c4b9 | |||

| 48c28c2267 | |||

| d76ecbc9c9 | |||

| 79fb9c00aa | |||

| c9e03f37ce | |||

| aa5f1699a7 | |||

| e1e9126d03 | |||

| 672a4b3723 | |||

| 955f76baab | |||

| 7da8a5e2d1 | |||

| ff82fbf112 | |||

| 8503a0a58f | |||

| b1e9512f44 | |||

| 608def9c78 | |||

| bcb21bc1d8 | |||

| f63096620a | |||

| 9b26892bae | |||

| 572475ce14 | |||

| 876d7995e1 | |||

| b8655e30d4 | |||

| 7cf0d55546 | |||

| ce60b960c0 | |||

| cebcb5b92d | |||

| 11a0f96f5e | |||

| 74ebaf1744 | |||

| f7496ea6d1 | |||

| bebba7dc1f | |||

| afb2bf442c | |||

| c7de48c982 | |||

| f906112c03 | |||

| 8ef864fb39 | |||

| 1c9b5ab53c | |||

| c10faae3b5 | |||

| 2104dd5a0a | |||

| fbe64037db | |||

| d8c50b150c | |||

| 8871bb2d8e | |||

| a148454376 | |||

| be518b569b | |||

| c998fbe2ae | |||

| 9f12cd0c09 | |||

| 0d0fee1ca1 | |||

| a0410c4677 | |||

| 8fe464cfa3 | |||

| 3e2d6d9e8b | |||

| 32d677787b | |||

| dfd1c4eab3 | |||

| 36bb1f989d | |||

| 684f4c59e0 | |||

| 1b77e8a69a | |||

| 662e10c3e0 | |||

| c935fdb12f | |||

| 9e16937914 | |||

| f705202381 | |||

| f5532ad9f7 | |||

| 570e71f050 | |||

| c9cc4b4369 | |||

| 7111aa3b18 | |||

| 12eba4bcc7 | |||

| 4610de8fdd | |||

| 3fcc2dd944 | |||

| 8299bae2d4 | |||

| 604ccf7552 | |||

| f3dd47948a | |||

| c3bb207488 | |||

| 9009d1bfb3 | |||

| fa4d9e8bcb | |||

| 34b77efc87 | |||

| 5ca0ccbcd2 | |||

| 6aa4e52480 | |||

| f98e9a2ad7 | |||

| c6134cc25b | |||

| 0443b39264 | |||

| 8b0b8efbcb | |||

| 97449cee43 | |||

| ab5252c750 | |||

| 05a27cb34d | |||

| b02eab57d2 | |||

| b8d52cc3e4 | |||

| 7d9bab9508 | |||

| 944181a30e | |||

| d8dd50505a | |||

| d78082f5e4 | |||

| 08e501e57b | |||

| 29a607427d | |||

| afb830c91f | |||

| c1326ac3d5 | |||

| 513a1adf57 | |||

| 7871b38c80 | |||

| b34d2d7dee | |||

| d7dfa8c22d | |||

| 8df274f0af | |||

| 07c4ebb7f2 | |||

| 49605b257d | |||

| fa4e232d73 | |||

| bd84cf6586 | |||

| 6e37f70d55 | |||

| d97112d7f0 | |||

| e57bba17c1 | |||

| 959da300cc | |||

| ba90e43f72 | |||

| 6effd64ab0 | |||

| e18da7c7c1 | |||

| 0297edaf1f | |||

| b317d13b44 | |||

| bb22522e45 | |||

| 41053b6d0b | |||

| bd3fe5fac9 | |||

| 10a70a238b | |||

| 0bead4d410 | |||

| 4a7156de43 | |||

| d88d1b2a09 | |||

| a7186328e0 | |||

| 5e3c7816bd | |||

| a2fa60fa31 | |||

| ceb65c2669 | |||

| fd209ef1a9 | |||

| 471f036444 | |||

| 6ec0e5834c | |||

| 4c94754661 | |||

| 831e2cbdc9 | |||

| 3550f703c3 | |||

| ea1d57b461 | |||

| 49386309c8 | |||

| b7a95ab7cc | |||

| bf35b730de |

2

.codecov.yml

Normal file

@ -0,0 +1,2 @@

ignore:
  - "src/bin"

4

.gitignore

vendored

@ -1,4 +1,4 @@

Cargo.lock
/target/
**/*.rs.bk
Cargo.lock
.cargo

22

.travis.yml

@ -1,22 +0,0 @@

language: rust
required: sudo
services:
  - docker
matrix:
  allow_failures:
    - rust: nightly
  include:
    - rust: stable
    - rust: nightly
      env:
        - FEATURES='unstable'
before_script: |
  export PATH="$PATH:$HOME/.cargo/bin"
  rustup component add rustfmt-preview
script:
  - cargo fmt -- --write-mode=diff
  - cargo build --verbose --features "$FEATURES"
  - cargo test --verbose --features "$FEATURES"
after_success: |
  docker run -it --rm --security-opt seccomp=unconfined --volume "$PWD:/volume" elmtai/docker-rust-kcov
  bash <(curl -s https://codecov.io/bash) -s target/cov

63

Cargo.toml

@ -1,19 +1,68 @@

[package]
name = "silk"
description = "A silky smooth implementation of the Loom architecture"
version = "0.1.1"
name = "solana"
description = "The World's Fastest Blockchain"
version = "0.6.0-beta"
documentation = "https://docs.rs/solana"
homepage = "http://solana.com/"
repository = "https://github.com/solana-labs/solana"
authors = [
    "Anatoly Yakovenko <aeyakovenko@gmail.com>",
    "Greg Fitzgerald <garious@gmail.com>",
    "Anatoly Yakovenko <anatoly@solana.com>",
    "Greg Fitzgerald <greg@solana.com>",
    "Stephen Akridge <stephen@solana.com>",
]
license = "Apache-2.0"

[[bin]]
name = "solana-client-demo"
path = "src/bin/client-demo.rs"

[[bin]]
name = "solana-fullnode"
path = "src/bin/fullnode.rs"

[[bin]]
name = "solana-genesis"
path = "src/bin/genesis.rs"

[[bin]]
name = "solana-genesis-demo"
path = "src/bin/genesis-demo.rs"

[[bin]]
name = "solana-mint"
path = "src/bin/mint.rs"

[[bin]]
name = "solana-mint-demo"
path = "src/bin/mint-demo.rs"

[badges]
codecov = { repository = "loomprotocol/silk", branch = "master", service = "github" }
codecov = { repository = "solana-labs/solana", branch = "master", service = "github" }

[features]
unstable = []
ipv6 = []
cuda = []
erasure = []

[dependencies]
rayon = "1.0.0"
itertools = "0.7.6"
sha2 = "0.7.0"
generic-array = { version = "0.9.0", default-features = false, features = ["serde"] }
serde = "1.0.27"
serde_derive = "1.0.27"
serde_json = "1.0.10"
ring = "0.12.1"
untrusted = "0.5.1"
bincode = "1.0.0"
chrono = { version = "0.4.0", features = ["serde"] }
log = "^0.4.1"
env_logger = "^0.4.1"
matches = "^0.1.6"
byteorder = "^1.2.1"
libc = "^0.2.1"
getopts = "^0.2"
isatty = "0.1"
futures = "0.1"
rand = "0.4.2"
pnet = "^0.21.0"

2

LICENSE

@ -1,4 +1,4 @@

Copyright 2018 Anatoly Yakovenko <anatoly@loomprotocol.com> and Greg Fitzgerald <garious@gmail.com>
Copyright 2018 Anatoly Yakovenko, Greg Fitzgerald and Stephen Akridge

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.

169

README.md

@ -1,22 +1,100 @@

[](https://crates.io/crates/silk)
[](https://docs.rs/silk)
[](https://travis-ci.org/loomprotocol/silk)
[](https://codecov.io/gh/loomprotocol/silk)
[](https://crates.io/crates/solana)
[](https://docs.rs/solana)
[](https://buildkite.com/solana-labs/solana)
[](https://codecov.io/gh/solana-labs/solana)

# Silk, A Silky Smooth Implementation of the Loom Architecture
Disclaimer
===

Loom is a new architecture for a high performance blockchain. Its whitepaper boasts a theoretical
throughput of 710k transactions per second on a 1 Gbps network. The first implementation of the
whitepaper is happening in the 'loomprotocol/loom' repository. That repo is aggressively moving
forward, looking to de-risk technical claims as quickly as possible. This repo is quite a bit
different philosophically. Here we assume the Loom architecture is sound and worthy of building
a community around. We care a great deal about quality, clarity and a short learning curve. We
avoid the use of `unsafe` Rust and write tests for *everything*. Optimizations are only
added when corresponding benchmarks are also added that demonstrate real performance boosts. We
expect the feature set here will always be a long ways behind the loom repo, but that this is
an implementation you can take to the bank, literally.
All claims, content, designs, algorithms, estimates, roadmaps, specifications, and performance measurements described in this project are done with the author's best effort. It is up to the reader to check and validate their accuracy and truthfulness. Furthermore nothing in this project constitutes a solicitation for investment.

# Developing
Solana: High Performance Blockchain
===

Solana™ is a new architecture for a high performance blockchain. It aims to support
over 700 thousand transactions per second on a gigabit network.
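
As a rough sanity check on that figure, the sketch below divides link bandwidth by transaction size. It assumes a decimal gigabit (10^9 bits per second), no protocol overhead, and the 178-byte average transaction size quoted in the Introduction. `max_tps` is a hypothetical helper name, not something from the codebase.

```rust
/// Idealized upper bound on transactions per second for a link that
/// carries `link_bits_per_sec`, assuming every transaction is exactly
/// `tx_bytes` long and nothing else is sent on the wire.
/// Panics if `tx_bytes` is 0.
fn max_tps(link_bits_per_sec: u64, tx_bytes: u64) -> u64 {
    link_bits_per_sec / (tx_bytes * 8)
}
```

Under these assumptions `max_tps(1_000_000_000, 178)` evaluates to 702,247, which is consistent with "over 700 thousand".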

Introduction
===

It's possible for a centralized database to process 710,000 transactions per second on a standard gigabit network if the transactions are, on average, no more than 178 bytes. A centralized database can also replicate itself and maintain high availability without significantly compromising that transaction rate using the distributed system technique known as Optimistic Concurrency Control [H.T.Kung, J.T.Robinson (1981)]. At Solana, we're demonstrating that these same theoretical limits apply just as well to blockchain on an adversarial network. The key ingredient? Finding a way to share time when nodes can't trust one another. Once nodes can trust time, suddenly ~40 years of distributed systems research becomes applicable to blockchain! Furthermore, and much to our surprise, it can be implemented using a mechanism that has existed in Bitcoin since day one. The Bitcoin feature is called nLockTime and it can be used to postdate transactions using block height instead of a timestamp. As a Bitcoin client, you'd use block height instead of a timestamp if you don't trust the network. Block height turns out to be an instance of what's being called a Verifiable Delay Function in cryptography circles. It's a cryptographically secure way to say time has passed. In Solana, we use a far more granular verifiable delay function, a SHA-256 hash chain, to checkpoint the ledger and coordinate consensus. With it, we implement Optimistic Concurrency Control and are now well en route towards that theoretical limit of 710,000 transactions per second.
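
To make the hash-chain idea concrete, here is a minimal sketch of a sequential chain in which each value is the hash of the previous one, so producing tick N requires first producing ticks 1 through N-1. It is an illustration under stated assumptions, not Solana's implementation: the standard library's `DefaultHasher` stands in for SHA-256 purely to keep the example dependency-free (it is not cryptographically secure), and `next_hash`, `hash_chain`, and `verify_chain` are hypothetical names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// One step of the chain. ASSUMPTION: DefaultHasher is a non-cryptographic
/// stand-in for the SHA-256 step described in the text.
fn next_hash(prev: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    prev.hash(&mut hasher);
    hasher.finish()
}

/// Generate `ticks` chained hashes starting from `seed`.
/// Each entry depends on the one before it, so the work is sequential.
fn hash_chain(seed: u64, ticks: usize) -> Vec<u64> {
    let mut chain = Vec::with_capacity(ticks);
    let mut current = seed;
    for _ in 0..ticks {
        current = next_hash(current);
        chain.push(current);
    }
    chain
}

/// Recompute every link and confirm the recorded chain is consistent.
fn verify_chain(seed: u64, chain: &[u64]) -> bool {
    let mut prev = seed;
    for &recorded in chain {
        if next_hash(prev) != recorded {
            return false;
        }
        prev = recorded;
    }
    true
}
```

Generation is inherently sequential, while each link in `verify_chain` only needs its predecessor, so in principle a verifier could check links independently of one another. Altering any single entry makes verification fail at that link.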

Running the demo
===

First, install Rust's package manager Cargo.

```bash
$ curl https://sh.rustup.rs -sSf | sh
$ source $HOME/.cargo/env
```

Now check out the code from GitHub:

```bash
$ git clone https://github.com/solana-labs/solana.git
$ cd solana
```

The fullnode server is initialized with a ledger from stdin and
generates new ledger entries on stdout. To create the input ledger, we'll need
to create *the mint* and use it to generate a *genesis ledger*. It's done in
two steps because the mint-demo.json file contains private keys that will be
used later in this demo.

```bash
$ echo 1000000000 | cargo run --release --bin solana-mint-demo > mint-demo.json
$ cat mint-demo.json | cargo run --release --bin solana-genesis-demo > genesis.log
```

Before you start the server, make sure you know the IP address of the machine you want to be the leader for the demo, and make sure that UDP ports 8000-10000 are open on all the machines you want to test with. Now you can start the server:

```bash
$ cat ./multinode-demo/leader.sh
#!/bin/bash
export RUST_LOG=solana=info
sudo sysctl -w net.core.rmem_max=26214400
cat genesis.log leader.log | cargo run --release --features cuda --bin solana-fullnode -- -s leader.json -l leader.json -b 8000 -d 2>&1 | tee leader-tee.log
$ ./multinode-demo/leader.sh
```

Wait a few seconds for the server to initialize. It will print "Ready." when it's safe
to start sending it transactions.

Now you can start some validators:

```bash
$ cat ./multinode-demo/validator.sh
#!/bin/bash
rsync -v -e ssh $1:~/solana/mint-demo.json .
rsync -v -e ssh $1:~/solana/leader.json .
rsync -v -e ssh $1:~/solana/genesis.log .
rsync -v -e ssh $1:~/solana/leader.log .
rsync -v -e ssh $1:~/solana/libcuda_verify_ed25519.a .
export RUST_LOG=solana=info
sudo sysctl -w net.core.rmem_max=26214400
cat genesis.log leader.log | cargo run --release --features cuda --bin solana-fullnode -- -l validator.json -s validator.json -v leader.json -b 9000 -d 2>&1 | tee validator-tee.log
$ ./multinode-demo/validator.sh ubuntu@10.0.1.51 #The leader machine
```


Then, in a separate shell, let's execute some transactions. Note we pass in
the JSON configuration file here, not the genesis ledger.

```bash
$ cat ./multinode-demo/client.sh
#!/bin/bash
export RUST_LOG=solana=info
rsync -v -e ssh $1:~/solana/leader.json .
rsync -v -e ssh $1:~/solana/mint-demo.json .
cat mint-demo.json | cargo run --release --bin solana-client-demo -- -l leader.json -c 8100 -n 1
$ ./multinode-demo/client.sh ubuntu@10.0.1.51 #The leader machine
```

Try starting more validators and rerunning the client demo!

Developing
===

Building
---

@ -29,11 +107,17 @@ $ source $HOME/.cargo/env

```bash
$ rustup component add rustfmt-preview
```

If your rustc version is lower than 1.25.0, please update it:

```bash
$ rustup update
```

Download the source code:

```bash
$ git clone https://github.com/loomprotocol/silk.git
$ cd silk
$ git clone https://github.com/solana-labs/solana.git
$ cd solana
```

Testing

@ -42,9 +126,26 @@ Testing

Run the test suite:

```bash
cargo test
$ cargo test
```

To emulate all the tests that will run on a Pull Request, run:
```bash
$ ./ci/run-local.sh
```

Debugging
---

There are some useful debug messages in the code; you can enable them on a per-module and per-level
basis with the normal RUST\_LOG environment variable. Run the fullnode with this syntax:
```bash
$ cat genesis.log | RUST_LOG=solana::streamer=debug,solana::server=info ./target/release/solana-fullnode > transactions0.log
```
to see the debug and info sections for streamer and server respectively. Generally
we are using debug for infrequent debug messages, trace for potentially frequent messages and
info for performance-related logging.
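
The filter value used above is a comma-separated list of `module=level` directives. The sketch below shows how such a string breaks down into pairs. It is a simplified illustration only, not how the `env_logger` crate actually parses the variable, and `parse_log_filter` is a hypothetical name.

```rust
/// Split a RUST_LOG-style filter such as
/// "solana::streamer=debug,solana::server=info" into (module, level) pairs.
/// A directive without '=' is returned with `None` as its level; how a real
/// logger interprets that case is deliberately left out of this sketch.
fn parse_log_filter(spec: &str) -> Vec<(String, Option<String>)> {
    spec.split(',')
        .map(str::trim)
        .filter(|directive| !directive.is_empty())
        .map(|directive| match directive.split_once('=') {
            Some((module, level)) => (module.to_string(), Some(level.to_string())),
            None => (directive.to_string(), None),
        })
        .collect()
}
```

For the filter shown in the command above, this yields two pairs: `solana::streamer` with `debug` and `solana::server` with `info`.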

Benchmarking
---

@ -59,3 +160,33 @@ Run the benchmarks:

```bash
$ cargo +nightly bench --features="unstable"
```

To run the benchmarks on Linux with GPU optimizations enabled:

```bash
$ wget https://solana-build-artifacts.s3.amazonaws.com/v0.5.0/libcuda_verify_ed25519.a
$ cargo +nightly bench --features="unstable,cuda"
```

Code coverage
---

To generate code coverage statistics, run kcov via Docker:

```bash
$ ./ci/coverage.sh
```
The coverage report will be written to `./target/cov/index.html`.


Why coverage? While most see coverage as a code quality metric, we see it primarily as a developer
productivity metric. When a developer makes a change to the codebase, presumably it's a *solution* to
some problem. Our unit-test suite is how we encode the set of *problems* the codebase solves. Running
the test suite should indicate that your change didn't *infringe* on anyone else's solutions. Adding a
test *protects* your solution from future changes. If you don't understand why a line of code exists,
try deleting it and running the unit tests. The nearest test failure should tell you what problem
was solved by that code. If no test fails, go ahead and submit a Pull Request that asks, "what
problem is solved by this code?" On the other hand, if a test does fail and you can think of a
better way to solve the same problem, a Pull Request with your solution would most certainly be
welcome! Likewise, if rewriting a test can better communicate what code it's protecting, please
send us that patch!

1

_config.yml

Normal file

@ -0,0 +1 @@

theme: jekyll-theme-slate

15

build.rs

Normal file

@ -0,0 +1,15 @@

|

||||

use std::env;

|

||||

|

||||

fn main() {

|

||||

println!("cargo:rustc-link-search=native=.");

|

||||

if env::var("CARGO_FEATURE_CUDA").is_ok() {

|

||||

println!("cargo:rustc-link-lib=static=cuda_verify_ed25519");

|

||||

println!("cargo:rustc-link-search=native=/usr/local/cuda/lib64");

|

||||

println!("cargo:rustc-link-lib=dylib=cudart");

|

||||

println!("cargo:rustc-link-lib=dylib=cuda");

|

||||

println!("cargo:rustc-link-lib=dylib=cudadevrt");

|

||||

}

|

||||

if env::var("CARGO_FEATURE_ERASURE").is_ok() {

|

||||

println!("cargo:rustc-link-lib=dylib=Jerasure");

|

||||

}

|

||||

}

|

||||

2

ci/.gitignore

vendored

Normal file

@ -0,0 +1,2 @@

|

||||

/node_modules/

|

||||

/package-lock.json

|

||||

16

ci/buildkite.yml

Normal file

@ -0,0 +1,16 @@

|

||||

steps:

|

||||

- command: "ci/coverage.sh"

|

||||

name: "coverage [public]"

|

||||

- command: "ci/docker-run.sh rust ci/test-stable.sh"

|

||||

name: "stable [public]"

|

||||

- command: "ci/docker-run.sh rustlang/rust:nightly ci/test-nightly.sh || true"

|

||||

name: "nightly - FAILURES IGNORED [public]"

|

||||

- command: "ci/docker-run.sh rust ci/test-ignored.sh"

|

||||

name: "ignored [public]"

|

||||

- command: "ci/test-cuda.sh"

|

||||

name: "cuda"

|

||||

- command: "ci/shellcheck.sh"

|

||||

name: "shellcheck [public]"

|

||||

- wait

|

||||

- command: "ci/publish.sh"

|

||||

name: "publish release artifacts"

|

||||

21

ci/coverage.sh

Executable file

@ -0,0 +1,21 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

ci/docker-run.sh evilmachines/rust-cargo-kcov \

|

||||

bash -exc "\

|

||||

export RUST_BACKTRACE=1; \

|

||||

cargo build --verbose; \

|

||||

cargo kcov --lib --verbose; \

|

||||

"

|

||||

|

||||

echo Coverage report:

|

||||

ls -l target/cov/index.html

|

||||

|

||||

if [[ -z "$CODECOV_TOKEN" ]]; then

|

||||

echo CODECOV_TOKEN undefined

|

||||

else

|

||||

bash <(curl -s https://codecov.io/bash)

|

||||

fi

|

||||

|

||||

exit 0

|

||||

41

ci/docker-run.sh

Executable file

@ -0,0 +1,41 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

usage() {

|

||||

echo "Usage: $0 [docker image name] [command]"

|

||||

echo

|

||||

echo Runs command in the specified docker image with

|

||||

echo a CI-appropriate environment

|

||||

echo

|

||||

}

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

IMAGE="$1"

|

||||

if [[ -z "$IMAGE" ]]; then

|

||||

echo Error: image not defined

|

||||

exit 1

|

||||

fi

|

||||

|

||||

docker pull "$IMAGE"

|

||||

shift

|

||||

|

||||

ARGS=(--workdir /solana --volume "$PWD:/solana" --rm)

|

||||

|

||||

ARGS+=(--env "CARGO_HOME=/solana/.cargo")

|

||||

|

||||

# kcov tries to set the personality of the binary which docker

|

||||

# doesn't allow by default.

|

||||

ARGS+=(--security-opt "seccomp=unconfined")

|

||||

|

||||

# Ensure files are created with the current host uid/gid

|

||||

ARGS+=(--user "$(id -u):$(id -g)")

|

||||

|

||||

# Environment variables to propagate into the container

|

||||

ARGS+=(

|

||||

--env BUILDKITE_TAG

|

||||

--env CODECOV_TOKEN

|

||||

--env CRATES_IO_TOKEN

|

||||

)

|

||||

|

||||

set -x

|

||||

docker run "${ARGS[@]}" "$IMAGE" "$@"

|

||||

19

ci/publish.sh

Executable file

@ -0,0 +1,19 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

if [[ -z "$BUILDKITE_TAG" ]]; then

|

||||

# Skip publish if this is not a tagged release

|

||||

exit 0

|

||||

fi

|

||||

|

||||

if [[ -z "$CRATES_IO_TOKEN" ]]; then

|

||||

echo CRATES_IO_TOKEN undefined

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# TODO: Ensure the published version matches the contents of BUILDKITE_TAG

|

||||

ci/docker-run.sh rust \

|

||||

bash -exc "cargo package; cargo publish --token $CRATES_IO_TOKEN"

|

||||

|

||||

exit 0

|

||||

19

ci/run-local.sh

Executable file

@ -0,0 +1,19 @@

|

||||

#!/bin/bash -e

|

||||

#

|

||||

# Run the entire buildkite CI pipeline locally for pre-testing before sending a

|

||||

# GitHub pull request

|

||||

#

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

BKRUN=ci/node_modules/.bin/bkrun

|

||||

|

||||

if [[ ! -x $BKRUN ]]; then

|

||||

(

|

||||

set -x

|

||||

cd ci/

|

||||

npm install bkrun

|

||||

)

|

||||

fi

|

||||

|

||||

set -x

|

||||

"$BKRUN" ci/buildkite.yml

|

||||

11

ci/shellcheck.sh

Executable file

@ -0,0 +1,11 @@

|

||||

#!/bin/bash -e

|

||||

#

|

||||

# Reference: https://github.com/koalaman/shellcheck/wiki/Directive

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

set -x

|

||||

find . -name "*.sh" -not -regex ".*/.cargo/.*" -not -regex ".*/node_modules/.*" -print0 \

|

||||

| xargs -0 \

|

||||

ci/docker-run.sh koalaman/shellcheck --color=always --external-sources --shell=bash

|

||||

exit 0

|

||||

22

ci/test-cuda.sh

Executable file

@ -0,0 +1,22 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

LIB=libcuda_verify_ed25519.a

|

||||

if [[ ! -r $LIB ]]; then

|

||||

if [[ -z "${libcuda_verify_ed25519_URL:-}" ]]; then

|

||||

echo "$0 skipped. Unable to locate $LIB"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

export LD_LIBRARY_PATH=/usr/local/cuda/lib64

|

||||

export PATH=$PATH:/usr/local/cuda/bin

|

||||

curl -X GET -o $LIB "$libcuda_verify_ed25519_URL"

|

||||

fi

|

||||

|

||||

# shellcheck disable=SC1090 # <-- shellcheck can't follow ~

|

||||

source ~/.cargo/env

|

||||

export RUST_BACKTRACE=1

|

||||

cargo test --features=cuda

|

||||

|

||||

exit 0

|

||||

9

ci/test-ignored.sh

Executable file

@ -0,0 +1,9 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

rustc --version

|

||||

cargo --version

|

||||

|

||||

export RUST_BACKTRACE=1

|

||||

cargo test -- --ignored

|

||||

14

ci/test-nightly.sh

Executable file

@ -0,0 +1,14 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

rustc --version

|

||||

cargo --version

|

||||

|

||||

export RUST_BACKTRACE=1

|

||||

rustup component add rustfmt-preview

|

||||

cargo build --verbose --features unstable

|

||||

cargo test --verbose --features unstable

|

||||

cargo bench --verbose --features unstable

|

||||

|

||||

exit 0

|

||||

14

ci/test-stable.sh

Executable file

@ -0,0 +1,14 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

cd "$(dirname "$0")/.."

|

||||

|

||||

rustc --version

|

||||

cargo --version

|

||||

|

||||

export RUST_BACKTRACE=1

|

||||

rustup component add rustfmt-preview

|

||||

cargo fmt -- --write-mode=diff

|

||||

cargo build --verbose

|

||||

cargo test --verbose

|

||||

|

||||

exit 0

|

||||

15

doc/consensus.msc

Normal file

@ -0,0 +1,15 @@

|

||||

msc {

|

||||

client,leader,verifier_a,verifier_b,verifier_c;

|

||||

|

||||

client=>leader [ label = "SUBMIT" ] ;

|

||||

leader=>client [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_a [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_b [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_c [ label = "CONFIRMED" ] ;

|

||||

verifier_a=>leader [ label = "VERIFIED" ] ;

|

||||

verifier_b=>leader [ label = "VERIFIED" ] ;

|

||||

leader=>client [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_a [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_b [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_c [ label = "FINALIZED" ] ;

|

||||

}

|

||||

65

doc/historian.md

Normal file

@ -0,0 +1,65 @@

|

||||

The Historian

|

||||

===

|

||||

|

||||

Create a *Historian* and send it *events* to generate an *event log*, where each *entry*

|

||||

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

||||

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

||||

|

||||

|

||||

|

||||

```rust

|

||||

extern crate solana;

|

||||

|

||||

use solana::historian::Historian;

|

||||

use solana::ledger::{Block, Entry, Hash};

|

||||

use solana::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||

use std::thread::sleep;

|

||||

use std::time::Duration;

|

||||

use std::sync::mpsc::SendError;

|

||||

|

||||

fn create_ledger(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

sleep(Duration::from_millis(15));

|

||||

let tokens = 42;

|

||||

let keypair = generate_keypair();

|

||||

let event0 = Event::new_claim(get_pubkey(&keypair), tokens, sign_claim_data(&tokens, &keypair));

|

||||

hist.sender.send(event0)?;

|

||||

sleep(Duration::from_millis(10));

|

||||

Ok(())

|

||||

}

|

||||

|

||||

fn main() {

|

||||

let seed = Hash::default();

|

||||

let hist = Historian::new(&seed, Some(10));

|

||||

create_ledger(&hist).expect("send error");

|

||||

drop(hist.sender);

|

||||

let entries: Vec<Entry<Hash>> = hist.receiver.iter().collect();

|

||||

for entry in &entries {

|

||||

println!("{:?}", entry);

|

||||

}

|

||||

// Proof-of-History: Verify the historian learned about the events

|

||||

// in the same order they appear in the vector.

|

||||

assert!(entries[..].verify(&seed));

|

||||

}

|

||||

```

|

||||

|

||||

Running the program should produce a ledger similar to:

|

||||

|

||||

```rust

|

||||

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { tokens: 42 } }

|

||||

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

||||

```

|

||||

|

||||

Proof-of-History

|

||||

---

|

||||

|

||||

Take note of the last line:

|

||||

|

||||

```rust

|

||||

assert!(entries[..].verify(&seed));

|

||||

```

|

||||

|

||||

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the

|

||||

historian, we can verify that `id` is the result of applying a sha256 hash to the previous `id`

|

||||

exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is

|

||||

included in the hash, the events cannot be reordered without regenerating all the hashes.
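
The verification rule described above can be sketched with the standard library alone. This is a simplified illustration, not the crate's implementation: the `Entry` struct, `next_id`, `record`, `verify`, and `make_ledger` below are hypothetical names, ids are `u64` values rather than sha256 digests, and `DefaultHasher` stands in for sha256 purely so the example has no dependencies (it is not cryptographically secure).

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in for sha256 (assumption): `DefaultHasher` is NOT cryptographically
/// secure and is used only so this sketch needs nothing beyond `std`.
fn hash_bytes(data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

/// Hypothetical, simplified entry: `event` is `None` for a Tick.
#[derive(Clone, Debug, PartialEq)]
struct Entry {
    num_hashes: u64,
    id: u64,
    event: Option<Vec<u8>>,
}

/// Hash `start` exactly `num_hashes` times, then mix in the event data, if any.
fn next_id(start: u64, num_hashes: u64, event: &Option<Vec<u8>>) -> u64 {
    let mut id = start;
    for _ in 0..num_hashes {
        id = hash_bytes(&id.to_le_bytes());
    }
    match event {
        Some(data) => {
            let mut buf = id.to_le_bytes().to_vec();
            buf.extend_from_slice(data);
            hash_bytes(&buf)
        }
        None => id,
    }
}

/// Build the entry that follows `prev_id` in the log.
fn record(prev_id: u64, num_hashes: u64, event: Option<Vec<u8>>) -> Entry {
    let id = next_id(prev_id, num_hashes, &event);
    Entry { num_hashes, id, event }
}

/// Check that every entry's id can be regenerated from the previous id.
fn verify(entries: &[Entry], seed: u64) -> bool {
    let mut prev = seed;
    entries.iter().all(|e| {
        let ok = next_id(prev, e.num_hashes, &e.event) == e.id;
        prev = e.id;
        ok
    })
}

/// A three-entry ledger shaped like the sample output: Tick, event, Tick.
fn make_ledger(seed: u64) -> Vec<Entry> {
    let e0 = record(seed, 0, None);
    let e1 = record(e0.id, 3, Some(b"tokens: 42".to_vec()));
    let e2 = record(e1.id, 3, None);
    vec![e0, e1, e2]
}

fn main() {
    let seed = 0;
    let entries = make_ledger(seed);
    println!("valid ledger verifies: {}", verify(&entries, seed));

    // Swapping two entries breaks the chain unless every hash is regenerated.
    let mut reordered = entries.clone();
    reordered.swap(1, 2);
    println!("reordered ledger verifies: {}", verify(&reordered, seed));
}
```

Because each id depends on the previous id, checking a log costs the same number of hash operations as producing it, and a reordered log fails at the first entry whose predecessor changed.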

|

||||

18

doc/historian.msc

Normal file

18

doc/historian.msc

Normal file

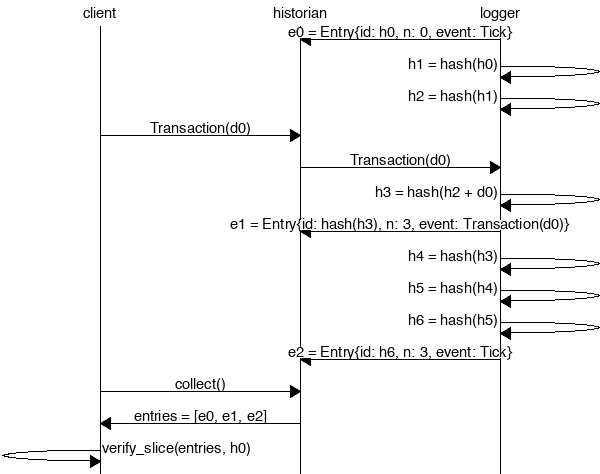

@ -0,0 +1,18 @@

|

||||

msc {

|

||||

client,historian,recorder;

|

||||

|

||||

recorder=>historian [ label = "e0 = Entry{id: h0, n: 0, event: Tick}" ] ;

|

||||

recorder=>recorder [ label = "h1 = hash(h0)" ] ;

|

||||

recorder=>recorder [ label = "h2 = hash(h1)" ] ;

|

||||

client=>historian [ label = "Transaction(d0)" ] ;

|

||||

historian=>recorder [ label = "Transaction(d0)" ] ;

|

||||

recorder=>recorder [ label = "h3 = hash(h2 + d0)" ] ;

|

||||

recorder=>historian [ label = "e1 = Entry{id: hash(h3), n: 3, event: Transaction(d0)}" ] ;

|

||||

recorder=>recorder [ label = "h4 = hash(h3)" ] ;

|

||||

recorder=>recorder [ label = "h5 = hash(h4)" ] ;

|

||||

recorder=>recorder [ label = "h6 = hash(h5)" ] ;

|

||||

recorder=>historian [ label = "e2 = Entry{id: h6, n: 3, event: Tick}" ] ;

|

||||

client=>historian [ label = "collect()" ] ;

|

||||

historian=>client [ label = "entries = [e0, e1, e2]" ] ;

|

||||

client=>client [ label = "entries.verify(h0)" ] ;

|

||||

}

|

||||

16

multinode-demo/client.sh

Executable file

@ -0,0 +1,16 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

if [[ -z "$1" ]]; then

|

||||

echo "usage: $0 [leader machine]"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

LEADER="$1"

|

||||

|

||||

set -x

|

||||

export RUST_LOG=solana=info

|

||||

rsync -v -e ssh "$LEADER:~/solana/leader.json" .

|

||||

rsync -v -e ssh "$LEADER:~/solana/mint-demo.json" .

|

||||

|

||||

cargo run --release --bin solana-client-demo -- \

|

||||

-l leader.json -c 8100 -n 1 < mint-demo.json

|

||||

4

multinode-demo/leader.sh

Executable file

@ -0,0 +1,4 @@

|

||||

#!/bin/bash

|

||||

export RUST_LOG=solana=info

|

||||

sudo sysctl -w net.core.rmem_max=26214400

|

||||

cat genesis.log leader.log | cargo run --release --features cuda --bin solana-fullnode -- -s leader.json -l leader.json -b 8000 -d 2>&1 | tee leader-tee.log

|

||||

24

multinode-demo/validator.sh

Executable file

@ -0,0 +1,24 @@

|

||||

#!/bin/bash -e

|

||||

|

||||

if [[ -z "$1" ]]; then

|

||||

echo "usage: $0 [leader machine]"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

LEADER="$1"

|

||||

|

||||

set -x

|

||||

|

||||

rsync -v -e ssh "$LEADER:~/solana/mint-demo.json" .

|

||||

rsync -v -e ssh "$LEADER:~/solana/leader.json" .

|

||||

rsync -v -e ssh "$LEADER:~/solana/genesis.log" .

|

||||

rsync -v -e ssh "$LEADER:~/solana/leader.log" .

|

||||

rsync -v -e ssh "$LEADER:~/solana/libcuda_verify_ed25519.a" .

|

||||

|

||||

export RUST_LOG=solana=info

|

||||

|

||||

sudo sysctl -w net.core.rmem_max=26214400

|

||||

|

||||

cat genesis.log leader.log | \

|

||||

cargo run --release --features cuda --bin solana-fullnode -- \

|

||||

-l validator.json -s validator.json -v leader.json -b 9000 -d 2>&1 | tee validator-tee.log

|

||||

648

src/bank.rs

Normal file

@ -0,0 +1,648 @@

|

||||

//! The `bank` module tracks client balances, and the progress of pending

|

||||

//! transactions. It offers a high-level public API that signs transactions

|

||||

//! on behalf of the caller, and a private low-level API for when they have

|

||||

//! already been signed and verified.

|

||||

|

||||

extern crate libc;

|

||||

|

||||

use chrono::prelude::*;

|

||||

use entry::Entry;

|

||||

use hash::Hash;

|

||||

use mint::Mint;

|

||||

use payment_plan::{Payment, PaymentPlan, Witness};

|

||||

use rayon::prelude::*;

|

||||

use signature::{KeyPair, PublicKey, Signature};

|

||||

use std::collections::hash_map::Entry::Occupied;

|

||||

use std::collections::{HashMap, HashSet, VecDeque};

|

||||

use std::result;

|

||||

use std::sync::atomic::{AtomicIsize, AtomicUsize, Ordering};

|

||||

use std::sync::RwLock;

|

||||

use transaction::{Instruction, Plan, Transaction};

|

||||

|

||||

pub const MAX_ENTRY_IDS: usize = 1024 * 4;

|

||||

|

||||

#[derive(Debug, PartialEq, Eq)]

|

||||

pub enum BankError {

|

||||

AccountNotFound(PublicKey),

|

||||

InsufficientFunds(PublicKey),

|

||||

DuplicateSiganture(Signature),

|

||||

LastIdNotFound(Hash),

|

||||

NegativeTokens,

|

||||

}

|

||||

|

||||

pub type Result<T> = result::Result<T, BankError>;

|

||||

|

||||

pub struct Bank {

|

||||

balances: RwLock<HashMap<PublicKey, AtomicIsize>>,

|

||||

pending: RwLock<HashMap<Signature, Plan>>,

|

||||

last_ids: RwLock<VecDeque<(Hash, RwLock<HashSet<Signature>>)>>,

|

||||

time_sources: RwLock<HashSet<PublicKey>>,

|

||||

last_time: RwLock<DateTime<Utc>>,

|

||||

transaction_count: AtomicUsize,

|

||||

}

|

||||

|

||||

impl Bank {

|

||||

/// Create a Bank using a deposit.

|

||||

pub fn new_from_deposit(deposit: &Payment) -> Self {

|

||||

let bank = Bank {

|

||||

balances: RwLock::new(HashMap::new()),

|

||||

pending: RwLock::new(HashMap::new()),

|

||||

last_ids: RwLock::new(VecDeque::new()),

|

||||

time_sources: RwLock::new(HashSet::new()),

|

||||

last_time: RwLock::new(Utc.timestamp(0, 0)),

|

||||

transaction_count: AtomicUsize::new(0),

|

||||

};

|

||||

bank.apply_payment(deposit);

|

||||

bank

|

||||

}

|

||||

|

||||

/// Create a Bank with only a Mint. Typically used by unit tests.

|

||||

pub fn new(mint: &Mint) -> Self {

|

||||

let deposit = Payment {

|

||||

to: mint.pubkey(),

|

||||

tokens: mint.tokens,

|

||||

};

|

||||

let bank = Self::new_from_deposit(&deposit);

|

||||

bank.register_entry_id(&mint.last_id());

|

||||

bank

|

||||

}

|

||||

|

||||

/// Commit funds to the 'to' party.

|

||||

fn apply_payment(&self, payment: &Payment) {

|

||||

// First we check balances with a read lock to maximize potential parallelization.

|

||||

if self.balances

|

||||

.read()

|

||||

.expect("'balances' read lock in apply_payment")

|

||||

.contains_key(&payment.to)

|

||||

{

|

||||

let bals = self.balances.read().expect("'balances' read lock");

|

||||

bals[&payment.to].fetch_add(payment.tokens as isize, Ordering::Relaxed);

|

||||

} else {

|

||||

// Now we know the key wasn't present a nanosecond ago, but it might be there

|

||||

// by the time we acquire a write lock, so we'll have to check again.

|

||||

let mut bals = self.balances.write().expect("'balances' write lock");

|

||||

if bals.contains_key(&payment.to) {

|

||||

bals[&payment.to].fetch_add(payment.tokens as isize, Ordering::Relaxed);

|

||||

} else {

|

||||

bals.insert(payment.to, AtomicIsize::new(payment.tokens as isize));

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/// Return the last entry ID registered

|

||||

pub fn last_id(&self) -> Hash {

|

||||

let last_ids = self.last_ids.read().expect("'last_ids' read lock");

|

||||

let last_item = last_ids.iter().last().expect("empty 'last_ids' list");

|

||||

last_item.0

|

||||

}

|

||||

|

||||

fn reserve_signature(signatures: &RwLock<HashSet<Signature>>, sig: &Signature) -> Result<()> {

|

||||

if signatures

|

||||

.read()

|

||||

.expect("'signatures' read lock")

|

||||

.contains(sig)

|

||||

{

|

||||

return Err(BankError::DuplicateSiganture(*sig));

|

||||

}

|

||||

signatures

|

||||

.write()

|

||||

.expect("'signatures' write lock")

|

||||

.insert(*sig);

|

||||

Ok(())

|

||||

}

|

||||

|

||||

fn forget_signature(signatures: &RwLock<HashSet<Signature>>, sig: &Signature) {

|

||||

signatures

|

||||

.write()

|

||||

.expect("'signatures' write lock in forget_signature")

|

||||

.remove(sig);

|

||||

}

|

||||

|

||||

fn forget_signature_with_last_id(&self, sig: &Signature, last_id: &Hash) {

|

||||

if let Some(entry) = self.last_ids

|

||||

.read()

|

||||

.expect("'last_ids' read lock in forget_signature_with_last_id")

|

||||

.iter()

|

||||

.rev()

|

||||

.find(|x| x.0 == *last_id)

|

||||

{

|

||||

Self::forget_signature(&entry.1, sig);

|

||||

}

|

||||

}

|

||||

|

||||

fn reserve_signature_with_last_id(&self, sig: &Signature, last_id: &Hash) -> Result<()> {

|

||||

if let Some(entry) = self.last_ids

|

||||

.read()

|

||||

.expect("'last_ids' read lock in reserve_signature_with_last_id")

|

||||

.iter()

|

||||

.rev()

|

||||

.find(|x| x.0 == *last_id)

|

||||

{

|

||||

return Self::reserve_signature(&entry.1, sig);

|

||||

}

|

||||

Err(BankError::LastIdNotFound(*last_id))

|

||||

}

|

||||

|

||||

/// Tell the bank which Entry IDs exist on the ledger. This function

|

||||

/// assumes subsequent calls correspond to later entries, and will boot

|

||||

/// the oldest ones once its internal cache is full. Once booted, the

|

||||

/// bank will reject transactions using that `last_id`.

|

||||

pub fn register_entry_id(&self, last_id: &Hash) {

|

||||

let mut last_ids = self.last_ids

|

||||

.write()

|

||||

.expect("'last_ids' write lock in register_entry_id");

|

||||

if last_ids.len() >= MAX_ENTRY_IDS {

|

||||

last_ids.pop_front();

|

||||

}

|

||||

last_ids.push_back((*last_id, RwLock::new(HashSet::new())));

|

||||

}

|

||||

|

||||

/// Deduct tokens from the 'from' address if the account has sufficient

|

||||

/// funds and isn't a duplicate.

|

||||

fn apply_debits(&self, tx: &Transaction) -> Result<()> {

|

||||

if let Instruction::NewContract(contract) = &tx.instruction {

|

||||

trace!("Transaction {}", contract.tokens);

|

||||

if contract.tokens < 0 {

|

||||

return Err(BankError::NegativeTokens);

|

||||

}

|

||||

}

|

||||

let bals = self.balances

|

||||

.read()

|

||||

.expect("'balances' read lock in apply_debits");

|

||||

let option = bals.get(&tx.from);

|

||||

|

||||

if option.is_none() {

|

||||

return Err(BankError::AccountNotFound(tx.from));

|

||||

}

|

||||

|

||||

self.reserve_signature_with_last_id(&tx.sig, &tx.last_id)?;

|

||||

|

||||

loop {

|

||||

let result = if let Instruction::NewContract(contract) = &tx.instruction {

|

||||

let bal = option.expect("assignment of option to bal");

|

||||

let current = bal.load(Ordering::Relaxed) as i64;

|

||||

|

||||

if current < contract.tokens {

|

||||

self.forget_signature_with_last_id(&tx.sig, &tx.last_id);

|

||||

return Err(BankError::InsufficientFunds(tx.from));

|

||||

}

|

||||

|

||||

bal.compare_exchange(

|

||||

current as isize,

|

||||

(current - contract.tokens) as isize,

|

||||

Ordering::Relaxed,

|

||||

Ordering::Relaxed,

|

||||

)

|

||||

} else {

|

||||

Ok(0)

|

||||

};

|

||||

|

||||

match result {

|

||||

Ok(_) => {

|

||||

self.transaction_count.fetch_add(1, Ordering::Relaxed);

|

||||

return Ok(());

|

||||

}

|

||||

Err(_) => continue,

|

||||

};

|

||||

}

|

||||

}

|

||||

|

||||

fn apply_credits(&self, tx: &Transaction) {

|

||||

match &tx.instruction {

|

||||

Instruction::NewContract(contract) => {

|

||||

let mut plan = contract.plan.clone();

|

||||

plan.apply_witness(&Witness::Timestamp(*self.last_time

|

||||

.read()

|

||||

.expect("timestamp creation in apply_credits")));

|

||||

|

||||

if let Some(ref payment) = plan.final_payment() {

|

||||

self.apply_payment(payment);

|

||||

} else {

|

||||

let mut pending = self.pending

|

||||

.write()

|

||||

.expect("'pending' write lock in apply_credits");

|

||||

pending.insert(tx.sig, plan);

|

||||

}

|

||||

}

|

||||

Instruction::ApplyTimestamp(dt) => {

|

||||

let _ = self.apply_timestamp(tx.from, *dt);

|

||||

}

|

||||

Instruction::ApplySignature(tx_sig) => {

|

||||

let _ = self.apply_signature(tx.from, *tx_sig);

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/// Process a Transaction.

|

||||

fn process_transaction(&self, tx: &Transaction) -> Result<()> {

|

||||

self.apply_debits(tx)?;

|

||||

self.apply_credits(tx);

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a batch of transactions.

|

||||

pub fn process_transactions(&self, txs: Vec<Transaction>) -> Vec<Result<Transaction>> {

|

||||

// Run all debits first to filter out any transactions that can't be processed

|

||||

// in parallel deterministically.

|

||||

info!("processing Transactions {}", txs.len());

|

||||

let results: Vec<_> = txs.into_par_iter()

|

||||

.map(|tx| self.apply_debits(&tx).map(|_| tx))

|

||||

.collect(); // Calling collect() here forces all debits to complete before moving on.

|

||||

|

||||

results

|

||||

.into_par_iter()

|

||||

.map(|result| {

|

||||

result.map(|tx| {

|

||||

self.apply_credits(&tx);

|

||||

tx

|

||||

})

|

||||

})

|

||||

.collect()

|

||||

}

|

||||

|

||||

pub fn process_entries(&self, entries: Vec<Entry>) -> Result<()> {

|

||||

for entry in entries {

|

||||

self.register_entry_id(&entry.id);

|

||||

for result in self.process_transactions(entry.transactions) {

|

||||

result?;

|

||||

}

|

||||

}

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Signature.

|

||||

fn apply_signature(&self, from: PublicKey, tx_sig: Signature) -> Result<()> {

|

||||

if let Occupied(mut e) = self.pending

|

||||

.write()

|

||||

.expect("write() in apply_signature")

|

||||

.entry(tx_sig)

|

||||

{

|

||||

e.get_mut().apply_witness(&Witness::Signature(from));

|

||||

if let Some(payment) = e.get().final_payment() {

|

||||

self.apply_payment(&payment);

|

||||

e.remove_entry();

|

||||

}

|

||||

};

|

||||

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Timestamp.

|

||||

fn apply_timestamp(&self, from: PublicKey, dt: DateTime<Utc>) -> Result<()> {

|

||||

// If this is the first timestamp we've seen, it probably came from the genesis block,

|

||||

// so we'll trust it.

|

||||

if *self.last_time

|

||||

.read()

|

||||

.expect("'last_time' read lock on first timestamp check")

|

||||

== Utc.timestamp(0, 0)

|

||||

{

|

||||

self.time_sources

|

||||

.write()

|

||||

.expect("'time_sources' write lock on first timestamp")

|

||||

.insert(from);

|

||||

}

|

||||

|

||||

if self.time_sources

|

||||

.read()

|

||||

.expect("'time_sources' read lock")

|

||||

.contains(&from)

|

||||

{

|

||||

if dt > *self.last_time.read().expect("'last_time' read lock") {

|

||||

*self.last_time.write().expect("'last_time' write lock") = dt;

|

||||

}

|

||||

} else {

|

||||

return Ok(());

|

||||

}

|

||||

|

||||

// Check to see if any timelocked transactions can be completed.

|

||||

let mut completed = vec![];

|

||||

|

||||

// Hold 'pending' write lock until the end of this function. Otherwise another thread can

|

||||