Compare commits

13 Commits

| SHA1 |
|---|
| afb830c91f |
| c1326ac3d5 |
| 513a1adf57 |
| 7871b38c80 |
| b34d2d7dee |
| d7dfa8c22d |
| 8df274f0af |
| 07c4ebb7f2 |
| 49605b257d |
| fa4e232d73 |
| bd84cf6586 |
| 6e37f70d55 |
| d97112d7f0 |

@@ -1,7 +1,7 @@
[package]
name = "silk"
description = "A silky smooth implementation of the Loom architecture"
version = "0.2.1"
version = "0.2.2"
documentation = "https://docs.rs/silk"
homepage = "http://loomprotocol.com/"
repository = "https://github.com/loomprotocol/silk"

@@ -24,7 +24,8 @@ asm = ["sha2-asm"]

[dependencies]
rayon = "1.0.0"
itertools = "0.7.6"
sha2 = "0.7.0"
sha2-asm = {version="0.3", optional=true}
digest = "0.7.2"
generic-array = { version = "0.9.0", default-features = false, features = ["serde"] }
serde = "1.0.27"
serde_derive = "1.0.27"

37

README.md


@ -11,7 +11,7 @@ in two git repositories. Reserach is performed in the loom repository. That work

|

||||

Loom specification forward. This repository, on the other hand, aims to implement the specification

|

||||

as-is. We care a great deal about quality, clarity and short learning curve. We avoid the use

|

||||

of `unsafe` Rust and write tests for *everything*. Optimizations are only added when

|

||||

corresponding benchmarks are also added that demonstrate real performance boots. We expect the

|

||||

corresponding benchmarks are also added that demonstrate real performance boosts. We expect the

|

||||

feature set here will always be a ways behind the loom repo, but that this is an implementation

|

||||

you can take to the bank, literally.

|

||||

|

||||

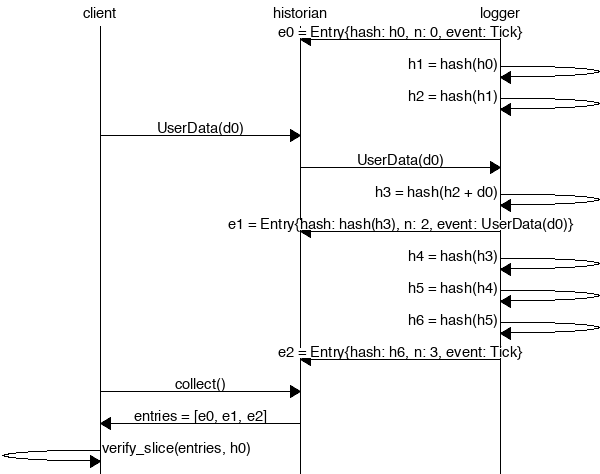

@@ -24,32 +24,36 @@ Create a *Historian* and send it *events* to generate an *event log*, where each
is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered
with by verifying each entry's hash can be generated from the hash in the previous entry:

```rust
extern crate silk;

use silk::historian::Historian;
use silk::log::{verify_slice, Entry, Event, Sha256Hash};
use std::{thread, time};
use std::thread::sleep;
use std::time::Duration;
use std::sync::mpsc::SendError;

fn create_log(hist: &Historian) -> Result<(), SendError<Event>> {
    hist.sender.send(Event::Tick)?;
    thread::sleep(time::Duration::new(0, 100_000));
    hist.sender.send(Event::UserDataKey(0xdeadbeef))?;
    thread::sleep(time::Duration::new(0, 100_000));
    hist.sender.send(Event::Tick)?;
    sleep(Duration::from_millis(15));
    hist.sender.send(Event::UserDataKey(Sha256Hash::default()))?;
    sleep(Duration::from_millis(10));
    Ok(())
}

fn main() {
    let seed = Sha256Hash::default();
    let hist = Historian::new(&seed);
    let hist = Historian::new(&seed, Some(10));
    create_log(&hist).expect("send error");
    drop(hist.sender);
    let entries: Vec<Entry> = hist.receiver.iter().collect();
    for entry in &entries {
        println!("{:?}", entry);
    }

    // Proof-of-History: Verify the historian learned about the events
    // in the same order they appear in the vector.
    assert!(verify_slice(&entries, &seed));
}
```

@@ -58,10 +62,23 @@ Running the program should produce a log similar to:

```rust
Entry { num_hashes: 0, end_hash: [0, ...], event: Tick }
Entry { num_hashes: 6, end_hash: [67, ...], event: UserDataKey(3735928559) }
Entry { num_hashes: 5, end_hash: [123, ...], event: Tick }
Entry { num_hashes: 2, end_hash: [67, ...], event: UserDataKey(3735928559) }
Entry { num_hashes: 3, end_hash: [123, ...], event: Tick }
```

Proof-of-History
---

Take note of the last line:

```rust
assert!(verify_slice(&entries, &seed));
```

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the
historian, we can verify that `end_hash` is the result of applying a sha256 hash to the previous `end_hash`
exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is
included in the hash, the events cannot be reordered without regenerating all the hashes.
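
To make that rule concrete, here is a minimal sketch of sequential verification. It is not silk's implementation: so that it runs with only the standard library, it substitutes `std::collections::hash_map::DefaultHasher` (a non-cryptographic 64-bit hash) for SHA-256, and its `Event`, `Entry`, `next_hash`, `next_entry`, and `verify_slice` are simplified, hypothetical stand-ins for the crate's items of the same names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Stand-in for SHA-256 (assumption): DefaultHasher is NOT cryptographically
// secure; it is used only so this sketch needs no external crates.
fn hash(data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

#[derive(Clone, Debug, PartialEq)]
enum Event {
    Tick,
    UserData(Vec<u8>),
}

#[derive(Clone, Debug)]
struct Entry {
    num_hashes: u64,
    end_hash: u64,
    event: Event,
}

/// Hash `start` exactly `num_hashes` times, then hash the event data (if any)
/// on top of the result.
fn next_hash(start: u64, num_hashes: u64, event: &Event) -> u64 {
    let mut end_hash = start;
    for _ in 0..num_hashes {
        end_hash = hash(&end_hash.to_le_bytes());
    }
    match event {
        Event::Tick => end_hash,
        Event::UserData(data) => {
            // Extend the running hash with the event data and hash once more.
            let mut buf = end_hash.to_le_bytes().to_vec();
            buf.extend_from_slice(data);
            hash(&buf)
        }
    }
}

fn next_entry(start: u64, num_hashes: u64, event: Event) -> Entry {
    let end_hash = next_hash(start, num_hashes, &event);
    Entry { num_hashes, end_hash, event }
}

/// Check every entry against the `end_hash` of the entry before it
/// (or `seed` for the first entry).
fn verify_slice(entries: &[Entry], seed: u64) -> bool {
    let mut prev = seed;
    for entry in entries {
        if next_hash(prev, entry.num_hashes, &entry.event) != entry.end_hash {
            return false;
        }
        prev = entry.end_hash;
    }
    true
}
```

Building entries with `next_entry`, each seeded by the previous `end_hash`, makes `verify_slice` return `true`; swapping the events of two entries (the attack exercised by `test_reorder_attack`) makes the re-derived hash disagree with the stored `end_hash`, so it returns `false`.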

# Developing

18

diagrams/historian.msc

Normal file


@@ -0,0 +1,18 @@
msc {
  client,historian,logger;

  logger=>historian [ label = "e0 = Entry{hash: h0, n: 0, event: Tick}" ] ;
  logger=>logger [ label = "h1 = hash(h0)" ] ;
  logger=>logger [ label = "h2 = hash(h1)" ] ;
  client=>historian [ label = "UserData(d0)" ] ;
  historian=>logger [ label = "UserData(d0)" ] ;
  logger=>logger [ label = "h3 = hash(h2 + d0)" ] ;
  logger=>historian [ label = "e1 = Entry{hash: hash(h3), n: 2, event: UserData(d0)}" ] ;
  logger=>logger [ label = "h4 = hash(h3)" ] ;
  logger=>logger [ label = "h5 = hash(h4)" ] ;
  logger=>logger [ label = "h6 = hash(h5)" ] ;
  logger=>historian [ label = "e2 = Entry{hash: h6, n: 3, event: Tick}" ] ;
  client=>historian [ label = "collect()" ] ;
  historian=>client [ label = "entries = [e0, e1, e2]" ] ;
  client=>client [ label = "verify_slice(entries, h0)" ] ;
}
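
The diagram's division of labour (the logger extends a hash chain whenever it is idle and records an entry for each event the historian forwards) can be sketched with threads and `std::sync::mpsc` channels. This is a simplified, hypothetical model, not silk's `create_logger`: it uses `DefaultHasher` instead of SHA-256, carries event data as a plain `u64`, and leaves out ticks and `ExitReason`; `spawn_logger`, `mix`, and `verify` are illustrative names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};
use std::sync::mpsc::{Receiver, Sender, TryRecvError};
use std::thread::{self, JoinHandle};

// Non-cryptographic stand-in for SHA-256 (assumption for this sketch).
fn hash(data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

// Mix event data into the running hash (plays the role of `extend_and_hash`).
fn mix(end_hash: u64, data: u64) -> u64 {
    let mut buf = end_hash.to_le_bytes().to_vec();
    buf.extend_from_slice(&data.to_le_bytes());
    hash(&buf)
}

#[derive(Clone, Debug)]
struct Entry {
    num_hashes: u64,
    end_hash: u64,
    data: u64,
}

/// Spawn a logger thread: while no event is pending it hashes the chain one
/// more step; for each event it emits an Entry and resets the counter. It
/// stops once the event channel is closed and drained, or once the entry
/// receiver has been dropped.
fn spawn_logger(seed: u64, events: Receiver<u64>, entries: Sender<Entry>) -> JoinHandle<()> {
    thread::spawn(move || {
        let mut end_hash = seed;
        let mut num_hashes = 0;
        loop {
            match events.try_recv() {
                Ok(data) => {
                    end_hash = mix(end_hash, data);
                    let entry = Entry { num_hashes, end_hash, data };
                    if entries.send(entry).is_err() {
                        return; // nobody is listening any more
                    }
                    num_hashes = 0;
                }
                Err(TryRecvError::Empty) => {
                    end_hash = hash(&end_hash.to_le_bytes());
                    num_hashes += 1;
                }
                Err(TryRecvError::Disconnected) => return,
            }
        }
    })
}

/// Replay the chain: each entry must follow from the previous `end_hash`.
fn verify(entries: &[Entry], seed: u64) -> bool {
    let mut prev = seed;
    for e in entries {
        let mut h = prev;
        for _ in 0..e.num_hashes {
            h = hash(&h.to_le_bytes());
        }
        if mix(h, e.data) != e.end_hash {
            return false;
        }
        prev = e.end_hash;
    }
    true
}
```

Sending a few values, dropping the event sender, and joining the thread yields one entry per value, in send order. The `num_hashes` values depend on thread scheduling, but `verify` accepts the chain regardless, because it replays exactly the recorded number of hashes.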

@@ -2,21 +2,20 @@ extern crate silk;

use silk::historian::Historian;
use silk::log::{verify_slice, Entry, Event, Sha256Hash};
use std::{thread, time};
use std::thread::sleep;
use std::time::Duration;
use std::sync::mpsc::SendError;

fn create_log(hist: &Historian) -> Result<(), SendError<Event>> {
    hist.sender.send(Event::Tick)?;
    thread::sleep(time::Duration::new(0, 100_000));
    hist.sender.send(Event::UserDataKey(0xdeadbeef))?;
    thread::sleep(time::Duration::new(0, 100_000));
    hist.sender.send(Event::Tick)?;
    sleep(Duration::from_millis(15));
    hist.sender.send(Event::UserDataKey(Sha256Hash::default()))?;
    sleep(Duration::from_millis(10));
    Ok(())
}

fn main() {
    let seed = Sha256Hash::default();
    let hist = Historian::new(&seed);
    let hist = Historian::new(&seed, Some(10));
    create_log(&hist).expect("send error");
    drop(hist.sender);
    let entries: Vec<Entry> = hist.receiver.iter().collect();

105

src/historian.rs


@@ -7,7 +7,8 @@

use std::thread::JoinHandle;
use std::sync::mpsc::{Receiver, Sender};
use log::{hash, Entry, Event, Sha256Hash};
use std::time::{Duration, SystemTime};
use log::{extend_and_hash, hash, Entry, Event, Sha256Hash};

pub struct Historian {
    pub sender: Sender<Event>,

@@ -20,35 +21,56 @@ pub enum ExitReason {
    RecvDisconnected,
    SendDisconnected,
}
fn log_event(
    sender: &Sender<Entry>,
    num_hashes: &mut u64,
    end_hash: &mut Sha256Hash,
    event: Event,
) -> Result<(), (Entry, ExitReason)> {
    if let Event::UserDataKey(key) = event {
        *end_hash = extend_and_hash(end_hash, &key);
    }
    let entry = Entry {
        end_hash: *end_hash,
        num_hashes: *num_hashes,
        event,
    };
    if let Err(_) = sender.send(entry.clone()) {
        return Err((entry, ExitReason::SendDisconnected));
    }
    *num_hashes = 0;
    Ok(())
}

fn log_events(
    receiver: &Receiver<Event>,
    sender: &Sender<Entry>,
    num_hashes: u64,
    end_hash: Sha256Hash,
) -> Result<u64, (Entry, ExitReason)> {
    num_hashes: &mut u64,
    end_hash: &mut Sha256Hash,
    epoch: SystemTime,
    num_ticks: &mut u64,
    ms_per_tick: Option<u64>,
) -> Result<(), (Entry, ExitReason)> {
    use std::sync::mpsc::TryRecvError;
    let mut num_hashes = num_hashes;
    loop {
        if let Some(ms) = ms_per_tick {
            let now = SystemTime::now();
            if now > epoch + Duration::from_millis((*num_ticks + 1) * ms) {
                log_event(sender, num_hashes, end_hash, Event::Tick)?;
                *num_ticks += 1;
            }
        }
        match receiver.try_recv() {
            Ok(event) => {
                let entry = Entry {
                    end_hash,
                    num_hashes,
                    event,
                };
                if let Err(_) = sender.send(entry.clone()) {
                    return Err((entry, ExitReason::SendDisconnected));
                }
                num_hashes = 0;
                log_event(sender, num_hashes, end_hash, event)?;
            }
            Err(TryRecvError::Empty) => {
                return Ok(num_hashes);
                return Ok(());
            }
            Err(TryRecvError::Disconnected) => {
                let entry = Entry {
                    end_hash,
                    num_hashes,
                    end_hash: *end_hash,
                    num_hashes: *num_hashes,
                    event: Event::Tick,
                };
                return Err((entry, ExitReason::RecvDisconnected));

@@ -61,6 +83,7 @@ fn log_events(
/// sending back Entry messages until either the receiver or sender channel is closed.
pub fn create_logger(
    start_hash: Sha256Hash,
    ms_per_tick: Option<u64>,
    receiver: Receiver<Event>,
    sender: Sender<Entry>,
) -> JoinHandle<(Entry, ExitReason)> {

@@ -68,10 +91,19 @@ pub fn create_logger(
    thread::spawn(move || {
        let mut end_hash = start_hash;
        let mut num_hashes = 0;
        let mut num_ticks = 0;
        let epoch = SystemTime::now();
        loop {
            match log_events(&receiver, &sender, num_hashes, end_hash) {
                Ok(n) => num_hashes = n,
                Err(err) => return err,
            if let Err(err) = log_events(
                &receiver,
                &sender,
                &mut num_hashes,
                &mut end_hash,
                epoch,
                &mut num_ticks,
                ms_per_tick,
            ) {
                return err;
            }
            end_hash = hash(&end_hash);
            num_hashes += 1;

@@ -80,11 +112,11 @@ pub fn create_logger(
}

impl Historian {
    pub fn new(start_hash: &Sha256Hash) -> Self {
    pub fn new(start_hash: &Sha256Hash, ms_per_tick: Option<u64>) -> Self {
        use std::sync::mpsc::channel;
        let (sender, event_receiver) = channel();
        let (entry_sender, receiver) = channel();
        let thread_hdl = create_logger(*start_hash, event_receiver, entry_sender);
        let thread_hdl = create_logger(*start_hash, ms_per_tick, event_receiver, entry_sender);
        Historian {
            sender,
            receiver,

@@ -97,18 +129,17 @@ impl Historian {
mod tests {
    use super::*;
    use log::*;
    use std::thread::sleep;
    use std::time::Duration;

    #[test]
    fn test_historian() {
        use std::thread::sleep;
        use std::time::Duration;

        let zero = Sha256Hash::default();
        let hist = Historian::new(&zero);
        let hist = Historian::new(&zero, None);

        hist.sender.send(Event::Tick).unwrap();
        sleep(Duration::new(0, 1_000_000));
        hist.sender.send(Event::UserDataKey(0xdeadbeef)).unwrap();
        hist.sender.send(Event::UserDataKey(zero)).unwrap();
        sleep(Duration::new(0, 1_000_000));
        hist.sender.send(Event::Tick).unwrap();

@@ -128,7 +159,7 @@ mod tests {
    #[test]
    fn test_historian_closed_sender() {
        let zero = Sha256Hash::default();
        let hist = Historian::new(&zero);
        let hist = Historian::new(&zero, None);
        drop(hist.receiver);
        hist.sender.send(Event::Tick).unwrap();
        assert_eq!(

@@ -136,4 +167,22 @@ mod tests {
            ExitReason::SendDisconnected
        );
    }

    #[test]
    fn test_ticking_historian() {
        let zero = Sha256Hash::default();
        let hist = Historian::new(&zero, Some(20));
        sleep(Duration::from_millis(30));
        hist.sender.send(Event::UserDataKey(zero)).unwrap();
        sleep(Duration::from_millis(15));
        drop(hist.sender);
        assert_eq!(
            hist.thread_hdl.join().unwrap().1,
            ExitReason::RecvDisconnected
        );

        let entries: Vec<Entry> = hist.receiver.iter().collect();
        assert!(entries.len() > 1);
        assert!(verify_slice(&entries, &zero));
    }
}
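
`log_events` decides when to emit a `Tick` by comparing the current time with `epoch + (num_ticks + 1) * ms_per_tick`, so tick deadlines are anchored to the logger's start time rather than to the previous tick. The sketch below isolates that condition as a pure function over elapsed time so it can be tested without a real clock; `tick_due` and `ticks_emitted` are hypothetical helpers, not part of the crate.

```rust
use std::time::Duration;

/// True once `elapsed` (time since the logger's epoch) has passed the
/// deadline of tick number `num_ticks + 1`; mirrors the strict comparison
/// `now > epoch + Duration::from_millis((num_ticks + 1) * ms)`.
fn tick_due(elapsed: Duration, num_ticks: u64, ms_per_tick: u64) -> bool {
    elapsed > Duration::from_millis((num_ticks + 1) * ms_per_tick)
}

/// Simulate a logger that checks the clock at each of `poll_times_ms`
/// (milliseconds since epoch) and, like one pass of `log_events`, emits at
/// most one tick per check. Returns the number of ticks emitted.
fn ticks_emitted(poll_times_ms: &[u64], ms_per_tick: u64) -> u64 {
    let mut num_ticks = 0;
    for &t in poll_times_ms {
        if tick_due(Duration::from_millis(t), num_ticks, ms_per_tick) {
            num_ticks += 1;
        }
    }
    num_ticks
}
```

Because deadlines are measured from the epoch, a late check does not push later deadlines back: with `ms_per_tick = 10`, checks at 15 ms and 21 ms emit two ticks, whereas scheduling each tick relative to the previous one would have put the second deadline at 25 ms.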

@@ -1,7 +1,9 @@
#![cfg_attr(feature = "unstable", feature(test))]
pub mod log;
pub mod historian;
extern crate digest;
extern crate itertools;
extern crate generic_array;
extern crate rayon;
extern crate serde;
#[macro_use]
extern crate serde_derive;
extern crate sha2;

88

src/log.rs


@@ -13,11 +13,11 @@
/// fastest processor. Duration should therefore be estimated by assuming that the hash
/// was generated by the fastest processor at the time the entry was logged.

use digest::generic_array::GenericArray;
use digest::generic_array::typenum::U32;
use generic_array::GenericArray;
use generic_array::typenum::U32;
pub type Sha256Hash = GenericArray<u8, U32>;

#[derive(Debug, PartialEq, Eq, Clone)]
#[derive(Serialize, Deserialize, Debug, PartialEq, Eq, Clone)]
pub struct Entry {
    pub num_hashes: u64,
    pub end_hash: Sha256Hash,

@@ -29,27 +29,27 @@ pub struct Entry {
/// be generated in 'num_hashes' hashes and verified in 'num_hashes' hashes. By logging
/// a hash alongside the tick, each tick can be verified in parallel using the 'end_hash'
/// of the preceding tick to seed its hashing.
#[derive(Debug, PartialEq, Eq, Clone)]
#[derive(Serialize, Deserialize, Debug, PartialEq, Eq, Clone)]
pub enum Event {
    Tick,
    UserDataKey(u64),
    UserDataKey(Sha256Hash),
}

impl Entry {
    /// Creates an Entry from the number of hashes 'num_hashes' since the previous event
    /// and that resulting 'end_hash'.
    pub fn new_tick(num_hashes: u64, end_hash: &Sha256Hash) -> Self {
        let event = Event::Tick;
        Entry {
            num_hashes,
            end_hash: *end_hash,
            event,
            event: Event::Tick,
        }
    }

    /// Verifies self.end_hash is the result of hashing a 'start_hash' 'self.num_hashes' times.
    /// If the event is a UserDataKey, then hash that as well.
    pub fn verify(self: &Self, start_hash: &Sha256Hash) -> bool {
        self.end_hash == next_tick(start_hash, self.num_hashes).end_hash
        self.end_hash == next_hash(start_hash, self.num_hashes, &self.event)
    }
}

@@ -60,13 +60,36 @@ pub fn hash(val: &[u8]) -> Sha256Hash {
    hasher.result()
}

/// Creates the next Tick Entry 'num_hashes' after 'start_hash'.
pub fn next_tick(start_hash: &Sha256Hash, num_hashes: u64) -> Entry {
/// Return the hash of the given hash extended with the given value.
pub fn extend_and_hash(end_hash: &Sha256Hash, val: &[u8]) -> Sha256Hash {
    let mut hash_data = end_hash.to_vec();
    hash_data.extend_from_slice(val);
    hash(&hash_data)
}

pub fn next_hash(start_hash: &Sha256Hash, num_hashes: u64, event: &Event) -> Sha256Hash {
    let mut end_hash = *start_hash;
    for _ in 0..num_hashes {
        end_hash = hash(&end_hash);
    }
    Entry::new_tick(num_hashes, &end_hash)
    if let Event::UserDataKey(key) = *event {
        return extend_and_hash(&end_hash, &key);
    }
    end_hash
}

/// Creates the next Entry 'num_hashes' after 'start_hash', carrying 'event'.
pub fn next_entry(start_hash: &Sha256Hash, num_hashes: u64, event: Event) -> Entry {
    Entry {
        num_hashes,
        end_hash: next_hash(start_hash, num_hashes, &event),
        event,
    }
}

/// Creates the next Tick Entry 'num_hashes' after 'start_hash'.
pub fn next_tick(start_hash: &Sha256Hash, num_hashes: u64) -> Entry {
    next_entry(start_hash, num_hashes, Event::Tick)
}

/// Verifies the hashes and counts of a slice of events are all consistent.

@@ -86,13 +109,16 @@ pub fn verify_slice_seq(events: &[Entry], start_hash: &Sha256Hash) -> bool {

/// Create a vector of Ticks of length 'len' from 'start_hash' hash and 'num_hashes'.
pub fn create_ticks(start_hash: &Sha256Hash, num_hashes: u64, len: usize) -> Vec<Entry> {
    use itertools::unfold;
    let mut events = unfold(*start_hash, |state| {
        let event = next_tick(state, num_hashes);
        *state = event.end_hash;
        return Some(event);
    });
    events.by_ref().take(len).collect()
    use std::iter;
    let mut end_hash = *start_hash;
    iter::repeat(Event::Tick)
        .take(len)
        .map(|event| {
            let entry = next_entry(&end_hash, num_hashes, event);
            end_hash = entry.end_hash;
            entry
        })
        .collect()
}

#[cfg(test)]

@@ -138,6 +164,32 @@ mod tests {
        verify_slice_generic(verify_slice_seq);
    }

    #[test]
    fn test_reorder_attack() {
        let zero = Sha256Hash::default();
        let one = hash(&zero);

        // First, verify UserData events
        let mut end_hash = zero;
        let events = [Event::UserDataKey(zero), Event::UserDataKey(one)];
        let mut entries: Vec<Entry> = events
            .iter()
            .map(|event| {
                let entry = next_entry(&end_hash, 0, event.clone());
                end_hash = entry.end_hash;
                entry
            })
            .collect();
        assert!(verify_slice(&entries, &zero)); // inductive step

        // Next, swap only two UserData events and ensure verification fails.
        let event0 = entries[0].event.clone();
        let event1 = entries[1].event.clone();
        entries[0].event = event1;
        entries[1].event = event0;
        assert!(!verify_slice(&entries, &zero)); // inductive step
    }

}

#[cfg(all(feature = "unstable", test))]
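
The `Event` doc comment in `src/log.rs` notes that, because each tick records its `end_hash`, every tick can be verified independently, seeded by the preceding tick's `end_hash`. A standard-library-only sketch of that idea follows. It assumes Rust 1.63+ for `std::thread::scope`, substitutes `DefaultHasher` for SHA-256, and uses hypothetical `Tick`, `next_tick`, `create_ticks`, and `verify_parallel` definitions rather than the crate's own.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};
use std::thread;

// Non-cryptographic stand-in for SHA-256 (assumption for this sketch).
fn hash(data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

#[derive(Clone, Debug)]
struct Tick {
    num_hashes: u64,
    end_hash: u64,
}

/// Hash `start` `num_hashes` times and record the result.
fn next_tick(start: u64, num_hashes: u64) -> Tick {
    let mut end_hash = start;
    for _ in 0..num_hashes {
        end_hash = hash(&end_hash.to_le_bytes());
    }
    Tick { num_hashes, end_hash }
}

/// Build `len` consecutive ticks, each seeded by the previous tick's end_hash.
fn create_ticks(seed: u64, num_hashes: u64, len: usize) -> Vec<Tick> {
    let mut end_hash = seed;
    (0..len)
        .map(|_| {
            let tick = next_tick(end_hash, num_hashes);
            end_hash = tick.end_hash;
            tick
        })
        .collect()
}

/// Verify ticks in parallel. Tick i only needs the end_hash of tick i - 1
/// (or `seed` for i == 0), so the slice is split into chunks that are
/// re-hashed on separate scoped threads.
fn verify_parallel(ticks: &[Tick], seed: u64, num_threads: usize) -> bool {
    // starts[i] is the hash that seeds tick i.
    let starts: Vec<u64> = std::iter::once(seed)
        .chain(ticks.iter().map(|t| t.end_hash))
        .take(ticks.len())
        .collect();
    let threads = num_threads.max(1);
    let chunk = ((ticks.len() + threads - 1) / threads).max(1);
    thread::scope(|s| {
        let handles: Vec<_> = ticks
            .chunks(chunk)
            .zip(starts.chunks(chunk))
            .map(|(tick_chunk, start_chunk)| {
                s.spawn(move || {
                    tick_chunk
                        .iter()
                        .zip(start_chunk)
                        .all(|(t, &start)| next_tick(start, t.num_hashes).end_hash == t.end_hash)
                })
            })
            .collect();
        // Valid only if every chunk passes; any worker not joined here is
        // joined automatically when the scope ends.
        handles.into_iter().all(|h| h.join().unwrap())
    })
}
```

Splitting into contiguous chunks keeps the bookkeeping simple: each worker receives its ticks plus the matching seed hashes, and no worker has to wait for another, which is the property the doc comment describes.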
