Compare commits

123 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | e683c34a89 | | |
| | 54e4f75081 | | |
| | 9f256f0929 | | |
| | ef169a6652 | | |
| | eaec25f940 | | |
| | 6a87d8975c | | |
| | b8cf5f9427 | | |
| | 2f1e585446 | | |
| | f9309b46aa | | |
| | 22f5985f1b | | |
| | c59c38e50e | | |
| | 232e1bb8a3 | | |
| | 1fbb34620c | | |
| | 89f5b803c9 | | |
| | 55179101cd | | |
| | 132495b1fc | | |
| | a03d7bf5cd | | |
| | 3bf225e85f | | |
| | cc2bb290c4 | | |
| | 878ca8c5c5 | | |
| | 4bc41d81ee | | |
| | f6ca176fc8 | | |
| | 0bec360a31 | | |
| | 04f30710c5 | | |
| | 98c0a2af87 | | |
| | 9db42c1769 | | |
| | 849bced602 | | |
| | 27f29019ef | | |
| | 8642a41f2b | | |
| | bf902ef5bc | | |
| | 7656b55c22 | | |
| | 7d3d4b9443 | | |
| | 15c093c5e2 | | |
| | 116166f62d | | |
| | 26b19dde75 | | |
| | c8ddc68f13 | | |
| | 7c9681007c | | |
| | 13206e4976 | | |
| | 2f18302d32 | | |
| | ddb21d151d | | |
| | c64a9fb456 | | |
| | ee19b4f86e | | |
| | 14239e584f | | |
| | 112aecf6eb | | |
| | c1783d77d7 | | |
| | f089abb3c5 | | |
| | 8e551f5e32 | | |
| | 290960c3b5 | | |
| | 62af09adbe | | |
| | e39c0b34e5 | | |
| | 8ad90807ee | | |
| | 533b3170a7 | | |
| | 7732f3f5fb | | |
| | f52f02a434 | | |
| | 4d7d4d673e | | |
| | 9a437f0d38 | | |
| | c385f8bb6e | | |
| | fa44be2a9d | | |
| | 117ab0c141 | | |
| | 7488d19ae6 | | |
| | 60524ad5f2 | | |
| | fad7ff8bf0 | | |
| | 383d445ba1 | | |
| | 803dcb0800 | | |
| | fde320e2f2 | | |
| | 8ea97141ea | | |
| | 9f232bac58 | | |
| | 8295cc11c0 | | |
| | 70f80adb9a | | |
| | 9a7cac1e07 | | |
| | c584a25ec9 | | |
| | bff32bf7bc | | |
| | d0e7450389 | | |
| | 4da89ac8a9 | | |
| | f7032f7d9a | | |
| | 7c7e3931a0 | | |
| | 6be3d62d89 | | |
| | 6f509a8a1e | | |
| | 4379fabf16 | | |
| | 6b66e1a077 | | |
| | c11a3e0fdc | | |
| | 3418033c55 | | |
| | caa9a846ed | | |
| | 8ee76bcea0 | | |
| | 47325cbe01 | | |
| | e0c8417297 | | |
| | 9238ee9572 | | |
| | 64af37e0cd | | |
| | 9f9b79f30b | | |
| | 265f41887f | | |
| | 4f09e5d04c | | |
| | 434f321336 | | |
| | f4e0d1be58 | | |
| | e5bae0604b | | |
| | e7da083c31 | | |
| | 367c32dabe | | |
| | e054238af6 | | |
| | e8faf6d59a | | |
| | baa4ea3cd8 | | |
| | 75ef0f0329 | | |
| | 65185c0011 | | |
| | eb94613d7d | | |
| | 67f4f4fb49 | | |
| | a7ecf4ac4c | | |
| | 45765b625a | | |
| | aa0a184ebe | | |
| | 069f9f0d5d | | |
| | c82b520ea8 | | |
| | 9d6e5bde4a | | |
| | 0eb3669fbf | | |
| | 30449b6054 | | |
| | f5f71a19b8 | | |
| | 0135971769 | | |
| | 8579795c40 | | |
| | 9d77fd7eec | | |
| | 8c40d1bd72 | | |
| | 7a0bc7d888 | | |
| | 1e07014f86 | | |
| | 49281b24e5 | | |
| | a8b1980de4 | | |
| | b8cd5f0482 | | |
| | cc9f0788aa | | |
| | 209910299d | | |

2

.gitignore

vendored

@@ -1,3 +1,3 @@

Cargo.lock
/target/
**/*.rs.bk

@@ -9,7 +9,7 @@ matrix:
- rust: stable
- rust: nightly
env:
- FEATURES='asm,unstable'
- FEATURES='unstable'
before_script: |
export PATH="$PATH:$HOME/.cargo/bin"
rustup component add rustfmt-preview

482

Cargo.lock

generated

@@ -1,482 +0,0 @@

|

||||

[[package]]

|

||||

name = "arrayref"

|

||||

version = "0.3.4"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "arrayvec"

|

||||

version = "0.4.7"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"nodrop 0.1.12 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "bincode"

|

||||

version = "1.0.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"byteorder 1.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "bitflags"

|

||||

version = "1.0.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "block-buffer"

|

||||

version = "0.3.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"arrayref 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"byte-tools 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "byte-tools"

|

||||

version = "0.2.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "byteorder"

|

||||

version = "1.2.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "cfg-if"

|

||||

version = "0.1.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "chrono"

|

||||

version = "0.4.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"num 0.1.42 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"time 0.1.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "crossbeam-deque"

|

||||

version = "0.2.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"crossbeam-epoch 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"crossbeam-utils 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "crossbeam-epoch"

|

||||

version = "0.3.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"arrayvec 0.4.7 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"cfg-if 0.1.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"crossbeam-utils 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"lazy_static 0.2.11 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"memoffset 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"nodrop 0.1.12 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"scopeguard 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "crossbeam-utils"

|

||||

version = "0.2.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"cfg-if 0.1.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "digest"

|

||||

version = "0.7.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"generic-array 0.9.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "dtoa"

|

||||

version = "0.4.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "either"

|

||||

version = "1.4.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "fake-simd"

|

||||

version = "0.1.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "fuchsia-zircon"

|

||||

version = "0.3.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"bitflags 1.0.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"fuchsia-zircon-sys 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "fuchsia-zircon-sys"

|

||||

version = "0.3.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "gcc"

|

||||

version = "0.3.54"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "generic-array"

|

||||

version = "0.8.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"nodrop 0.1.12 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"typenum 1.9.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "generic-array"

|

||||

version = "0.9.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"typenum 1.9.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "itoa"

|

||||

version = "0.3.4"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "lazy_static"

|

||||

version = "0.2.11"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "lazy_static"

|

||||

version = "1.0.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "libc"

|

||||

version = "0.2.39"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "memoffset"

|

||||

version = "0.2.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "nodrop"

|

||||

version = "0.1.12"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "num"

|

||||

version = "0.1.42"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"num-integer 0.1.36 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"num-iter 0.1.35 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"num-traits 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "num-integer"

|

||||

version = "0.1.36"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"num-traits 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "num-iter"

|

||||

version = "0.1.35"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"num-integer 0.1.36 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"num-traits 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "num-traits"

|

||||

version = "0.2.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "num_cpus"

|

||||

version = "1.8.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "proc-macro2"

|

||||

version = "0.2.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"unicode-xid 0.1.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "quote"

|

||||

version = "0.4.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"proc-macro2 0.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "rand"

|

||||

version = "0.4.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"fuchsia-zircon 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"winapi 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "rayon"

|

||||

version = "0.8.2"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"rayon-core 1.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "rayon"

|

||||

version = "1.0.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"either 1.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rayon-core 1.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "rayon-core"

|

||||

version = "1.4.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"crossbeam-deque 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"lazy_static 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"num_cpus 1.8.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rand 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "redox_syscall"

|

||||

version = "0.1.37"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "ring"

|

||||

version = "0.12.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"gcc 0.3.54 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"lazy_static 0.2.11 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rayon 0.8.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"untrusted 0.5.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "scopeguard"

|

||||

version = "0.3.3"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "serde"

|

||||

version = "1.0.28"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "serde_derive"

|

||||

version = "1.0.28"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"proc-macro2 0.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"quote 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde_derive_internals 0.20.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"syn 0.12.13 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "serde_derive_internals"

|

||||

version = "0.20.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"proc-macro2 0.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"syn 0.12.13 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "serde_json"

|

||||

version = "1.0.10"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"dtoa 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"itoa 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"num-traits 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "sha2"

|

||||

version = "0.7.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"block-buffer 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"byte-tools 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"digest 0.7.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"fake-simd 0.1.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "sha2-asm"

|

||||

version = "0.3.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"gcc 0.3.54 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"generic-array 0.8.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "silk"

|

||||

version = "0.3.3"

|

||||

dependencies = [

|

||||

"bincode 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"chrono 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"generic-array 0.9.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rayon 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"ring 0.12.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde_derive 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde_json 1.0.10 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"sha2 0.7.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"sha2-asm 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"untrusted 0.5.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "syn"

|

||||

version = "0.12.13"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"proc-macro2 0.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"quote 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"unicode-xid 0.1.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "time"

|

||||

version = "0.1.39"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"redox_syscall 0.1.37 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"winapi 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "typenum"

|

||||

version = "1.9.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "unicode-xid"

|

||||

version = "0.1.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "untrusted"

|

||||

version = "0.5.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "winapi"

|

||||

version = "0.3.4"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"winapi-i686-pc-windows-gnu 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"winapi-x86_64-pc-windows-gnu 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "winapi-i686-pc-windows-gnu"

|

||||

version = "0.4.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "winapi-x86_64-pc-windows-gnu"

|

||||

version = "0.4.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[metadata]

|

||||

"checksum arrayref 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)" = "0fd1479b7c29641adbd35ff3b5c293922d696a92f25c8c975da3e0acbc87258f"

|

||||

"checksum arrayvec 0.4.7 (registry+https://github.com/rust-lang/crates.io-index)" = "a1e964f9e24d588183fcb43503abda40d288c8657dfc27311516ce2f05675aef"

|

||||

"checksum bincode 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "bda13183df33055cbb84b847becce220d392df502ebe7a4a78d7021771ed94d0"

|

||||

"checksum bitflags 1.0.1 (registry+https://github.com/rust-lang/crates.io-index)" = "b3c30d3802dfb7281680d6285f2ccdaa8c2d8fee41f93805dba5c4cf50dc23cf"

|

||||

"checksum block-buffer 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)" = "a076c298b9ecdb530ed9d967e74a6027d6a7478924520acddcddc24c1c8ab3ab"

|

||||

"checksum byte-tools 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)" = "560c32574a12a89ecd91f5e742165893f86e3ab98d21f8ea548658eb9eef5f40"

|

||||

"checksum byteorder 1.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "652805b7e73fada9d85e9a6682a4abd490cb52d96aeecc12e33a0de34dfd0d23"

|

||||

"checksum cfg-if 0.1.2 (registry+https://github.com/rust-lang/crates.io-index)" = "d4c819a1287eb618df47cc647173c5c4c66ba19d888a6e50d605672aed3140de"

|

||||

"checksum chrono 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "7c20ebe0b2b08b0aeddba49c609fe7957ba2e33449882cb186a180bc60682fa9"

|

||||

"checksum crossbeam-deque 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)" = "f739f8c5363aca78cfb059edf753d8f0d36908c348f3d8d1503f03d8b75d9cf3"

|

||||

"checksum crossbeam-epoch 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)" = "59796cc6cbbdc6bb319161349db0c3250ec73ec7fcb763a51065ec4e2e158552"

|

||||

"checksum crossbeam-utils 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)" = "2760899e32a1d58d5abb31129f8fae5de75220bc2176e77ff7c627ae45c918d9"

|

||||

"checksum digest 0.7.2 (registry+https://github.com/rust-lang/crates.io-index)" = "00a49051fef47a72c9623101b19bd71924a45cca838826caae3eaa4d00772603"

|

||||

"checksum dtoa 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)" = "09c3753c3db574d215cba4ea76018483895d7bff25a31b49ba45db21c48e50ab"

|

||||

"checksum either 1.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "740178ddf48b1a9e878e6d6509a1442a2d42fd2928aae8e7a6f8a36fb01981b3"

|

||||

"checksum fake-simd 0.1.2 (registry+https://github.com/rust-lang/crates.io-index)" = "e88a8acf291dafb59c2d96e8f59828f3838bb1a70398823ade51a84de6a6deed"

|

||||

"checksum fuchsia-zircon 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)" = "2e9763c69ebaae630ba35f74888db465e49e259ba1bc0eda7d06f4a067615d82"

|

||||

"checksum fuchsia-zircon-sys 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)" = "3dcaa9ae7725d12cdb85b3ad99a434db70b468c09ded17e012d86b5c1010f7a7"

|

||||

"checksum gcc 0.3.54 (registry+https://github.com/rust-lang/crates.io-index)" = "5e33ec290da0d127825013597dbdfc28bee4964690c7ce1166cbc2a7bd08b1bb"

|

||||

"checksum generic-array 0.8.3 (registry+https://github.com/rust-lang/crates.io-index)" = "fceb69994e330afed50c93524be68c42fa898c2d9fd4ee8da03bd7363acd26f2"

|

||||

"checksum generic-array 0.9.0 (registry+https://github.com/rust-lang/crates.io-index)" = "ef25c5683767570c2bbd7deba372926a55eaae9982d7726ee2a1050239d45b9d"

|

||||

"checksum itoa 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)" = "8324a32baf01e2ae060e9de58ed0bc2320c9a2833491ee36cd3b4c414de4db8c"

|

||||

"checksum lazy_static 0.2.11 (registry+https://github.com/rust-lang/crates.io-index)" = "76f033c7ad61445c5b347c7382dd1237847eb1bce590fe50365dcb33d546be73"

|

||||

"checksum lazy_static 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "c8f31047daa365f19be14b47c29df4f7c3b581832407daabe6ae77397619237d"

|

||||

"checksum libc 0.2.39 (registry+https://github.com/rust-lang/crates.io-index)" = "f54263ad99207254cf58b5f701ecb432c717445ea2ee8af387334bdd1a03fdff"

|

||||

"checksum memoffset 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "0f9dc261e2b62d7a622bf416ea3c5245cdd5d9a7fcc428c0d06804dfce1775b3"

|

||||

"checksum nodrop 0.1.12 (registry+https://github.com/rust-lang/crates.io-index)" = "9a2228dca57108069a5262f2ed8bd2e82496d2e074a06d1ccc7ce1687b6ae0a2"

|

||||

"checksum num 0.1.42 (registry+https://github.com/rust-lang/crates.io-index)" = "4703ad64153382334aa8db57c637364c322d3372e097840c72000dabdcf6156e"

|

||||

"checksum num-integer 0.1.36 (registry+https://github.com/rust-lang/crates.io-index)" = "f8d26da319fb45674985c78f1d1caf99aa4941f785d384a2ae36d0740bc3e2fe"

|

||||

"checksum num-iter 0.1.35 (registry+https://github.com/rust-lang/crates.io-index)" = "4b226df12c5a59b63569dd57fafb926d91b385dfce33d8074a412411b689d593"

|

||||

"checksum num-traits 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "0b3c2bd9b9d21e48e956b763c9f37134dc62d9e95da6edb3f672cacb6caf3cd3"

|

||||

"checksum num_cpus 1.8.0 (registry+https://github.com/rust-lang/crates.io-index)" = "c51a3322e4bca9d212ad9a158a02abc6934d005490c054a2778df73a70aa0a30"

|

||||

"checksum proc-macro2 0.2.3 (registry+https://github.com/rust-lang/crates.io-index)" = "cd07deb3c6d1d9ff827999c7f9b04cdfd66b1b17ae508e14fe47b620f2282ae0"

|

||||

"checksum quote 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)" = "1eca14c727ad12702eb4b6bfb5a232287dcf8385cb8ca83a3eeaf6519c44c408"

|

||||

"checksum rand 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)" = "eba5f8cb59cc50ed56be8880a5c7b496bfd9bd26394e176bc67884094145c2c5"

|

||||

"checksum rayon 0.8.2 (registry+https://github.com/rust-lang/crates.io-index)" = "b614fe08b6665cb9a231d07ac1364b0ef3cb3698f1239ee0c4c3a88a524f54c8"

|

||||

"checksum rayon 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "485541959c8ecc49865526fe6c4de9653dd6e60d829d6edf0be228167b60372d"

|

||||

"checksum rayon-core 1.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "9d24ad214285a7729b174ed6d3bcfcb80177807f959d95fafd5bfc5c4f201ac8"

|

||||

"checksum redox_syscall 0.1.37 (registry+https://github.com/rust-lang/crates.io-index)" = "0d92eecebad22b767915e4d529f89f28ee96dbbf5a4810d2b844373f136417fd"

|

||||

"checksum ring 0.12.1 (registry+https://github.com/rust-lang/crates.io-index)" = "6f7d28b30a72c01b458428e0ae988d4149c20d902346902be881e3edc4bb325c"

|

||||

"checksum scopeguard 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)" = "94258f53601af11e6a49f722422f6e3425c52b06245a5cf9bc09908b174f5e27"

|

||||

"checksum serde 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)" = "e928fecdb00fe608c96f83a012633383564e730962fc7a0b79225a6acf056798"

|

||||

"checksum serde_derive 1.0.28 (registry+https://github.com/rust-lang/crates.io-index)" = "95f666a2356d87ce4780ea15b14b13532785579a5cad2dcba5292acc75f6efe2"

|

||||

"checksum serde_derive_internals 0.20.0 (registry+https://github.com/rust-lang/crates.io-index)" = "1fc848d073be32cd982380c06587ea1d433bc1a4c4a111de07ec2286a3ddade8"

|

||||

"checksum serde_json 1.0.10 (registry+https://github.com/rust-lang/crates.io-index)" = "57781ed845b8e742fc2bf306aba8e3b408fe8c366b900e3769fbc39f49eb8b39"

|

||||

"checksum sha2 0.7.0 (registry+https://github.com/rust-lang/crates.io-index)" = "7daca11f2fdb8559c4f6c588386bed5e2ad4b6605c1442935a7f08144a918688"

|

||||

"checksum sha2-asm 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)" = "3e319010fd740857efd4428b8ee1b74f311aeb0fda1ece174a9bad6741182d26"

|

||||

"checksum syn 0.12.13 (registry+https://github.com/rust-lang/crates.io-index)" = "517f6da31bc53bf080b9a77b29fbd0ff8da2f5a2ebd24c73c2238274a94ac7cb"

|

||||

"checksum time 0.1.39 (registry+https://github.com/rust-lang/crates.io-index)" = "a15375f1df02096fb3317256ce2cee6a1f42fc84ea5ad5fc8c421cfe40c73098"

|

||||

"checksum typenum 1.9.0 (registry+https://github.com/rust-lang/crates.io-index)" = "13a99dc6780ef33c78780b826cf9d2a78840b72cae9474de4bcaf9051e60ebbd"

|

||||

"checksum unicode-xid 0.1.0 (registry+https://github.com/rust-lang/crates.io-index)" = "fc72304796d0818e357ead4e000d19c9c174ab23dc11093ac919054d20a6a7fc"

|

||||

"checksum untrusted 0.5.1 (registry+https://github.com/rust-lang/crates.io-index)" = "f392d7819dbe58833e26872f5f6f0d68b7bbbe90fc3667e98731c4a15ad9a7ae"

|

||||

"checksum winapi 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)" = "04e3bd221fcbe8a271359c04f21a76db7d0c6028862d1bb5512d85e1e2eb5bb3"

|

||||

"checksum winapi-i686-pc-windows-gnu 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "ac3b87c63620426dd9b991e5ce0329eff545bccbbb34f3be09ff6fb6ab51b7b6"

|

||||

"checksum winapi-x86_64-pc-windows-gnu 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "712e227841d057c1ee1cd2fb22fa7e5a5461ae8e48fa2ca79ec42cfc1931183f"

|

||||

33

Cargo.toml

@@ -1,51 +1,50 @@
[package]
name = "silk"
description = "A silky smooth implementation of the Loom architecture"
version = "0.3.3"
documentation = "https://docs.rs/silk"
name = "solana"
description = "High Performance Blockchain"
version = "0.4.0"
documentation = "https://docs.rs/solana"
homepage = "http://loomprotocol.com/"
repository = "https://github.com/loomprotocol/silk"
repository = "https://github.com/solana-labs/solana"
authors = [
"Anatoly Yakovenko <aeyakovenko@gmail.com>",
"Greg Fitzgerald <garious@gmail.com>",
"Anatoly Yakovenko <anatoly@solana.co>",
"Greg Fitzgerald <greg@solana.co>",
]
license = "Apache-2.0"

[[bin]]
name = "silk-historian-demo"
name = "solana-historian-demo"
path = "src/bin/historian-demo.rs"

[[bin]]
name = "silk-client-demo"
name = "solana-client-demo"
path = "src/bin/client-demo.rs"

[[bin]]
name = "silk-testnode"
name = "solana-testnode"
path = "src/bin/testnode.rs"

[[bin]]
name = "silk-genesis"
name = "solana-genesis"
path = "src/bin/genesis.rs"

[[bin]]
name = "silk-genesis-demo"
name = "solana-genesis-demo"
path = "src/bin/genesis-demo.rs"

[[bin]]
name = "silk-mint"
name = "solana-mint"
path = "src/bin/mint.rs"

[badges]
codecov = { repository = "loomprotocol/silk", branch = "master", service = "github" }
codecov = { repository = "solana-labs/solana", branch = "master", service = "github" }

[features]
unstable = []
asm = ["sha2-asm"]
ipv6 = []

[dependencies]
rayon = "1.0.0"
sha2 = "0.7.0"
sha2-asm = {version="0.3", optional=true}
generic-array = { version = "0.9.0", default-features = false, features = ["serde"] }
serde = "1.0.27"
serde_derive = "1.0.27"

@@ -54,3 +53,5 @@ ring = "0.12.1"
untrusted = "0.5.1"
bincode = "1.0.0"
chrono = { version = "0.4.0", features = ["serde"] }
log = "^0.4.1"
matches = "^0.1.6"

61

README.md


@@ -1,25 +1,18 @@

|

||||

[](https://crates.io/crates/silk)

|

||||

[](https://docs.rs/silk)

|

||||

[](https://travis-ci.org/loomprotocol/silk)

|

||||

[](https://codecov.io/gh/loomprotocol/silk)

|

||||

[](https://crates.io/crates/solana)

|

||||

[](https://docs.rs/solana)

|

||||

[](https://travis-ci.org/solana-labs/solana)

|

||||

[](https://codecov.io/gh/solana-labs/solana)

|

||||

|

||||

Disclaimer

|

||||

===

|

||||

|

||||

All claims, content, designs, algorithms, estimates, roadmaps, specifications, and performance measurements described in this project are done with the author's best effort. It is up to the reader to check and validate their accuracy and truthfulness. Furthermore nothing in this project constitutes a solicitation for investment.

|

||||

|

||||

Silk, a silky smooth implementation of the Loom specification

|

||||

Solana: High Performance Blockchain

|

||||

===

|

||||

|

||||

Loom™ is a new architecture for a high performance blockchain. Its white paper boasts a theoretical

|

||||

throughput of 710k transactions per second on a 1 gbps network. The specification is implemented

|

||||

in two git repositories. Research is performed in the loom repository. That work drives the

|

||||

Loom specification forward. This repository, on the other hand, aims to implement the specification

|

||||

as-is. We care a great deal about quality, clarity and short learning curve. We avoid the use

|

||||

of `unsafe` Rust and write tests for *everything*. Optimizations are only added when

|

||||

corresponding benchmarks are also added that demonstrate real performance boosts. We expect the

|

||||

feature set here will always be a ways behind the loom repo, but that this is an implementation

|

||||

you can take to the bank, literally.

|

||||

Solana™ is a new architecture for a high performance blockchain. It aims to support

|

||||

over 700 thousand transactions per second on a gigabit network.

|

||||

|

||||

Running the demo

|

||||

===

|

||||

@@ -31,57 +24,51 @@ $ curl https://sh.rustup.rs -sSf | sh

|

||||

$ source $HOME/.cargo/env

|

||||

```

|

||||

|

||||

Install the silk executables:

|

||||

|

||||

```bash

|

||||

$ cargo install silk

|

||||

```

|

||||

|

||||

The testnode server is initialized with a transaction log from stdin and

|

||||

generates new log entries on stdout. To create the input log, we'll need

|

||||

to create *the mint* and use it to generate a *genesis log*. It's done in

|

||||

The testnode server is initialized with a ledger from stdin and

|

||||

generates new ledger entries on stdout. To create the input ledger, we'll need

|

||||

to create *the mint* and use it to generate a *genesis ledger*. It's done in

|

||||

two steps because the mint.json file contains a private key that will be

|

||||

used later in this demo.

|

||||

|

||||

```bash

|

||||

$ echo 500 | silk-mint > mint.json

|

||||

$ cat mint.json | silk-genesis > genesis.log

|

||||

$ echo 1000000000 | cargo run --release --bin solana-mint | tee mint.json

|

||||

$ cat mint.json | cargo run --release --bin solana-genesis | tee genesis.log

|

||||

```

|

||||

|

||||

Now you can start the server:

|

||||

|

||||

```bash

|

||||

$ cat genesis.log | silk-testnode > transactions0.log

|

||||

$ cat genesis.log | cargo run --release --bin solana-testnode | tee transactions0.log

|

||||

```

|

||||

|

||||

Then, in a separate shell, let's execute some transactions. Note we pass in

|

||||

the JSON configuration file here, not the genesis log.

|

||||

the JSON configuration file here, not the genesis ledger.

|

||||

|

||||

```bash

|

||||

$ cat mint.json | silk-client-demo

|

||||

$ cat mint.json | cargo run --release --bin solana-client-demo

|

||||

```

|

||||

|

||||

Now kill the server with Ctrl-C, and take a look at the transaction log. You should

|

||||

Now kill the server with Ctrl-C, and take a look at the ledger. You should

|

||||

see something similar to:

|

||||

|

||||

```json

|

||||

{"num_hashes":27,"id":[0, "..."],"event":"Tick"}

|

||||

{"num_hashes":3,"id":[67, "..."],"event":{"Transaction":{"asset":42}}}

|

||||

{"num_hashes":3,"id":[67, "..."],"event":{"Transaction":{"tokens":42}}}

|

||||

{"num_hashes":27,"id":[0, "..."],"event":"Tick"}

|

||||

```

|

||||

|

||||

Now restart the server from where we left off. Pass it both the genesis log, and

|

||||

the transaction log.

|

||||

Now restart the server from where we left off. Pass it both the genesis ledger, and

|

||||

the transaction ledger.

|

||||

|

||||

```bash

|

||||

$ cat genesis.log transactions0.log | silk-testnode > transactions1.log

|

||||

$ cat genesis.log transactions0.log | cargo run --release --bin solana-testnode | tee transactions1.log

|

||||

```

|

||||

|

||||

Lastly, run the client demo again, and verify that all funds were spent in the

|

||||

previous round, and so no additional transactions are added.

|

||||

|

||||

```bash

|

||||

$ cat mint.json | silk-client-demo

|

||||

$ cat mint.json | cargo run --release --bin solana-client-demo

|

||||

```

|

||||

|

||||

Stop the server again, and verify there are only Tick entries, and no Transaction entries.
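The final check above can also be done programmatically. The following is a minimal sketch, assuming the ledger is written one JSON object per line in the abbreviated shape shown earlier. The helper name `count_events` is hypothetical, and it uses plain substring matching rather than a real JSON parser, so treat it as an illustration only:

```rust
/// Count Tick and Transaction entries in a ledger given as JSON lines.
/// Assumption: each line looks like the abbreviated entries shown in the README.
/// This is substring matching, not JSON parsing.
fn count_events(ledger: &str) -> (usize, usize) {
    let mut ticks = 0;
    let mut transactions = 0;
    for line in ledger.lines().filter(|l| !l.trim().is_empty()) {
        if line.contains("\"event\":\"Tick\"") {
            ticks += 1;
        } else if line.contains("\"Transaction\"") {
            transactions += 1;
        }
    }
    (ticks, transactions)
}

fn main() {
    // Sample input copied from the abbreviated ledger output above.
    let ledger = r#"{"num_hashes":27,"id":[0, "..."],"event":"Tick"}
{"num_hashes":3,"id":[67, "..."],"event":{"Transaction":{"tokens":42}}}
{"num_hashes":27,"id":[0, "..."],"event":"Tick"}"#;
    let (ticks, transactions) = count_events(ledger);
    println!("ticks = {}, transactions = {}", ticks, transactions);
}
```

For the second run of the demo, the transaction count would be expected to be zero.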

|

||||

@@ -103,8 +90,8 @@ $ rustup component add rustfmt-preview

|

||||

Download the source code:

|

||||

|

||||

```bash

|

||||

$ git clone https://github.com/loomprotocol/silk.git

|

||||

$ cd silk

|

||||

$ git clone https://github.com/solana-labs/solana.git

|

||||

$ cd solana

|

||||

```

|

||||

|

||||

Testing

|

||||

@@ -128,5 +115,5 @@ $ rustup install nightly

|

||||

Run the benchmarks:

|

||||

|

||||

```bash

|

||||

$ cargo +nightly bench --features="asm,unstable"

|

||||

$ cargo +nightly bench --features="unstable"

|

||||

```

|

||||

|

||||

15

doc/consensus.msc

Normal file


@@ -0,0 +1,15 @@

|

||||

msc {

|

||||

client,leader,verifier_a,verifier_b,verifier_c;

|

||||

|

||||

client=>leader [ label = "SUBMIT" ] ;

|

||||

leader=>client [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_a [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_b [ label = "CONFIRMED" ] ;

|

||||

leader=>verifier_c [ label = "CONFIRMED" ] ;

|

||||

verifier_a=>leader [ label = "VERIFIED" ] ;

|

||||

verifier_b=>leader [ label = "VERIFIED" ] ;

|

||||

leader=>client [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_a [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_b [ label = "FINALIZED" ] ;

|

||||

leader=>verifier_c [ label = "FINALIZED" ] ;

|

||||

}
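The message sequence in this chart can be read as a small state machine on the leader. The sketch below is an illustration only, not the project's implementation. The `Leader` and `Status` types are hypothetical, and the quorum of two verifiers is an assumption read off the chart (two `VERIFIED` replies precede `FINALIZED`), not something the chart states:

```rust
use std::collections::HashSet;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Status {
    Pending,
    Confirmed,
    Finalized,
}

// Hypothetical leader-side bookkeeping for a single submitted transaction.
struct Leader {
    status: Status,
    quorum: usize, // assumed threshold, not taken from the source
    verified_by: HashSet<&'static str>,
}

impl Leader {
    fn new(quorum: usize) -> Self {
        Leader {
            status: Status::Pending,
            quorum,
            verified_by: HashSet::new(),
        }
    }

    // client=>leader SUBMIT; the leader then reports CONFIRMED.
    fn submit(&mut self) -> Status {
        self.status = Status::Confirmed;
        self.status
    }

    // verifier=>leader VERIFIED; FINALIZED once enough distinct verifiers reply.
    fn verified(&mut self, verifier: &'static str) -> Status {
        if self.status == Status::Confirmed {
            self.verified_by.insert(verifier);
            if self.verified_by.len() >= self.quorum {
                self.status = Status::Finalized;
            }
        }
        self.status
    }
}

fn main() {
    let mut leader = Leader::new(2);
    println!("{:?}", leader.submit());
    println!("{:?}", leader.verified("verifier_a"));
    println!("{:?}", leader.verified("verifier_b"));
}
```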

|

||||

@@ -1,27 +1,27 @@

|

||||

The Historian

|

||||

===

|

||||

|

||||

Create a *Historian* and send it *events* to generate an *event log*, where each log *entry*

|

||||

Create a *Historian* and send it *events* to generate an *event log*, where each *entry*

|

||||

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

||||

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

||||

|

||||

|

||||

|

||||

```rust

|

||||

extern crate silk;

|

||||

extern crate solana;

|

||||

|

||||

use silk::historian::Historian;

|

||||

use silk::log::{verify_slice, Entry, Hash};

|

||||

use silk::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||

use solana::historian::Historian;

|

||||

use solana::ledger::{verify_slice, Entry, Hash};

|

||||

use solana::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||

use std::thread::sleep;

|

||||

use std::time::Duration;

|

||||

use std::sync::mpsc::SendError;

|

||||

|

||||

fn create_log(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

fn create_ledger(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

sleep(Duration::from_millis(15));

|

||||

let asset = Hash::default();

|

||||

let tokens = 42;

|

||||

let keypair = generate_keypair();

|

||||

let event0 = Event::new_claim(get_pubkey(&keypair), asset, sign_claim_data(&asset, &keypair));

|

||||

let event0 = Event::new_claim(get_pubkey(&keypair), tokens, sign_claim_data(&tokens, &keypair));

|

||||

hist.sender.send(event0)?;

|

||||

sleep(Duration::from_millis(10));

|

||||

Ok(())

|

||||

@@ -30,7 +30,7 @@ fn create_log(hist: &Historian<Hash>) -> Result<(), SendError<Event<Hash>>> {

|

||||

fn main() {

|

||||

let seed = Hash::default();

|

||||

let hist = Historian::new(&seed, Some(10));

|

||||

create_log(&hist).expect("send error");

|

||||

create_ledger(&hist).expect("send error");

|

||||

drop(hist.sender);

|

||||

let entries: Vec<Entry<Hash>> = hist.receiver.iter().collect();

|

||||

for entry in &entries {

|

||||

@@ -42,11 +42,11 @@ fn main() {

|

||||

}

|

||||

```

|

||||

|

||||

Running the program should produce a log similar to:

|

||||

Running the program should produce a ledger similar to:

|

||||

|

||||

```rust

|

||||

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { asset: [37, ...] } }

|

||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { tokens: 42 } }

|

||||

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

||||

```
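To make the verification step concrete, here is a dependency-free sketch of checking that each entry's hash can be regenerated from the previous one. It is not the crate's `verify_slice`. The `Entry` and `Event` types are simplified stand-ins, ids are `u64` values, and `DefaultHasher` replaces the cryptographic hash purely so the example runs without external crates. It also assumes transaction entries carry at least one hash:

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

#[derive(Debug, Clone, PartialEq)]
enum Event {
    Tick,
    Transaction(String),
}

#[derive(Debug, Clone)]
struct Entry {
    num_hashes: u64,
    id: u64,
    event: Event,
}

// Stand-in hash: NOT cryptographic, used only to keep the sketch self-contained.
fn hash_once(prev: u64, data: Option<&str>) -> u64 {
    let mut hasher = DefaultHasher::new();
    prev.hash(&mut hasher);
    if let Some(d) = data {
        d.hash(&mut hasher);
    }
    hasher.finish()
}

// Hash `num_hashes` times from `start`, mixing transaction data into the last hash.
fn next_entry(start: u64, num_hashes: u64, event: Event) -> Entry {
    let mut id = start;
    for i in 0..num_hashes {
        let data = match &event {
            Event::Transaction(d) if i + 1 == num_hashes => Some(d.as_str()),
            _ => None,
        };
        id = hash_once(id, data);
    }
    Entry { num_hashes, id, event }
}

// Recompute every entry id from its predecessor, starting at `seed`.
fn verify_chain(entries: &[Entry], seed: u64) -> bool {
    let mut prev = seed;
    for entry in entries {
        let expected = next_entry(prev, entry.num_hashes, entry.event.clone());
        if expected.id != entry.id {
            return false;
        }
        prev = entry.id;
    }
    true
}

fn main() {
    let seed = 0u64;
    let e0 = next_entry(seed, 0, Event::Tick);
    let e1 = next_entry(e0.id, 3, Event::Transaction("d0".to_string()));
    let e2 = next_entry(e1.id, 3, Event::Tick);
    let entries = vec![e0, e1, e2];
    println!("{:?}", entries);
    println!("chain verifies: {}", verify_chain(&entries, seed));
}
```

Reordering or altering any entry changes the ids that follow it, which is what lets a verifier detect tampering.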

|

||||

|

||||

|

||||

@@ -1,17 +1,17 @@

|

||||

msc {

|

||||

client,historian,logger;

|

||||

client,historian,recorder;

|

||||

|

||||

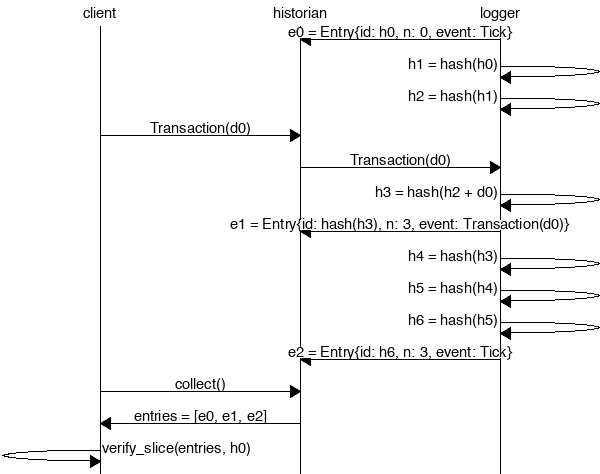

logger=>historian [ label = "e0 = Entry{id: h0, n: 0, event: Tick}" ] ;

|

||||

logger=>logger [ label = "h1 = hash(h0)" ] ;

|

||||

logger=>logger [ label = "h2 = hash(h1)" ] ;

|

||||

recorder=>historian [ label = "e0 = Entry{id: h0, n: 0, event: Tick}" ] ;

|

||||

recorder=>recorder [ label = "h1 = hash(h0)" ] ;

|

||||

recorder=>recorder [ label = "h2 = hash(h1)" ] ;

|

||||

client=>historian [ label = "Transaction(d0)" ] ;

|

||||

historian=>logger [ label = "Transaction(d0)" ] ;

|

||||

logger=>logger [ label = "h3 = hash(h2 + d0)" ] ;

|

||||

logger=>historian [ label = "e1 = Entry{id: hash(h3), n: 3, event: Transaction(d0)}" ] ;

|

||||

logger=>logger [ label = "h4 = hash(h3)" ] ;

|

||||

logger=>logger [ label = "h5 = hash(h4)" ] ;

|

||||

logger=>logger [ label = "h6 = hash(h5)" ] ;

|

||||

logger=>historian [ label = "e2 = Entry{id: h6, n: 3, event: Tick}" ] ;

|

||||

historian=>recorder [ label = "Transaction(d0)" ] ;

|

||||

recorder=>recorder [ label = "h3 = hash(h2 + d0)" ] ;

|

||||

recorder=>historian [ label = "e1 = Entry{id: hash(h3), n: 3, event: Transaction(d0)}" ] ;

|

||||

recorder=>recorder [ label = "h4 = hash(h3)" ] ;

|

||||

recorder=>recorder [ label = "h5 = hash(h4)" ] ;

|

||||

recorder=>recorder [ label = "h6 = hash(h5)" ] ;

|

||||

recorder=>historian [ label = "e2 = Entry{id: h6, n: 3, event: Tick}" ] ;

|

||||

client=>historian [ label = "collect()" ] ;

|

||||

historian=>client [ label = "entries = [e0, e1, e2]" ] ;

|

||||

client=>client [ label = "verify_slice(entries, h0)" ] ;

|

||||

|

||||

@@ -1,18 +1,22 @@

|

||||

//! The `accountant` is a client of the `historian`. It uses the historian's

|

||||

//! event log to record transactions. Its users can deposit funds and

|

||||

//! transfer funds to other users.

|

||||

//! The `accountant` module tracks client balances, and the progress of pending

|

||||

//! transactions. It offers a high-level public API that signs transactions

|

||||

//! on behalf of the caller, and a private low-level API for when they have

|

||||

//! already been signed and verified.

|

||||

|

||||

use hash::Hash;

|

||||

use chrono::prelude::*;

|

||||

use entry::Entry;

|

||||

use event::Event;

|

||||

use transaction::{Condition, Transaction};

|

||||

use signature::{KeyPair, PublicKey, Signature};

|

||||

use hash::Hash;

|

||||

use historian::Historian;

|

||||

use mint::Mint;

|

||||

use historian::{reserve_signature, Historian};

|

||||

use std::sync::mpsc::SendError;

|

||||

use plan::{Plan, Witness};

|

||||

use recorder::Signal;

|

||||

use signature::{KeyPair, PublicKey, Signature};

|

||||

use std::collections::hash_map::Entry::Occupied;

|

||||

use std::collections::{HashMap, HashSet};

|

||||

use std::result;

|

||||

use chrono::prelude::*;

|

||||

use std::sync::mpsc::SendError;

|

||||

use transaction::Transaction;

|

||||

|

||||

#[derive(Debug, PartialEq, Eq)]

|

||||

pub enum AccountingError {

|

||||

@@ -24,25 +28,32 @@ pub enum AccountingError {

|

||||

|

||||

pub type Result<T> = result::Result<T, AccountingError>;

|

||||

|

||||

/// Commit funds to the 'to' party.

|

||||

fn complete_transaction(balances: &mut HashMap<PublicKey, i64>, plan: &Plan) {

|

||||

if let Plan::Pay(ref payment) = *plan {

|

||||

*balances.entry(payment.to).or_insert(0) += payment.tokens;

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Accountant {

|

||||

pub historian: Historian,

|

||||

pub balances: HashMap<PublicKey, i64>,

|

||||

pub first_id: Hash,

|

||||

pub last_id: Hash,

|

||||

pending: HashMap<Signature, Transaction<i64>>,

|

||||

pending: HashMap<Signature, Plan>,

|

||||

time_sources: HashSet<PublicKey>,

|

||||

last_time: DateTime<Utc>,

|

||||

}

|

||||

|

||||

impl Accountant {

|

||||

/// Create an Accountant using an existing ledger.

|

||||

pub fn new_from_entries<I>(entries: I, ms_per_tick: Option<u64>) -> Self

|

||||

where

|

||||

I: IntoIterator<Item = Entry>,

|

||||

{

|

||||

let mut entries = entries.into_iter();

|

||||

|

||||

// The first item in the log is required to be an entry with zero num_hashes,

|

||||

// which implies its id can be used as the log's seed.

|

||||

// The first item in the ledger is required to be an entry with zero num_hashes,

|

||||

// which implies its id can be used as the ledger's seed.

|

||||

let entry0 = entries.next().unwrap();

|

||||

let start_hash = entry0.id;

|

||||

|

||||

@@ -51,146 +62,106 @@ impl Accountant {

|

||||

historian: hist,

|

||||

balances: HashMap::new(),

|

||||

first_id: start_hash,

|

||||

last_id: start_hash,

|

||||

pending: HashMap::new(),

|

||||

time_sources: HashSet::new(),

|

||||

last_time: Utc.timestamp(0, 0),

|

||||

};

|

||||

|

||||

// The second item in the log is a special transaction where the to and from

|

||||

// The second item in the ledger is a special transaction where the to and from

|

||||

// fields are the same. That entry should be treated as a deposit, not a

|

||||

// transfer to oneself.

|

||||

let entry1 = entries.next().unwrap();

|

||||

acc.process_verified_event(&entry1.event, true).unwrap();

|

||||

acc.process_verified_event(&entry1.events[0], true).unwrap();

|

||||

|

||||

for entry in entries {

|

||||

acc.process_verified_event(&entry.event, false).unwrap();

|

||||

for event in entry.events {

|

||||

acc.process_verified_event(&event, false).unwrap();

|

||||

}

|

||||

}

|

||||

acc

|

||||

}

|

||||

|

||||

/// Create an Accountant with only a Mint. Typically used by unit tests.

|

||||

pub fn new(mint: &Mint, ms_per_tick: Option<u64>) -> Self {

|

||||

Self::new_from_entries(mint.create_entries(), ms_per_tick)

|

||||

}

|

||||

|

||||

pub fn sync(self: &mut Self) -> Hash {

|

||||

while let Ok(entry) = self.historian.receiver.try_recv() {

|

||||

self.last_id = entry.id;

|

||||

fn is_deposit(allow_deposits: bool, from: &PublicKey, plan: &Plan) -> bool {

|

||||

if let Plan::Pay(ref payment) = *plan {

|

||||

allow_deposits && *from == payment.to

|

||||

} else {

|

||||

false

|

||||

}

|

||||

self.last_id

|

||||

}

|

||||

|

||||

fn is_deposit(allow_deposits: bool, from: &PublicKey, to: &PublicKey) -> bool {

|

||||

allow_deposits && from == to

|

||||

}

|

||||

|

||||

pub fn process_transaction(self: &mut Self, tr: Transaction<i64>) -> Result<()> {

|

||||

if !tr.verify() {

|

||||

return Err(AccountingError::InvalidTransfer);

|

||||

}

|

||||

|

||||

if self.get_balance(&tr.from).unwrap_or(0) < tr.asset {

|

||||

/// Process and log the given Transaction.

|

||||

pub fn log_verified_transaction(&mut self, tr: Transaction) -> Result<()> {

|

||||

if self.get_balance(&tr.from).unwrap_or(0) < tr.tokens {

|

||||

return Err(AccountingError::InsufficientFunds);

|

||||

}

|

||||

|

||||

self.process_verified_transaction(&tr, false)?;

|

||||

if let Err(SendError(_)) = self.historian.sender.send(Event::Transaction(tr)) {

|

||||

if let Err(SendError(_)) = self.historian

|

||||

.sender

|

||||

.send(Signal::Event(Event::Transaction(tr)))

|

||||

{

|

||||

return Err(AccountingError::SendError);

|

||||

}

|

||||

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Commit funds to the 'to' party.

|

||||

fn complete_transaction(self: &mut Self, tr: &Transaction<i64>) {

|

||||

if self.balances.contains_key(&tr.to) {

|

||||

if let Some(x) = self.balances.get_mut(&tr.to) {

|

||||

*x += tr.asset;

|

||||

}

|

||||

} else {

|

||||

self.balances.insert(tr.to, tr.asset);

|

||||

/// Verify and process the given Transaction.

|

||||

pub fn log_transaction(&mut self, tr: Transaction) -> Result<()> {

|

||||

if !tr.verify() {

|

||||

return Err(AccountingError::InvalidTransfer);

|

||||

}

|

||||

|

||||

self.log_verified_transaction(tr)

|

||||

}

|

||||

|

||||

/// Return funds to the 'from' party.

|

||||

fn cancel_transaction(self: &mut Self, tr: &Transaction<i64>) {

|

||||

if let Some(x) = self.balances.get_mut(&tr.from) {

|

||||

*x += tr.asset;

|

||||

}

|

||||

}

|

||||

|

||||

// TODO: Move this to transaction.rs

|

||||

fn all_satisfied(&self, conds: &[Condition]) -> bool {

|

||||

let mut satisfied = true;

|

||||

for cond in conds {

|

||||

if let &Condition::Timestamp(dt) = cond {

|

||||

if dt > self.last_time {

|

||||

satisfied = false;

|

||||

}

|

||||

} else {

|

||||

satisfied = false;

|

||||

}

|

||||

}

|

||||

satisfied

|

||||

}

|

||||

|

||||

/// Process a Transaction that has already been verified.

|

||||

fn process_verified_transaction(

|

||||

self: &mut Self,

|

||||

tr: &Transaction<i64>,

|

||||

tr: &Transaction,

|

||||

allow_deposits: bool,

|

||||

) -> Result<()> {

|

||||

if !reserve_signature(&mut self.historian.signatures, &tr.sig) {

|

||||

if !self.historian.reserve_signature(&tr.sig) {

|

||||

return Err(AccountingError::InvalidTransferSignature);

|

||||

}

|

||||

|

||||

if !tr.unless_any.is_empty() {

|

||||

// TODO: Check to see if the transaction is expired.

|

||||

}

|

||||

|

||||

if !Self::is_deposit(allow_deposits, &tr.from, &tr.to) {

|

||||

if !Self::is_deposit(allow_deposits, &tr.from, &tr.plan) {

|

||||

if let Some(x) = self.balances.get_mut(&tr.from) {

|

||||

*x -= tr.asset;

|

||||

*x -= tr.tokens;

|

||||

}

|

||||

}

|

||||

|

||||

if !self.all_satisfied(&tr.if_all) {

|

||||

self.pending.insert(tr.sig, tr.clone());

|

||||

return Ok(());

|

||||

let mut plan = tr.plan.clone();

|

||||

plan.apply_witness(&Witness::Timestamp(self.last_time));

|

||||

|

||||

if plan.is_complete() {

|

||||

complete_transaction(&mut self.balances, &plan);

|

||||

} else {

|

||||

self.pending.insert(tr.sig, plan);

|

||||

}

|

||||

|

||||

self.complete_transaction(tr);

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Signature that has already been verified.

|

||||

fn process_verified_sig(&mut self, from: PublicKey, tx_sig: Signature) -> Result<()> {

|

||||

let mut cancel = false;

|

||||

if let Some(tr) = self.pending.get(&tx_sig) {

|

||||

// Cancel:

|

||||

// if Signature(from) is in unless_any, return funds to tx.from, and remove the tx from this map.

|

||||

|

||||

// TODO: Use find().

|

||||

for cond in &tr.unless_any {

|

||||

if let Condition::Signature(pubkey) = *cond {

|

||||

if from == pubkey {

|

||||

cancel = true;

|

||||

break;

|

||||

}

|

||||

}

|

||||

if let Occupied(mut e) = self.pending.entry(tx_sig) {

|

||||

e.get_mut().apply_witness(&Witness::Signature(from));

|

||||

if e.get().is_complete() {

|

||||

complete_transaction(&mut self.balances, e.get());

|

||||

e.remove_entry();

|

||||

}

|

||||

}

|

||||

};

|

||||

|

||||

if cancel {

|

||||

if let Some(tr) = self.pending.remove(&tx_sig) {

|

||||

self.cancel_transaction(&tr);

|

||||

}

|

||||

}

|

||||

|

||||

// Process Multisig:

|

||||

// otherwise, if "Signature(from) is in if_all, remove it. If that causes that list

|

||||

// to be empty, add the asset to to, and remove the tx from this map.

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Witness Timestamp that has already been verified.

|

||||

fn process_verified_timestamp(&mut self, from: PublicKey, dt: DateTime<Utc>) -> Result<()> {

|

||||

// If this is the first timestamp we've seen, it probably came from the genesis block,

|

||||

// so we'll trust it.

|

||||

@@ -205,92 +176,85 @@ impl Accountant {

|

||||

} else {

|

||||

return Ok(());

|

||||

}

|

||||

// TODO: Lookup pending Transaction waiting on time, signed by a whitelisted PublicKey.

|

||||

|

||||

// Expire:

|

||||

// if a Timestamp after this DateTime is in unless_any, return funds to tx.from,

|

||||

// and remove the tx from this map.

|

||||

|

||||

// Check to see if any timelocked transactions can be completed.

|

||||

let mut completed = vec![];

|

||||

for (key, tr) in &self.pending {

|

||||

for cond in &tr.if_all {

|

||||

if let Condition::Timestamp(dt) = *cond {

|

||||

if self.last_time >= dt {

|

||||

if tr.if_all.len() == 1 {

|

||||

completed.push(*key);

|

||||

}

|

||||

}

|

||||

}

|

||||

for (key, plan) in &mut self.pending {

|

||||

plan.apply_witness(&Witness::Timestamp(self.last_time));

|

||||

if plan.is_complete() {

|

||||

complete_transaction(&mut self.balances, plan);

|

||||

completed.push(key.clone());

|

||||

}

|

||||

// TODO: Add this in once we start removing constraints

|

||||

//if tr.if_all.is_empty() {

|

||||

// // TODO: Remove tr from pending

|

||||

// self.complete_transaction(tr);

|

||||

//}

|

||||

}

|

||||

|

||||

for key in completed {

|

||||

if let Some(tr) = self.pending.remove(&key) {

|

||||

self.complete_transaction(&tr);

|

||||

}

|

||||

self.pending.remove(&key);

|

||||

}

|

||||

|

||||

Ok(())

|

||||

}

|

||||

|

||||

/// Process a Transaction or Witness that has already been verified.

|

||||

fn process_verified_event(self: &mut Self, event: &Event, allow_deposits: bool) -> Result<()> {

|

||||

match *event {

|

||||

Event::Tick => Ok(()),

|

||||

Event::Transaction(ref tr) => self.process_verified_transaction(tr, allow_deposits),

|

||||

Event::Signature { from, tx_sig, .. } => self.process_verified_sig(from, tx_sig),

|

||||

Event::Timestamp { from, dt, .. } => self.process_verified_timestamp(from, dt),

|

||||

}

|

||||

}

|

||||

|

||||

/// Create, sign, and process a Transaction from `keypair` to `to` of

|

||||

/// `n` tokens where `last_id` is the last Entry ID observed by the client.

|

||||

pub fn transfer(

|

||||

self: &mut Self,

|

||||

n: i64,

|

||||

keypair: &KeyPair,

|

||||

to: PublicKey,

|

||||

last_id: Hash,

|

||||

) -> Result<Signature> {

|

||||

let tr = Transaction::new(keypair, to, n, self.last_id);

|

||||

let tr = Transaction::new(keypair, to, n, last_id);

|

||||

let sig = tr.sig;

|

||||

self.process_transaction(tr).map(|_| sig)

|

||||

self.log_transaction(tr).map(|_| sig)

|

||||

}

|

||||

|

||||

/// Create, sign, and process a postdated Transaction from `keypair`

|

||||

/// to `to` of `n` tokens on `dt` where `last_id` is the last Entry ID

|

||||

/// observed by the client.

|

||||

pub fn transfer_on_date(

|

||||

self: &mut Self,

|

||||

n: i64,

|

||||

keypair: &KeyPair,

|

||||

to: PublicKey,

|

||||

dt: DateTime<Utc>,

|

||||

last_id: Hash,

|

||||

) -> Result<Signature> {

|

||||

let tr = Transaction::new_on_date(keypair, to, dt, n, self.last_id);

|

||||

let tr = Transaction::new_on_date(keypair, to, dt, n, last_id);

|

||||

let sig = tr.sig;

|

||||

self.process_transaction(tr).map(|_| sig)

|

||||

self.log_transaction(tr).map(|_| sig)

|

||||

}

|

||||

|

||||

pub fn get_balance(self: &Self, pubkey: &PublicKey) -> Option<i64> {

|

||||

self.balances.get(pubkey).map(|x| *x)

|

||||

self.balances.get(pubkey).cloned()

|

||||

}

|

||||

}

|

||||

|

||||

#[cfg(test)]

|

||||

mod tests {

|

||||

use super::*;

|

||||

use recorder::ExitReason;

|

||||

use signature::KeyPairUtil;

|

||||

use logger::ExitReason;

|

||||

|

||||

#[test]

|

||||

fn test_accountant() {

|

||||

let alice = Mint::new(10_000);

|

||||

let bob_pubkey = KeyPair::new().pubkey();

|

||||

let mut acc = Accountant::new(&alice, Some(2));

|

||||

acc.transfer(1_000, &alice.keypair(), bob_pubkey).unwrap();

|

||||

acc.transfer(1_000, &alice.keypair(), bob_pubkey, alice.seed())

|

||||

.unwrap();

|

||||

assert_eq!(acc.get_balance(&bob_pubkey).unwrap(), 1_000);

|

||||

|

||||

acc.transfer(500, &alice.keypair(), bob_pubkey).unwrap();

|

||||

acc.transfer(500, &alice.keypair(), bob_pubkey, alice.seed())

|

||||

.unwrap();

|

||||

assert_eq!(acc.get_balance(&bob_pubkey).unwrap(), 1_500);

|

||||

|

||||

drop(acc.historian.sender);

|

||||

@@ -305,9 +269,10 @@ mod tests {

|

||||

let alice = Mint::new(11_000);

|

||||

let mut acc = Accountant::new(&alice, Some(2));

|

||||

let bob_pubkey = KeyPair::new().pubkey();

|

||||

acc.transfer(1_000, &alice.keypair(), bob_pubkey).unwrap();

|

||||

acc.transfer(1_000, &alice.keypair(), bob_pubkey, alice.seed())

|

||||

.unwrap();

|

||||

assert_eq!(

|

||||

acc.transfer(10_001, &alice.keypair(), bob_pubkey),

|

||||

acc.transfer(10_001, &alice.keypair(), bob_pubkey, alice.seed()),

|

||||

Err(AccountingError::InsufficientFunds)

|

||||

);

|

||||

|

||||

@@ -322,13 +287,38 @@ mod tests {

|

||||

);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_overspend_attack() {

|

||||

let alice = Mint::new(1);

|

||||

let mut acc = Accountant::new(&alice, None);

|

||||

let bob_pubkey = KeyPair::new().pubkey();

|

||||

let mut tr = Transaction::new(&alice.keypair(), bob_pubkey, 1, alice.seed());

|

||||

if let Plan::Pay(ref mut payment) = tr.plan {

|

||||

payment.tokens = 2; // <-- attack!

|

||||

}

|

||||

assert_eq!(

|

||||

acc.log_transaction(tr.clone()),

|

||||

Err(AccountingError::InvalidTransfer)

|

||||

);

|

||||

|

||||

// Also, ensure all branches of the plan spend all tokens

|

||||

if let Plan::Pay(ref mut payment) = tr.plan {

|

||||